
What Is Synthetic Media and Why It’s the Future of Digital Content

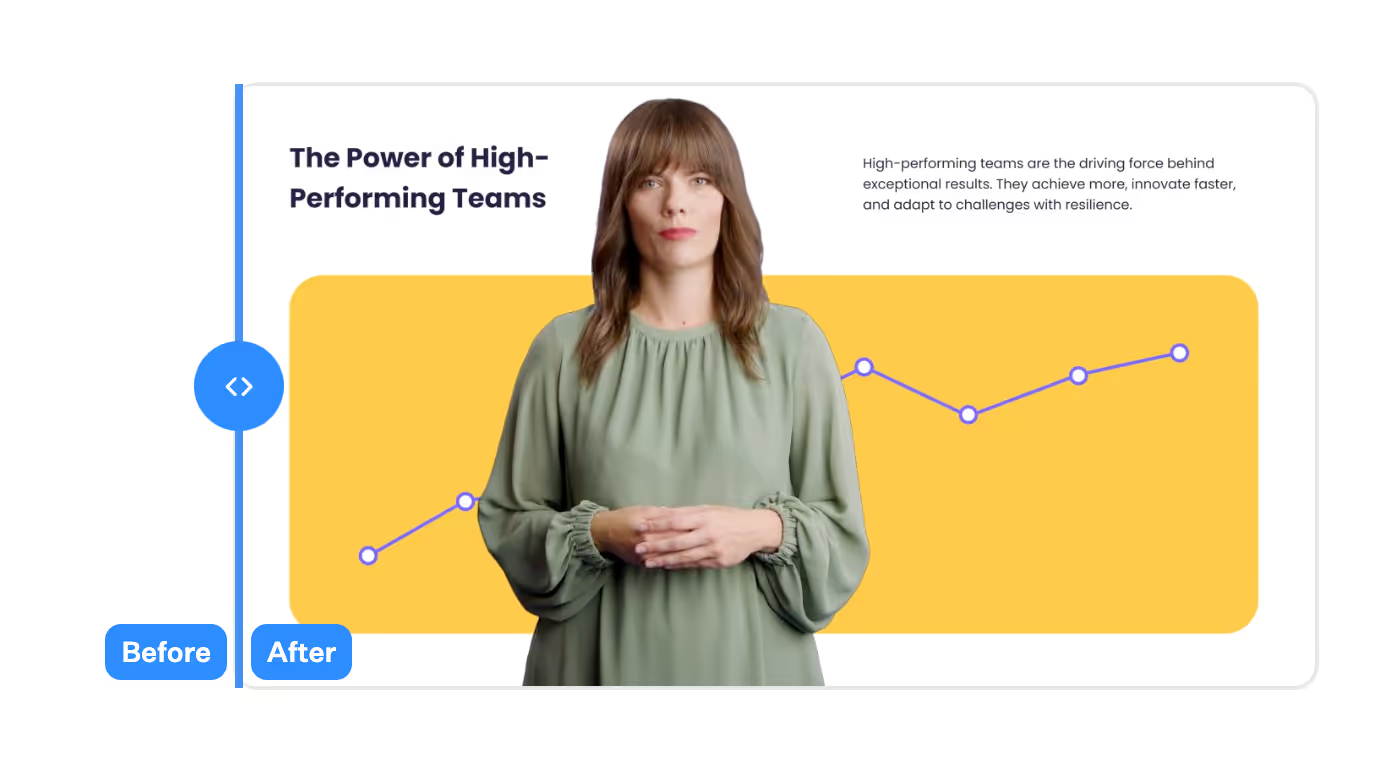

Synthetic media refers to content created or modified by AI—text, images, audio, and video. Instead of filming or recording in the physical world, content is generated in software, which reduces time and cost and allows for personalization at scale. It also raises important questions about accuracy, consent, and misuse.

The technology has matured quickly. Generative adversarial networks (GANs), introduced a decade ago, paved the way for photorealistic images; speech models made voices more natural; and transformers advanced language and multimodal generation. Alongside the benefits came deepfakes, scams, and a wave of platform policy changes. Organizations involved in training, communications, or localization can adopt this capability, but only with clear rules and strong oversight.

A Quick Timeline of Synthetic Media’s Rise

- 2014: GANs are introduced, laying the groundwork for photorealistic image synthesis.

- 2016: WaveNet models raw audio for more natural speech.

- 2017: The Transformer architecture is introduced, later powering humanlike language and music generation; “deepfakes” gain attention on Reddit, and r/deepfakes is banned in early 2018.

- 2020: Large-scale models like GPT-3 and Jukebox reach mainstream attention.

Platforms responded: major sites banned non-consensual deepfake porn in 2018–2019, and social networks rolled out synthetic media labels and stricter policies before the 2020 U.S. election.

The scale is significant. A Harvard Misinformation Review analysis found 556 tweets with AI-generated media amassed 1.5B+ views. Images dominated, but AI videos skewed political and drew higher median views.

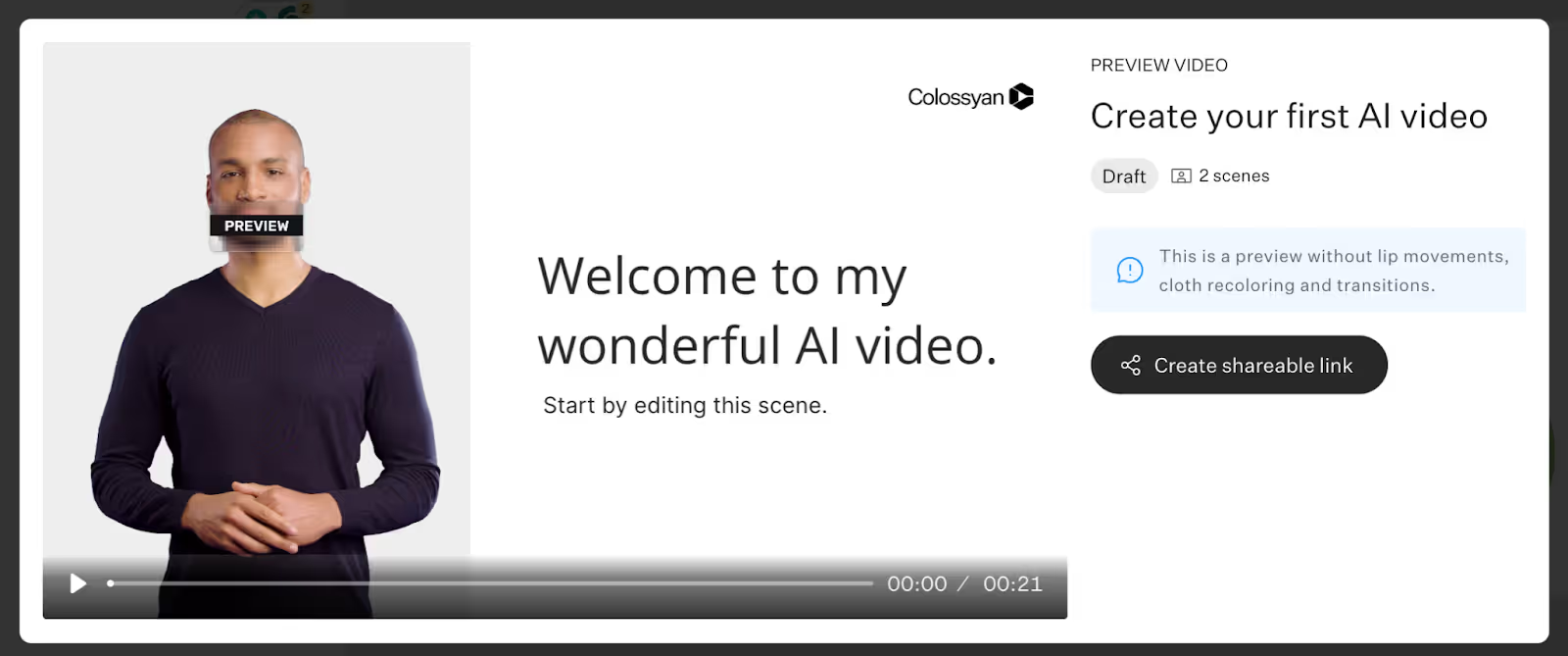

Production has also moved from studios to browsers. Tools like Doc2Video or Prompt2Video allow teams to upload a Word file or type a prompt to generate draft videos with scenes, visuals, and timing ready for refinement.

What Exactly Is Synthetic Media?

Synthetic media includes AI-generated or AI-assisted content. Common types:

- Synthetic video, images, voice, AI-generated text

- AI influencers, mixed reality, face swaps

Examples:

- Non-synthetic: a newspaper article with a staff photo

- Synthetic: an Instagram AR filter adding bunny ears, or a talking-head video created from a text script

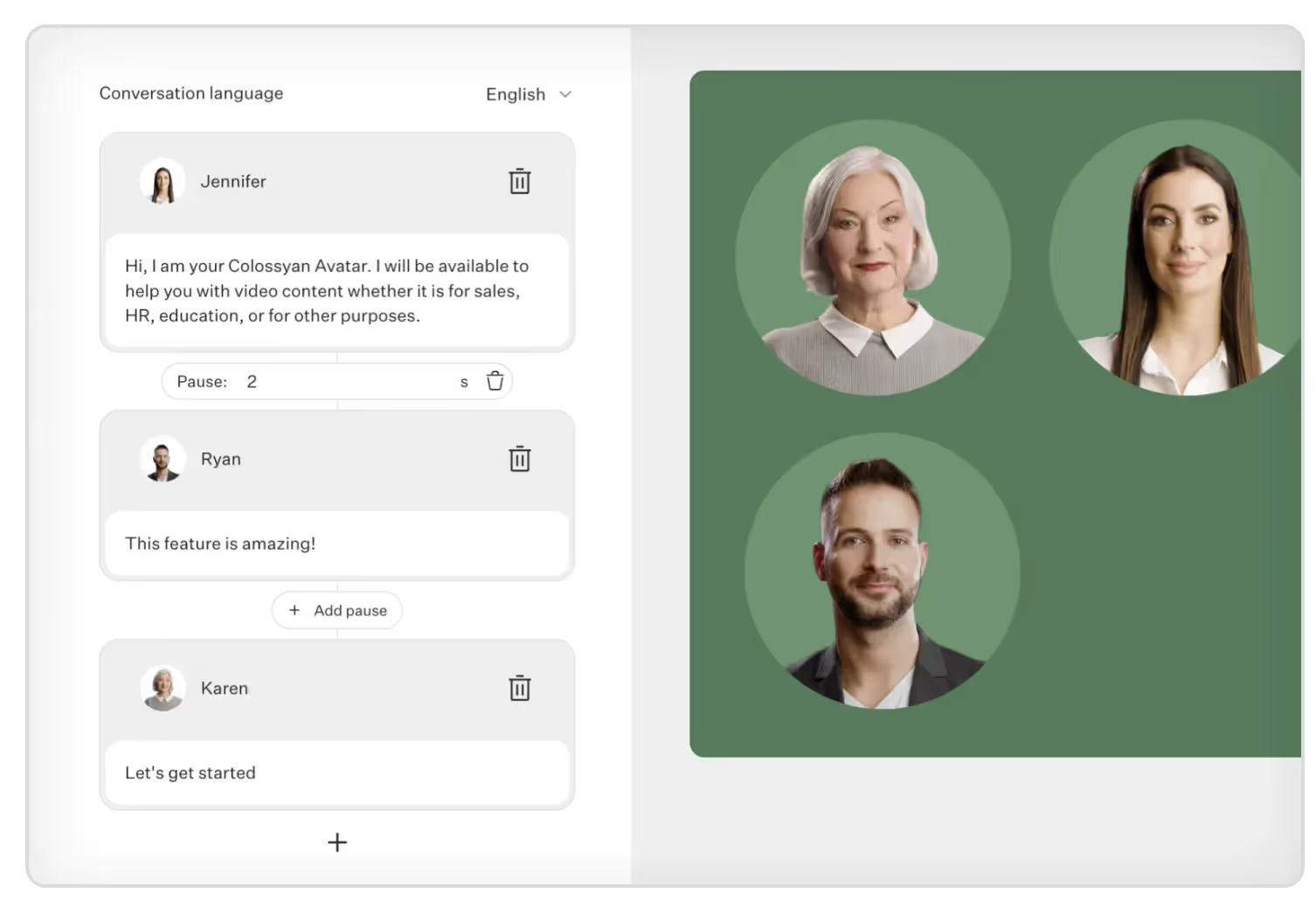

Digital personas like Lil Miquela show the cultural impact of fully synthetic characters. Synthetic video can use customizable AI avatars or narration-only scenes. Stock voices or cloned voices (with consent) ensure consistent speakers, and Conversation Mode allows role-plays with multiple presenters in one scene.


Why Synthetic Media Is the Future of Digital Content

Speed and cost: AI enables faster production. For instance, one creator produced a 30-page children’s book in under an hour using AI tools. Video is following a similar trajectory, making high-quality effects accessible to small teams.

Personalization and localization: When marginal cost approaches zero, organizations can produce audience-specific variants by role, region, or channel.

Accessibility: UNESCO-backed guidance highlights synthetic audio, captions, real-time transcription, and instant multilingual translation for learners with special needs. VR/AR and synthetic simulations provide safe practice environments for complex tasks.

Practical production tools:

- Rapid drafts: Doc2Video converts dense PDFs and Word files into structured scenes.

- Localization: Instant Translation creates language variants while preserving layout and animation.

- Accessibility: Export SRT/VTT captions and audio-only versions; Pronunciations ensure correct terminology.

Practical Use Cases

Learning and Development

- Convert SOPs and handbooks into interactive training with quizzes and branching. Generative tools can help build lesson plans and simulations.

- Recommended tools: Doc2Video or PPT Import, Interaction for MCQs, Conversation Mode for role-plays, SCORM export, Analytics for plays and quiz scores.

Corporate Communications and Crisis Readiness

- Simulate risk scenarios, deliver multilingual updates, and standardize compliance refreshers. AI scams have caused real losses, including a €220,000 voice-cloning fraud and market-moving fake videos (Forbes overview).

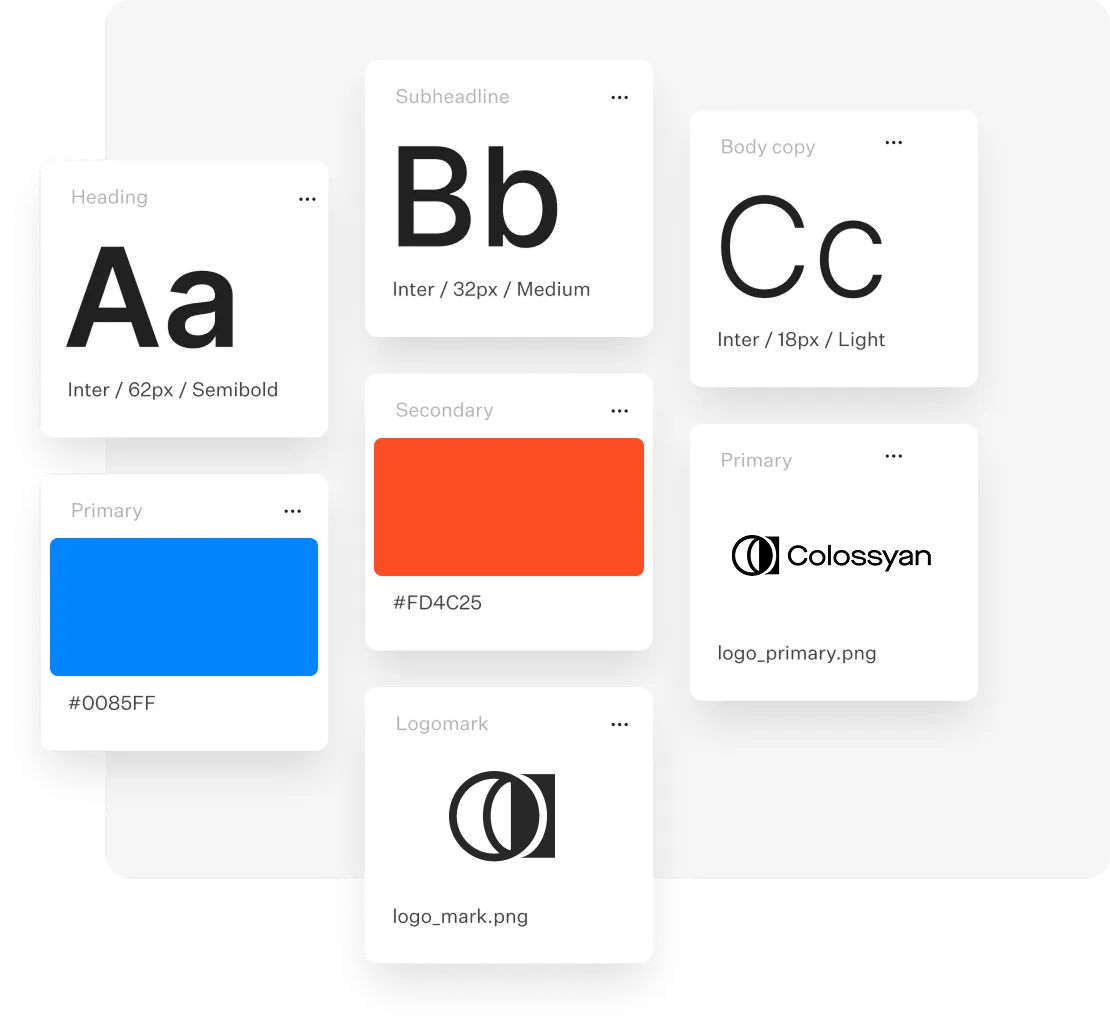

- Recommended tools: Instant Avatars, Brand Kits, Workspace Management, Commenting for approvals.

Global Marketing and Localization

- Scale product explainers and onboarding across regions with automated lip-synced redubbing.

- Recommended tools: Instant Translation with multilingual voices, Pronunciations, Templates.

Education and Regulated Training

- Build scenario-based modules for healthcare or finance.

- Recommended tools: Branching for decision trees, Analytics, SCORM to track pass/fail.
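At its core, a branching module is a decision tree: each scene poses a choice, and each choice points either to the next scene or to an outcome. The sketch below models that in plain Python. The `Node` class, the scenario content, and the `walk` helper are hypothetical and do not reflect how any authoring tool actually stores branches.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    """One scene in a hypothetical branching scenario."""
    prompt: str
    # Maps a choice label to the id of the next node; empty for terminal nodes.
    choices: Dict[str, str] = field(default_factory=dict)
    # Set only on terminal nodes, e.g. "pass" or "fail".
    outcome: Optional[str] = None


# Illustrative finance scenario; node ids and wording are made up.
SCENARIO: Dict[str, Node] = {
    "start": Node(
        "A client asks you to split a large cash deposit across several days.",
        {"agree": "split", "escalate": "report"},
    ),
    "split": Node("You split the deposit. This may breach reporting rules.", outcome="fail"),
    "report": Node("You flag the request to compliance.", outcome="pass"),
}


def walk(scenario: Dict[str, Node], picks: List[str],
         start: str = "start") -> Tuple[List[str], Optional[str]]:
    """Follow a learner's picks from the start node; return the path and final outcome."""
    path = [start]
    node = scenario[start]
    for pick in picks:
        if pick not in node.choices:
            raise ValueError(f"invalid choice {pick!r} at node {path[-1]!r}")
        path.append(node.choices[pick])
        node = scenario[path[-1]]
    return path, node.outcome
```

Calling `walk(SCENARIO, ["escalate"])` returns `(["start", "report"], "pass")`. Keeping the tree as data rather than code makes it easy for subject-matter experts to review before anyone builds it in the editor.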

Risk Landscape and Mitigation

Prevalence and impact are increasing. Two in three cybersecurity professionals observed deepfakes in business disinformation in 2022, and AI-generated posts have accumulated more than 1.5 billion views (Harvard analysis).

Detection methods look for biological signals, phoneme–viseme mismatches, and frame-level inconsistencies. Intel’s FakeCatcher reports 96% accuracy in real time, and a classifier Google trained to detect AudioLM-generated speech reports ~99% accuracy. Watermarking and C2PA metadata help establish provenance.

Governance recommendations: Follow the Partnership on AI’s Responsible Practices for Synthetic Media, which emphasize consent, disclosure, and transparency. Durable, tamper-resistant disclosure remains a research challenge. On the regulatory side, the UK Online Safety Bill criminalizes revenge porn (techUK summary).

Risk reduction strategies:

- Use in-video disclosures (text overlays or intro/end cards) stating content is synthetic.

- Enforce approval roles (admin/editor/viewer) and maintain Commenting threads as audit trails.

- Monitor Analytics for distribution anomalies.

- Add Pronunciations to prevent misreads of sensitive terms.
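Approval roles are most useful when every action is checked against a role and logged. Here is a minimal sketch, assuming a hypothetical permission map for the admin/editor/viewer roles named above. Real workspace permissions vary by platform, and the `attempt` helper is illustrative only.

```python
from enum import Enum
from typing import List, Tuple


class Role(Enum):
    ADMIN = "admin"
    EDITOR = "editor"
    VIEWER = "viewer"


# Hypothetical permission map; adjust to match your platform's actual roles.
PERMISSIONS = {
    Role.ADMIN: {"view", "comment", "edit", "approve", "publish"},
    Role.EDITOR: {"view", "comment", "edit"},
    Role.VIEWER: {"view", "comment"},
}

# Every attempt, allowed or not, is recorded as (user, role, action, allowed).
AUDIT_LOG: List[Tuple[str, str, str, bool]] = []


def attempt(user: str, role: Role, action: str) -> bool:
    """Return whether the role may perform the action, and log the attempt."""
    allowed = action in PERMISSIONS[role]
    AUDIT_LOG.append((user, role.value, action, allowed))
    return allowed
```

With this map, `attempt("ana", Role.EDITOR, "publish")` returns `False` and leaves a log entry, so only admins can push a synthetic video live and every refusal remains visible in the audit trail.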

Responsible Adoption Playbook (30-Day Pilot)

Week 1: Scope and Governance

- Pick 2–3 training modules, write disclosure language, set workspace roles, create Brand Kit, add Pronunciations.

Week 2: Produce MVPs

- Use Doc2Video or PPT Import for drafts. Add MCQs, Conversation Mode, Templates, Avatars, Pauses, and Animation Markers.

Week 3: Localize and Test

- Create 1–2 language variants with Instant Translation. Check layout, timing, multilingual voices, accessibility (captions, audio-only).

Week 4: Deploy and Measure

- Export SCORM 1.2/2004, set pass marks, track plays, time, and scores. Collect feedback, iterate, finalize disclosure SOPs.
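A pass mark ultimately comes down to the values a course reports to the LMS. The data model element names below (`cmi.core.lesson_status` in SCORM 1.2; `cmi.completion_status`, `cmi.success_status`, and `cmi.score.scaled` in SCORM 2004) are real. The decision logic, the function names, and the 0–100 raw score scale are assumptions for illustration. In SCORM 1.2, some LMSs also apply the mastery score themselves.

```python
from typing import Dict


def scorm12_status(raw_score: float, mastery_score: float, finished: bool) -> str:
    """Value a course might write to cmi.core.lesson_status (SCORM 1.2).

    Assumes raw scores on a 0-100 scale and that unfinished attempts
    should be reported as "incomplete".
    """
    if not finished:
        return "incomplete"
    return "passed" if raw_score >= mastery_score else "failed"


def scorm2004_status(raw: float, max_score: float, scaled_passing: float,
                     finished: bool) -> Dict[str, str]:
    """Values a course might set in SCORM 2004, where completion and success are separate."""
    scaled = raw / max_score  # cmi.score.scaled is expected in the range -1..1
    if finished:
        success = "passed" if scaled >= scaled_passing else "failed"
    else:
        success = "unknown"
    return {
        "cmi.completion_status": "completed" if finished else "incomplete",
        "cmi.success_status": success,
        "cmi.score.scaled": f"{scaled:.2f}",
    }
```

The key design difference is that SCORM 2004 lets a learner be "completed" but "failed", which is often what compliance reporting needs to see.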

Measurement and ROI

- Production: time to first draft, number of review cycles, cost per minute of video.

- Learning: completion rate, average quiz scores, branch choices.

- Localization: time to launch variants, pronunciation errors, engagement metrics.

- Governance: percent of content with disclosures, approval turnaround, incident rate.
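Several of these metrics are simple ratios over exported records. The following is a minimal sketch, assuming a hypothetical per-learner export with `completed` and `quiz_score` fields. The field names and helpers are illustrative, not any platform's export schema.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class LearnerRecord:
    """Hypothetical per-learner export row; field names are illustrative."""
    learner: str
    completed: bool
    quiz_score: Optional[float]  # None if the learner never reached the quiz


def completion_rate(records: List[LearnerRecord]) -> float:
    """Share of learners who finished the video."""
    return sum(r.completed for r in records) / len(records) if records else 0.0


def average_quiz_score(records: List[LearnerRecord]) -> Optional[float]:
    """Mean score among learners who actually took the quiz."""
    scores = [r.quiz_score for r in records if r.quiz_score is not None]
    return mean(scores) if scores else None


def disclosure_coverage(with_disclosure: int, total_videos: int) -> float:
    """Share of published synthetic videos that carry an on-screen disclosure."""
    return with_disclosure / total_videos if total_videos else 0.0
```

Excluding learners who never reached the quiz from the average (rather than counting them as zero) keeps quiz quality separate from completion, which is already tracked on its own.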

Top Script Creator Tools to Write and Plan Your Videos Faster

If video projects tend to slow down at the scripting stage, modern AI script creators can now draft, structure, and storyboard faster than ever—before handing off to a video platform for production, analytics, and tracking.

Below is an objective, stats-backed roundup of top script tools, plus ways to plug scripts into Colossyan to generate on-brand training videos with analytics, branching, and SCORM export.

What to look for in a script creator

- Structure and coherence: scene and act support, genre templates, outline-to-script.

- Targeting and tone: platform outputs (YouTube vs TikTok), tones (serious, humorous), length controls.

- Collaboration and revisions: comments, versioning, and ownership clarity.

- Integrations and exports: easy movement of scripts into a video workflow.

- Security and data policy: content ownership, training data usage.

- Multilingual capability: write once, adapt globally.

- Pacing and delivery: words-per-minute guidance and teleprompter-ready text.

Top script creator tools (stats, standout features, and example prompts)

1) Squibler AI Script Generator

Quick stat: 20,000 writers use Squibler AI Toolkit

Standout features:

- Free on-page AI Script Generator with unlimited regenerations; editable in the editor after signup.

- Storytelling-focused AI with genre templates; Smart Writer extends scenes using context.

- Output targeting for YouTube, TV shows, plays, Instagram Reels; tones include Humorous, Serious, Sarcastic, Optimistic, Objective.

- Users retain 100% rights to generated content.

- Prompt limit: max 3,000 words; cannot be empty.

Ideal for: Fast ideation and structured long-form or short-form scripts with coherent plot and character continuity.

Example prompt: “Write a serious, medium-length YouTube explainer on ‘Zero-Trust Security Basics’ with a clear 15-second hook, 3 key sections, and a 20-second summary.”

Integration with Colossyan: Copy Squibler’s scenes into Colossyan’s Editor, assign avatars, apply Brand Kits, and set animation markers for timing and emphasis. Export as SCORM with quizzes for tracking.

2) ProWritingAid Script Generator

Quick stat: 4+ million writers use ProWritingAid

Standout features:

- Free plan edits/runs reports on up to 500 words; 3 “Sparks” per day to generate scripts.

- Plagiarism checker scans against 1B+ web pages, published works, and academic papers.

- Integrations with Word, Google Docs, Scrivener, Atticus, Apple Notes; desktop app and browser extensions.

- Bank-level security; user text is not used to train algorithms.

Ideal for: Polishing and compliance-heavy workflows needing grammar, style, and originality checks.

Integration with Colossyan: Scripts can be proofed for grammar and clarity, with pronunciations added for niche terms. SCORM export allows analytics tracking.

3) Teleprompter.com Script Generator

Quick stat: Since 2018, helped 1M+ creators record 17M+ videos

Standout guidance:

- Calibrated for ~150 WPM: 30s ≈ 75–80 words; 1 min ≈ 150–160; 3 min ≈ 450–480; 5 min ≈ 750–800; 10 min ≈ 1,500–1,600.

- Hooks in the first 3–5 seconds are critical.

- Platform tips: YouTube favors longer, value-driven scripts with CTAs; TikTok/IG Reels need instant hooks; LinkedIn prefers professional thought leadership.

- Teleprompter-optimized scripts include natural pauses, emphasis markers, and speaking-speed calculators.

Ideal for: On-camera delivery and precise pacing.

Integration with Colossyan: Use the WPM guidance to set word count, add pauses and animation markers for emphasis, and resize the canvas for platform-specific formats (16:9 for YouTube, 9:16 for Reels).

4) Celtx

Quick stats: 4.4/5 average rating from 1,387 survey responses; trusted by 7M+ storytellers

Standout features:

- End-to-end workflow: script formatting (film/TV, theater, interactive), Beat Sheet, Storyboard, shot lists, scheduling, budgeting.

- Collaboration: comments, revision history, presence awareness.

- 7-day free trial; option to remain on free plan.

Ideal for: Teams managing full pre-production workflows.

Integration with Colossyan: Approved slides and notes can be imported; avatars, branching, and MCQs convert storyboards into interactive training.

5) QuillBot AI Script Generator

Quick stats: Trustpilot 4.8; Chrome extension 4.7/5; 5M+ users

Standout features:

- Free tier and Premium for long-form generation.

- Supports multiple languages; adapts scripts to brand tone.

Ideal for: Rapid drafting and tone adaptation across languages and channels.

Integration with Colossyan: Scripts can be localized with Instant Translation; multilingual avatars and voices allow versioning and layout tuning.

6) Boords AI Script Generator

Quick stats: Trusted by 1M+ video professionals; scripts in 18+ languages

Standout features:

- Script and storyboard generator, versioning, commenting, real-time feedback.

Ideal for: Agencies and teams wanting script-to-storyboard in one platform.

Integration with Colossyan: Approved scripts can be imported and matched to avatars and scenes; generate videos for each language variant.

7) PlayPlay AI Script Generator

Quick stats: Used by 3,000+ teams; +165% social video views reported

Standout features:

- Free generator supports EN, FR, DE, ES, PT, IT; outputs platform-specific scripts.

- Enables fast turnaround of high-volume social content.

Ideal for: Marketing and communications teams.

Integration with Colossyan: Scripts can be finalized for avatars, gestures, and brand layouts; engagement tracked via analytics.

Pacing cheat sheet: words-per-minute for common video lengths

Based on Teleprompter.com ~150 WPM guidance:

- 30 seconds: 75–80 words

- 1 minute: 150–160 words

- 2 minutes: 300–320 words

- 3 minutes: 450–480 words

- 5 minutes: 750–800 words

- 10 minutes: 1,500–1,600 words
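The ranges above work out to roughly 150 to 160 words per minute, multiplied by the runtime. Here is a small sketch of that arithmetic; the function names and the 150/160 defaults are assumptions drawn from the cheat sheet, not from any tool's API.

```python
from typing import Tuple


def word_budget(seconds: float, low_wpm: int = 150, high_wpm: int = 160) -> Tuple[int, int]:
    """Return the (min, max) word count for a target runtime."""
    minutes = seconds / 60
    return round(minutes * low_wpm), round(minutes * high_wpm)


def estimated_seconds(script: str, wpm: int = 150) -> float:
    """Rough spoken runtime of a script at a given speaking rate."""
    return len(script.split()) / wpm * 60
```

For example, `word_budget(120)` returns `(300, 320)`, matching the 2-minute row, and a 300-word draft comes out at about 120 seconds at 150 WPM.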

From script to finished video: sample workflows in Colossyan

Workflow A: Policy training in under a day

- Draft: Script created in Squibler with a 15-second hook and 3 sections

- Polish: Grammar and originality checked in ProWritingAid

- Produce: Scenes built in Colossyan with avatar, Brand Kit, MCQs

- Measure: Analytics tracks plays, time watched, and quiz scores; export CSV for reporting

Workflow B: Scenario-based role-play for sales

- Outline: Beats and dialogue in Celtx with approval workflow

- Script: Alternate endings generated in Squibler Smart Writer for branching

- Produce: Conversation Mode in Colossyan with avatars, branching, and gestures

- Localize: Spanish variant added with Instant Translation

Workflow C: On-camera style delivery without filming

- Draft: Teleprompter.com script (~300 words for 2 min)

- Produce: Clone SME voice, assign avatar, add pauses and animation markers

- Distribute: Embed video in LMS, track retention and quiz outcomes

L&D-specific tips: compliance, localization, and reporting

- Brand Kits ensure consistent fonts/colors/logos across departments

- Pronunciations maintain accurate terminology

- Multi-language support via QuillBot or Boords + Instant Translation

- SCORM export enables pass marks and LMS analytics

- Slide/PDF imports convert notes into narration; avatars and interactive elements enhance learning

Quick picks by use case

- Story-first scripts: Squibler

- Grammar/style/originality: ProWritingAid

- Pacing and delivery: Teleprompter.com

- Full pre-production workflow: Celtx

- Multilingual drafting: QuillBot

- Quick browser ideation: Colossyan

- Script-to-storyboard collaboration: Boords

- Social platform-specific: PlayPlay

A Complete Guide to eLearning Software Development in 2025

eLearning software development in 2025 blends interoperable standards (SCORM, xAPI, LTI), cloud-native architectures, AI-driven personalization, robust integrations (ERP/CRM/HRIS), and rigorous security and accessibility to deliver engaging, measurable training at global scale—often accelerated by AI video authoring and interactive microlearning.

The market is big and getting bigger. The global eLearning market is projected to reach about $1T by 2032 (14% CAGR). Learners want online options: 73% of U.S. students favor online classes, and Coursera learners grew 438% over five years. The ROI is strong: eLearning can deliver 120–430% annual ROI, cut learning costs by 20–50%, boost productivity by 30–60%, and improve knowledge retention by 25–60%.

This guide covers strategy, features, standards, architecture, timelines, costs, tools, analytics, localization, and practical ways to accelerate content—plus where an AI video layer helps.

2025 Market Snapshot and Demand Drivers

Across corporate training, K-12, higher ed, and professional certification, the drivers are clear: upskilling at scale, mobile-first learning, and cloud-native platforms that integrate with the rest of the stack. Demand clusters around AI personalization, VR/AR, gamification, and virtual classrooms—alongside secure, compliant data handling.

- Interoperability is the baseline. SCORM remains the most widely adopted, xAPI expands tracking beyond courses, and LTI connects tools to LMS portals.

- Real-world scale is proven. A global SaaS eLearning platform runs with 2M+ active users and supports SCORM, xAPI, LTI, AICC, and cmi5, serving enterprise brands like Visa and PepsiCo (vendor case study from the same source).

- Enterprise training portals work. A Moodle-based portal at a major fintech was “highly rated” by employees, proving that well-executed LMS deployments can drive adoption (Itransition’s client example).

On the compliance side, expect GDPR, HIPAA, FERPA, COPPA, SOC 2 Type II, and WCAG accessibility as table stakes in many sectors.

Business Case and ROI (with Examples)

The economics still favor eLearning. Industry benchmarks show 120–430% annual ROI, 20–50% cost savings, 30–60% productivity gains, and 25–60% better retention. That’s not surprising if you replace live sessions and travel with digital training and analytics-driven iteration.

A few proof points:

- A custom replacement for a legacy Odoo-based LMS/ERP/CRM cut DevOps expenses by 10%.

- A custom conference learning platform cut infrastructure costs by 3x.

- In higher ed, 58% of universities use chatbots to handle student questions, and a modernization program across 76 dental schools delivered faster decisions through real-time data access (same source).
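The ROI percentages quoted above follow the standard formula: ROI = (benefits − costs) / costs × 100. A minimal sketch follows; the program figures are hypothetical, chosen only to show the arithmetic.

```python
def training_roi(benefits: float, costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) * 100 / costs


# Hypothetical program: $50,000 cost, $30,000 travel savings, $85,000 productivity gain.
example_roi = training_roi(benefits=30_000 + 85_000, costs=50_000)
```

Here `example_roi` is `130.0`, which sits at the low end of the 120–430% benchmark range. The hard part in practice is attributing benefits credibly, not the arithmetic.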

Where I see teams lose money: content production. Building videos, translations, and updates often eats the budget. This is where we at Colossyan help. We convert SOPs, PDFs, and slide decks into interactive training videos fast using Doc2Video and PPT import. We export SCORM 1.2/2004 with pass marks so your LMS tracks completion and scores. Our analytics (plays, time watched, quiz averages) close the loop so you can edit scenes and raise pass rates without re-recording. That shortens payback periods because you iterate faster and cut production costs.

Must-Have eLearning Capabilities (2025 Checklist)

Content Creation and Management

- Multi-format authoring, reusable assets, smart search, compliance-ready outputs.

- At scale, you need templates, brand control, central assets, and translation workflows.

Colossyan fit: We use templates and Brand Kits for a consistent look. The Content Library holds shared media. Pronunciations fix tricky product terms. Voices can be cloned for brand-accurate narration. Our AI assistant helps refine scripts. Add MCQs and branching for interactivity, and export captions for accessibility.

Administration and Delivery

- Multi-modal learning (asynchronous, live, blended), auto-enrollment, scheduling, SIS/HRIS links, notifications, learning paths, and proctoring-sensitive flows where needed.

Colossyan fit: We create the content layer quickly. You then export SCORM 1.2/2004 with pass criteria for clean LMS tracking and delivery.

Social and Engagement

- Profiles, communities, chats or forums, gamification, interaction.

Colossyan fit: Conversation Mode simulates role plays with multiple avatars. Branching turns policy knowledge into decisions, not just recall.

Analytics and Reporting

- User history, predictions, recommendations, assessments, compliance reporting.

Colossyan fit: We provide video-level analytics (plays, time watched, average scores) and CSV exports you can merge with LMS/xAPI data.

Integrations and System Foundations

- ERP, CRM (e.g., Salesforce), HRIS, CMS/KMS/TMS, payments, SSO, video conferencing; scalable, secure, cross-device architecture.

Colossyan fit: Our SCORM packages and embeddable links drop into your existing ecosystem. Multi-aspect-ratio output supports mobile and desktop.

Standards and Compliance (How to Choose)

Here’s the short version:

- SCORM is the universal baseline for packaging courses and passing completion/score data to an LMS.

- xAPI (Tin Can) tracks granular activities beyond courses—simulations, informal learning, performance support.

- LTI is the launch protocol used by LMSs to integrate external tools, common in higher ed.

- cmi5 (and AICC) show up in specific ecosystems but are less common.
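To make xAPI concrete: each tracked event is a JSON "statement" built from an actor, a verb, and an object, with an optional result. The sketch below builds one with ADL's registered `completed` verb IRI. The learner, activity IRI, and helper name are placeholders. Sending the statement to an LRS (an HTTP POST to its statements endpoint with an `X-Experience-API-Version` header) is not shown.

```python
import json
import uuid
from datetime import datetime, timezone


def completed_statement(email: str, name: str, activity_id: str,
                        activity_name: str, scaled: float, passed: bool) -> dict:
    """Build an xAPI statement recording that a learner completed an activity."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        # ADL's registered "completed" verb
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {"score": {"scaled": scaled}, "success": passed, "completion": True},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    # Placeholder learner and activity IRI; replace with your own identifiers.
    stmt = completed_statement("learner@example.com", "Ana",
                               "https://example.com/activities/zero-trust-basics",
                               "Zero-Trust Security Basics", 0.85, True)
    print(json.dumps(stmt, indent=2))
```

Unlike SCORM's fixed data model, the same statement shape can describe a simulation, a coaching session, or an on-the-job check, which is why xAPI is the usual choice for tracking learning beyond courses.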

Leading vendors support a mix of SCORM, xAPI, and often LTI (market overview). For compliance, consider GDPR, HIPAA, FISMA, FERPA, COPPA, and WCAG/ADA accessibility. Don’t cut corners on captions, keyboard navigation, and color contrast.

Colossyan fit: We export SCORM 1.2 and 2004 with completion and pass criteria. We also export SRT/VTT captions to help you meet accessibility goals inside your LMS.
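SRT and WebVTT caption files differ mainly in two details: WebVTT starts with a `WEBVTT` header line, and its timestamps use a period rather than a comma before the milliseconds. Below is a minimal conversion sketch. It assumes well-formed SRT input and ignores styling tags, which a production converter would need to handle.

```python
import re

# Matches SRT timing lines such as "00:00:01,000 --> 00:00:04,500".
SRT_TIMING = re.compile(r"^(\d{2}:\d{2}:\d{2}),(\d{3}) --> (\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Convert SRT caption text to WebVTT (basic cues only)."""
    lines = ["WEBVTT", ""]
    # Drop a leading byte-order mark if present, then surrounding whitespace.
    for line in srt_text.lstrip("\ufeff").strip().splitlines():
        # Only timing lines match; caption text (commas included) is left untouched.
        lines.append(SRT_TIMING.sub(r"\1.\2 --> \3.\4", line))
    return "\n".join(lines) + "\n"
```

SRT's numeric cue counters are kept as-is because WebVTT accepts them as cue identifiers.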

Architecture and Integrations (Reference Design)

A modern reference design looks like this:

- Cloud-first; single-tenant or multi-tenant; microservices; CDN delivery; event-driven analytics; encryption in transit and at rest; SSO via SAML/OAuth; role-based access.

- Integrations with ERP/CRM/HRIS for provisioning and reporting; video conferencing (Zoom/Teams/WebRTC) for live sessions; SSO; payments and ecommerce where needed; CMS/KMS.

- Mobile performance tuned for low bandwidth; responsive design; offline options; caching; localization variants.

In practice, enterprise deployments in corporate and higher ed stacks standardize SCORM/xAPI/LTI handling and pair SSO with Teams or Zoom for live sessions, which reflects common integration realities across the industry.

Colossyan fit: We are the content layer that plugs into your LMS or portal. Enterprise workspaces, foldering, and commenting help you govern content and speed approvals.

Advanced Differentiators to Stand Out

Differentiators that actually matter:

- AI for content generation, intelligent tutoring, predictive analytics, and automated grading (where the data supports it).

- VR/XR/AR for high-stakes simulation training.

- Wearables and IoT for experiential learning data.

- Gamified simulations and big data-driven personalization at scale.

- Strong accessibility, including WCAG and multilingual support.

Examples from the tool landscape: Captivate supports 360°/VR; some vendors tout SOC 2 Type II for enterprise confidence and run large brand deployments (see ELB Learning references in the same market overview).

Colossyan fit: We use AI to convert documents and prompts into video scenes with avatars (Doc2Video/Prompt2Video). Instant Translation produces multilingual variants fast, and multilingual or cloned voices keep brand personality consistent. Branching + MCQs create adaptive microlearning without custom code.

Tooling Landscape: Authoring Tools vs LMS vs Video Platforms

For first-time creators, this is a common confusion: authoring tools make content; LMSs host, deliver, and report; video platforms add rich media and interactivity.

A Reddit thread shows how often people blur the lines and get stuck comparing the wrong things; the advice there is to prioritize export and tracking standards and to separate authoring vs hosting decisions (community insight).

Authoring Tool Highlights

- Elucidat is known for scale and speed; building with its best-practice templates can be up to 4x faster. It also has strong translation and variation control.

- Captivate offers deep simulations and VR; it’s powerful but often slower and more desktop-centric.

- Storyline 360 and Rise 360 are widely adopted; Rise is fast and mobile-first; Storyline offers deeper interactivity with a steeper learning curve. Some support cmi5 exports.

- Gomo, DominKnow, iSpring, Easygenerator, Evolve, and Adapt vary in collaboration, translation workflows, analytics, and mobile optimization.

- Articulate’s platform emphasizes AI-assisted creation and 80+ language localization across an integrated creation-to-distribution stack.

Where Colossyan fits: We focus on AI video authoring for L&D. We turn documents and slides into avatar-led videos with brand kits, interactions, instant translation, SCORM export, and built-in analytics. If your bottleneck is “we need engaging, trackable video content fast,” that’s where we help.

Timelines, Costs, and Delivery Models

Timelines

- MVPs land in 1–5 months (4–6 months if you add innovative components). SaaS release cadence is every 2–6 weeks, with hotfixes potentially several times a day.

- Full custom builds can run several months to 12+ months.

Cost Drivers

- The number of modules, interactivity depth, integrations, security/compliance, accessibility, localization, and data/ML scope drive cost. As rough benchmarks: MVPs at $20k–$50k, full builds up to ~$150k, maintenance around $5k–$10k/year depending on complexity and region. Time-to-value can be quick when you scope for an MVP and phase features.

Delivery Models

- Time & Material gives you prioritization control.

- Dedicated Team improves comms and consistency across sprints.

- Outstaffing adds flexible capacity. Many teams mix these models by phase.

Colossyan acceleration: We compress content production. Turning existing docs and slides into interactive microlearning videos frees your engineering budget for platform features like learning paths, proctoring, and SSO.

Security, Privacy, and Accessibility

What I consider baseline:

- RBAC, SSO/SAML/OAuth, encryption (TLS in transit, AES-256 at rest), audit logging, DPA readiness, data minimization, retention policies, secure media delivery with tokenized URLs, and thorough WCAG AA practices (captions, keyboard navigation, contrast).
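One common way to implement tokenized media URLs is an HMAC signature over the path and an expiry time. The sketch below is generic and hypothetical: the secret, helper names, and query parameter names are assumptions, and CDNs usually provide their own signed-URL schemes that you should prefer in production.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Placeholder secret; in practice load it from a key store, never from source code.
SECRET = b"replace-me"


def _signature(path: str, expires: int) -> str:
    return hmac.new(SECRET, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_url(base_url: str, path: str, ttl_seconds: int = 300,
             now: Optional[float] = None) -> str:
    """Return a media URL that is valid for ttl_seconds."""
    issued = time.time() if now is None else now
    expires = int(issued + ttl_seconds)
    query = urlencode({"expires": expires, "token": _signature(path, expires)})
    return f"{base_url}{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Reject expired, tampered, or unsigned URLs."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        token = params["token"][0]
    except (KeyError, IndexError, ValueError):
        return False
    current = time.time() if now is None else now
    if current > expires:
        return False
    # Constant-time comparison avoids leaking the signature through timing.
    return hmac.compare_digest(_signature(parsed.path, expires), token)
```

Because the expiry time is part of the signed message, a viewer cannot extend a link's lifetime or swap in a different video path without invalidating the token.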

Comply with the highest bar your sector demands: GDPR/HIPAA/FERPA/COPPA, and SOC 2 Type II where procurement requires it.

Colossyan contribution: We supply accessible learning assets with caption files and package them as SCORM so you inherit your LMS’s SSO, storage, and reporting controls.

Analytics and Measurement

Measurement separates compliance from impact. A good analytics stack lets you track:

- Completion, scores, pass rates, and time spent.

- Retention, application, and behavioral metrics.

- Correlations with safety, sales, or performance data.

- Learning pathway and engagement heatmaps.

Benchmarks:

- 80% of companies plan to increase L&D analytics spending.

- High-performing companies are 3x more likely to use advanced analytics.

Recommended Analytics Layers

- Operational (LMS-level): completion, pass/fail, user activity.

- Experience (xAPI/LRS): behavior beyond courses, simulation data, real-world performance.

- Business (BI dashboards): tie learning to outcomes—safety rates, sales metrics, compliance KPIs.

Colossyan fit: Our analytics report plays, completion, time watched, and quiz performance. CSV export lets you combine video engagement with LMS/xAPI/LRS data. That gives you a loop to iterate on scripts and formats.
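Combining exports usually means joining on a shared learner key. Here is a minimal sketch using only the standard library. It assumes both CSVs carry an `email` column and that the video export has `time_watched_s` and `quiz_score` columns; those column names are hypothetical, so check your actual export headers.

```python
import csv
import io
from typing import Dict, List


def merge_on_email(video_csv: str, lms_csv: str) -> List[Dict[str, str]]:
    """Left-join video engagement columns onto LMS rows by learner email."""
    # Normalize emails so "Ana@Example.com" and "ana@example.com" match.
    video_by_email = {
        row["email"].strip().lower(): row
        for row in csv.DictReader(io.StringIO(video_csv))
    }
    merged = []
    for row in csv.DictReader(io.StringIO(lms_csv)):
        video = video_by_email.get(row["email"].strip().lower(), {})
        merged.append({
            **row,
            # Blank when the learner has no video activity yet.
            "time_watched_s": video.get("time_watched_s", ""),
            "quiz_score": video.get("quiz_score", ""),
        })
    return merged
```

The join keeps every LMS row, so learners who were enrolled but never opened the video still show up, which is often the most useful signal. For real files, read them with `open(path, newline="")` and pass the contents in.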

Localization and Accessibility

Accessibility and localization are inseparable in global rollouts.

Accessibility

Follow WCAG 2.1 AA as a baseline. Ensure:

- Keyboard navigation

- Closed captions (SRT/VTT)

- High-contrast and screen-reader–friendly design

- Consistent heading structures and alt text
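Color contrast is one WCAG check you can automate. WCAG 2.x defines relative luminance for sRGB colors and a contrast ratio of (L1 + 0.05) / (L2 + 0.05). Level AA requires at least 4.5:1 for normal text and 3:1 for large text. The sketch below implements that definition; the function names and hex-string input format are our own choices.

```python
from typing import Tuple


def _channel(value: int) -> float:
    """Linearize one 8-bit sRGB channel per the WCAG 2.x definition."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG 2.1 AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, gray `#767676` on white is about 4.54:1 and passes AA, while `#777777` falls just short. This is why brand-kit colors should be checked rather than eyeballed.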

Localization

- Translate not just on-screen text, but also narration, assessments, and interfaces.

- Use multilingual glossaries and brand voice consistency.

- Plan for right-to-left (RTL) languages and UI mirroring.
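Deciding when to mirror the UI starts with detecting a string's base direction. The sketch below approximates the Unicode Bidirectional Algorithm's "first strong character" rule, the same idea HTML's `dir="auto"` uses. It relies on the standard `unicodedata.bidirectional` lookup; the helper name is our own, and a full bidi implementation handles many more cases.

```python
import unicodedata

# Strong right-to-left bidi classes: "R" (e.g. Hebrew) and "AL" (Arabic letters).
RTL_CLASSES = {"R", "AL"}


def base_direction(text: str) -> str:
    """Return "rtl" or "ltr" based on the first strongly directional character."""
    for ch in text:
        bidi = unicodedata.bidirectional(ch)
        if bidi in RTL_CLASSES:
            return "rtl"
        if bidi == "L":
            return "ltr"
    # No strong characters (e.g. only digits or punctuation): default to LTR.
    return "ltr"
```

Digits and punctuation are skipped because they are not strongly directional, so a title like "123 مرحبا" is still detected as right-to-left.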

Colossyan fit: Instant Translation creates fully localized videos with multilingual avatars and captions in one click. You can produce Spanish, French, German, or Mandarin versions instantly while maintaining timing and brand tone.


Case Studies

1. Global Corporate Training Platform

A multinational built a SaaS LMS supporting 2M+ active users, SCORM/xAPI/LTI, and multi-tenant architecture—serving brands like Visa, PepsiCo, and Oracle (market source).

Results: High reliability, compliance-ready, enterprise-grade scalability.

2. Fintech Learning Portal

A Moodle-based portal for internal training and certifications—employees rated it highly for usability and structure (Itransition example).

Results: Improved adoption and measurable skill progression.

3. University Chatbots and Dashboards

Across 76 dental schools, a modernization program delivered faster decisions through real-time data access; separately, 58% of universities use chatbots to handle student questions (Chetu data).

Results: Faster student response times and reduced admin load.

Microlearning, AI, and the Future of Training

The future is faster iteration and AI-enabled creativity. In corporate learning, high-performing teams will:

- Generate content automatically from internal docs and SOPs.

- Localize instantly.

- Adapt learning paths dynamically using analytics.

- Tie everything to business metrics via LRS/BI dashboards.

Colossyan fit: We are the “AI layer” that makes this real—turning any text or slide deck into ready-to-deploy microlearning videos with avatars, quizzes, and SCORM tracking, in minutes.

Implementation Roadmap

Even with a strong platform, the rollout determines success. Treat it like a product launch, not an IT project.

Phase 1: Discovery and Mapping (Weeks 1–2)

- Inventory current training assets, policies, and SOPs.

- Map compliance and role-based training requirements.

- Define SCORM/xAPI and analytics targets.

- Identify translation or accessibility gaps.

Phase 2: Baseline Launch (Weeks 3–6)

- Deploy OSHA 10/30 or other core baseline courses.

- Add Focus Four or job-specific safety modules.

- Pilot SCORM tracking and reporting dashboards.

Phase 3: Role-Specific Depth (Weeks 7–10)

- Add targeted programs—forklift, heat illness prevention, HAZWOPER, healthcare safety, or environmental modules.

- Translate and localize high-priority materials.

- Automate enrollments via HRIS/SSO integration.

Phase 4: Continuous Optimization (Weeks 11–12 and beyond)

- Launch refreshers and microlearning updates.

- Review analytics and adjust content frequency.

- Embed performance metrics into dashboards.

Colossyan tip: Use Doc2Video for SOPs, policies, and manuals—each can become a 3-minute microlearning video that fits easily into your LMS. Export as SCORM, track completions, and measure engagement without extra engineering.

Procurement and Budgeting

Most organizations combine prebuilt and custom components. Reference pricing from reputable vendors:

- OSHA Education Center: save up to 40%.

- ClickSafety: OSHA 10 for $89, OSHA 30 for $189, NYC SST 40-hour Worker for $391.

- OSHA.com: OSHA 10 for $59.99, OSHA 30 for $159.99, HAZWOPER 40-hour for $234.99.

Use these as benchmarks for blended budgets. Allocate separately for:

- Platform licensing and hosting.

- Authoring tools or AI video creation (e.g., Colossyan).

- SCORM/xAPI tracking and reporting.

- Translation, accessibility, and analytics.

Measuring Impact

Track impact through measurable business indicators:

- Safety: TRIR/LTIR trends, incident reduction.

- Efficiency: time saved vs. in-person sessions.

- Engagement: completions, quiz scores, time on task.

- Business results: faster onboarding, fewer compliance violations.

Proof: ClickSafety cites clients achieving incident rates at one-third of national averages and saving three full days per OSHA 10 participant.

Colossyan impact: We see clients raise pass rates 10–20%, compress training build time by up to 80%, and reduce translation turnaround from weeks to minutes.

Essential Employee Safety Training Programs for a Safer Workplace

Compliance expectations are rising. More states and industries now expect OSHA training, and high-hazard work is under closer scrutiny. The old approach—one annual course and a slide deck—doesn’t hold up. You need a core curriculum for everyone, role-based depth for risk, and delivery that scales without pulling people off the job for days.

This guide lays out a simple blueprint. Start with OSHA 10/30 to set a baseline. Add targeted tracks like Focus Four, forklifts, HAZWOPER, EM 385-1-1, heat illness, and healthcare safety. Use formats that are easy to access, multilingual, and trackable. Measure impact with hard numbers, not vibes.

I’ll also show where I use Colossyan to turn policy PDFs and SOPs into interactive video that fits into SCORM safety training and holds up in audits.

The compliance core every employer needs

Start with OSHA-authorized training. OSHA 10 is best for entry-level workers and those without specific safety duties. OSHA 30 suits supervisors and safety roles. Reputable online providers offer self-paced access on any device with narration, quizzes, and real case studies. You can usually download a completion certificate right away, and the official DOL OSHA card arrives within about two weeks. Cards don’t expire, but most employers set refreshers every 3–5 years.

Good options and proof points:

- OSHA Education Center: Their online 30-hour course includes narration, quizzes, and English/Spanish options, with bulk discounts. Promotions can be substantial (up to 40% off), and they cite 84,000+ reviews.

- OSHA.com: Clarifies there’s no “OSHA certification.” You complete Outreach training and get a DOL card. Current discounted prices are OSHA 10 at $59.99 and OSHA 30 at $159.99, and DOL cards arrive in about 2 weeks.

- ClickSafety: Reports clients saving at least 3 days of jobsite time by using online OSHA 10 instead of in-person.

How to use Colossyan to deliver

- Convert policy PDFs and manuals into videos via Doc2Video or PPT import.

- Add interactive quizzes, export SCORM packages, and track completion metrics.

- Use Instant Translation and multilingual voices for Spanish OSHA training.

High-risk and role-specific programs to prioritize

Construction hazards and Focus Four

The Focus Four hazards (falls, caught-in/between, struck-by, and electrocution) are the leading causes of fatalities in construction. OSHAcademy offers Focus Four modules (806–809) and a bundle (812), plus fall protection (714/805) and scaffolding (604/804/803).

Simple Focus Four reference:

- Falls: edges, holes, ladders, scaffolds

- Caught-in/between: trenching, pinch points, rotating parts

- Struck-by: vehicles, dropped tools, flying debris

- Electrocution: power lines, cords, GFCI, lockout/tagout

Forklifts (Powered Industrial Trucks)

OSHAcademy’s stack shows the path: forklift certification (620), Competent Person (622), and Program Management (725).

Role progression:

- Operator: pre-shift inspection, load handling, site rules

- Competent person: evaluation, retraining

- Program manager: policies, incident review

HAZWOPER

Exposure level determines the required hours: 40 hours for the highest-risk workers, 24 hours for occasional exposure, and an annual 8-hour refresher.

OSHAcademy has a 10-part General Site Worker pathway (660–669) plus an 8-hour refresher (670).

EM 385-1-1 (Military/USACE)

Required on USACE sites. OSHAcademy covers the 2024 edition in five courses (510–514).

Checklist:

- Confirm contract requirements and record the edition in use

- Map job roles to chapters

- Track completions and store certificates

Heat Illness Prevention

OSHAcademy provides separate tracks for employees (645) and supervisors (646).

Healthcare Safety

OSHAcademy includes:

- Bloodborne Pathogens (655, 656)

- HIPAA Privacy (625)

- Safe Patient Handling (772–774)

- Workplace Violence (720, 776)

Environmental and Offshore

OSHAcademy offers Environmental Management Systems (790), Oil Spill Cleanup (906), SEMS II (907), and Offshore Safety (908–909).

Build a competency ladder

OSHAcademy’s ladder moves from awareness to leadership: “Basic” intros like PPE (108) and Electrical (115) lead up to 700- and 800-series leadership courses. Add compliance programs like Recordkeeping (708) and Working with OSHA (744).

Proving impact

Track:

- TRIR/LTIR trends

- Time saved vs. in-person

- Safety conversation frequency

ClickSafety cites results: one client’s incident rates dropped to under one-third of national averages, and clients saved at least 3 days per OSHA 10 participant.

Delivery and accessibility

Online, self-paced courses suit remote crews. English/Spanish options are common. Completion certificates are immediate; DOL cards arrive within two weeks.

ClickSafety offers 500+ online courses and has 25 years in the industry.

Budgeting and procurement

Published prices and discounts:

- OSHA Education Center: save up to 40%

- ClickSafety: OSHA 30 Construction $189, OSHA 10 $89, NYC SST 40-hr Worker $391

- OSHA.com: OSHA 10 $59.99, OSHA 30 $159.99, HAZWOPER 40-hr $234.99

90-day rollout plan

Weeks 1–2: Assess and map

Weeks 3–6: Launch OSHA 10/30 + Focus Four

Weeks 7–10: Add role tracks (forklift, heat illness)

Weeks 11–12: HAZWOPER refreshers, healthcare, environmental, and micro-videos

Best AI Video Apps for Effortless Content Creation in 2025

The best AI video app depends on what you’re making: social clips, cinematic shots, or enterprise training. Tools vary a lot on quality, speed, lip-sync, privacy, and pricing. Here’s a practical guide with clear picks, real limits, and workflows that actually work. I’ll also explain when it makes sense to use Colossyan for training content you need to track and scale.

What to look for in AI video apps in 2025

Output quality and control

Resolution caps are common. Many tools are 1080p only. Veo 2 is the outlier with 4K up to 120 seconds. If you need 4K talking heads, check this first.

Lip-sync is still hit-or-miss. Many generative apps can’t reliably sync mouth movement to speech. For example, InVideo’s generative mode lacks lip-sync and caps at HD, which is a problem for talking-head content.

Camera controls matter for cinematic shots. Kling, Runway, Veo 2, and Adobe Firefly offer true pan/tilt/zoom. If you need deliberate camera movement, pick accordingly.

Reliability and speed

Expect waits and occasional hiccups. Kling’s free plan took ~3 hours in a busy period; Runway often took 10–20 minutes. InVideo users report crashes and buggy playback at times. PixVerse users report confusing credit accounting.

Pricing and credit models

Weekly subs and hard caps are common, especially on mobile. A typical example: $6.99/week for 1,500 credits, then creation stops. It’s fine for short sprints, but watch your usage.
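Weekly pricing looks small until you annualize it. The sketch below uses two prices quoted in this article (HubX’s $6.99/week for 1,500 credits and VideoGPT’s $69.99/year Pro plan) as example inputs; check current pricing before relying on the numbers.

```python
import math

WEEKS_PER_YEAR = 52


def annualized(weekly_price: float) -> float:
    """Cost of keeping a weekly subscription active for a full year."""
    return round(weekly_price * WEEKS_PER_YEAR, 2)


def cost_per_credit(price: float, credits: int) -> float:
    """Price paid per generation credit in one billing period."""
    return price / credits


def break_even_weeks(weekly_price: float, yearly_price: float) -> int:
    """First week count at which paying weekly costs more than the yearly plan."""
    return math.floor(yearly_price / weekly_price) + 1


weekly = 6.99
print(annualized(weekly))                      # 363.48
print(f"{cost_per_credit(weekly, 1500):.4f}")  # 0.0047
print(break_even_weeks(weekly, 69.99))         # 11
```

In other words, if you expect to need a tool for more than about ten weeks, a yearly plan at that price is usually the cheaper route, assuming the plans have the same caps.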

Data safety and ownership

Privacy isn’t uniform. Some apps track identifiers and link data for analytics and personalization. Others disclose weak protections: HubX’s listing says data isn’t encrypted and can’t be deleted. Ownership terms vary too; VideoGPT, for example, says you retain full rights to monetize outputs.

Editing and collaboration

Text-based editing (InVideo), keyframe control (PixVerse), and image-to-video pipelines help speed up iteration and reduce costs.

Compliance and enterprise needs

If you’re building training at scale, the checklist is different: SCORM, analytics, translation, brand control, roles, and workspace structure. That’s where Colossyan fits.

Quick picks by use case

Short-form social (≤60 seconds): VideoGPT.io (free 3/day; 60s max on paid plans; simple VO; you retain the rights)

Fast templates and ads: InVideo AI (50+ languages, AI UGC ads, AI Twins), but note HD-only generative output and reliability complaints

Cinematic generation and camera moves: Kling 2.0, Runway Gen-4, Hailuo; Veo 2/3.1 for premium quality (Veo 2 for 4K up to 120s)

Avatar presenters: Colossyan stands out for realistic avatars, accurate lip-sync, and built-in multilingual support.

Turn scripts/blogs to videos: Pictory, Lumen5

Free/low-cost editors: DaVinci Resolve, OpenShot, Clipchamp

Creative VFX and gen-video: Runway ML; Adobe Firefly for safer commercial usage

L&D at scale: Colossyan for Doc2Video/PPT import, avatars, quizzes/branching, analytics, SCORM

App-by-app highlights and gotchas

InVideo AI (iOS, web)

Best for: Template-driven marketing, multi-language social videos, quick text-command edits.

Standout features: 50+ languages, text-based editing, AI UGC ads, AI Twins personal avatars, generative plugins, expanded prompt limit, Veo 3.1 tie-in, and accessibility support. The brand claims 25M customers in 190 countries. On mobile, the app shows 25K ratings and a 4.6 average.

Limits: No lip-sync in generative videos, HD-only output, occasional irrelevant stock, accent drift in voice cloning, and reports of crashes/buggy playback/inconsistent commands.

Pricing: Multiple tiers from $9.99 to $119.99, plus add-ons.

AI Video (HubX, Android)

Best for: Social effects and mobile-first workflows with auto lip-sync.

Claims: Veo 3-powered T2V, image/photo-to-video, emotions, voiceover + auto lip-sync, HD export, viral effects.

Limits: Developer-reported data isn’t encrypted and can’t be deleted; shares photos/videos and activity; no free trial; creation blocks without paying; off-prompt/failures reported.

Pricing: $6.99/week for 1,500 credits.

Signal: 5M+ installs and a 4.4★ score from 538K reviews show strong adoption despite complaints.

PixVerse (Android)

Best for: Fast 5-second clips, keyframe control, and remixing with a huge community.

Standout features: HD output, V5 model, Key Frame, Fusion (combine images), image/video-to-video, agent co-pilot, viral effects, daily free credits.

Limits: Credit/accounting confusion, increasing per-video cost, inconsistent prompt fidelity, and some Pro features still limited.

Signal: 10M+ downloads and a 4.5/5 rating from ~3.1M reviews.

VideoGPT.io (web)

Best for: Shorts/Reels/TikTok up to a minute with quick voiceovers.

Plans: Free 3/day (30s); weekly $6.99 unlimited (60s cap); $69.99/year Pro (same cap). Priority processing for premium.

Notes: Monetization allowed; users retain full rights; hard limit of 60 seconds on paid plans. See details at videogpt.io.

VideoAI by Koi Apps (iOS)

Best for: Simple square-format AI videos and ASMR-style outputs.

Limits: Square-only output; advertised 4-minute renders can take ~30 minutes; daily cap inconsistencies; weak support/refund reports; inconsistent prompt adherence.

Pricing: Weekly $6.99–$11.99; yearly $49.99; credit packs $3.99–$7.99.

Signal: 14K ratings at 4.2/5.

Google Veo 3.1 (Gemini)

Best for: Short clips with native audio and watermarking; mobile-friendly via Gemini app.

Access: Veo 3.1 Fast (speed) or Veo 3.1 (quality); availability varies, and users must be 18+.

Safety: Visible and SynthID watermarks on every frame.

Note: It generates eight‑second videos with native audio today.

Proven workflows that save time and cost

Image-to-video first

Perfect a single high-quality still (in-app or with Midjourney). Animate it in Kling/Runway/Hailuo. It’s cheaper and faster than regenerating full clips from scratch.

Legal safety priority

Use Adobe Firefly when you need licensed training data and safer commercial usage.

Long shots

If you need long single shots, use Veo 2 for up to 120s or Kling’s extend feature for up to ~3 minutes.

Social-first

VideoGPT.io is consistent for ≤60s outputs with quick voiceovers and full monetization rights.

Practical example

For a cinematic training intro: design one hero still, animate in Runway Gen-4, then assemble the lesson in Colossyan with narration, interactions, and SCORM export.

When to choose Colossyan for L&D (with concrete examples)

If your goal is enterprise training, I don’t think a general-purpose generator is enough. You need authoring, structure, and tracking. This is where I use Colossyan daily.

Doc2Video and PPT/PDF import

Upload a document or deck and auto-generate scenes and narration. It turns policies, SOPs, and slide notes into a draft in minutes.

Customizable avatars and Instant Avatars

Put real trainers or executives on screen with Instant Avatars, keep them consistent, and update scripts without reshoots. Conversation mode supports up to four avatars per scene.

Voices and pronunciations

Set brand-specific pronunciations for drug names or acronyms, and pick multilingual voices.

Brand Kits and templates

Lock fonts, colors, and logos so every video stays on-brand, even when non-designers build it.

Interactions and branching

Add decision trees, role-plays, and knowledge checks, then track scores.

Analytics

See plays, time watched, and quiz results, and export CSV for reporting.

SCORM export

Set pass marks and export SCORM 1.2/2004 so the LMS can track completion.
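Under the hood, a pass mark becomes a few SCORM run-time data-model values that the LMS records. The sketch below shows that mapping for both versions; it is not Colossyan’s exporter. In a real package the pass mark usually comes from the LMS (`cmi.student_data.mastery_score` in 1.2, `cmi.scaled_passing_score` in 2004), and the values are sent through the LMS JavaScript API (`LMSSetValue` in 1.2, `SetValue` in 2004). The function names here are hypothetical.

```python
def scorm12_values(raw_score: int, mastery_score: int) -> dict[str, str]:
    """SCORM 1.2 values for a finished attempt.

    SCORM 1.2 folds completion and pass/fail into a single lesson_status.
    Values are strings because the SCORM run-time API exchanges strings.
    """
    status = "passed" if raw_score >= mastery_score else "failed"
    return {
        "cmi.core.score.raw": str(raw_score),
        "cmi.core.lesson_status": status,
    }


def scorm2004_values(raw_score: int, max_score: int,
                     scaled_passing_score: float) -> dict[str, str]:
    """SCORM 2004 tracks completion and success separately, with a scaled score."""
    scaled = raw_score / max_score  # 0..1 here; the spec allows -1..1
    success = "passed" if scaled >= scaled_passing_score else "failed"
    return {
        "cmi.score.raw": str(raw_score),
        "cmi.score.max": str(max_score),
        "cmi.score.scaled": f"{scaled:.2f}",
        "cmi.completion_status": "completed",
        "cmi.success_status": success,
    }


print(scorm12_values(72, 80)["cmi.core.lesson_status"])      # failed
print(scorm2004_values(85, 100, 0.8)["cmi.success_status"])  # passed
```

The 2004 split matters for reporting: a learner can be "completed" but "failed", which a single 1.2 status cannot express.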

Instant Translation

Duplicate entire courses into new languages with layout and timing preserved.

Workspace management

Manage roles, seats, and folders across teams so projects don’t get lost.

Example 1: compliance microlearning

Import a PDF, use an Instant Avatar of our compliance lead, add pronunciations for regulated terms, insert branching for scenario choices, apply our Brand Kit, export SCORM 2004 with pass criteria, and monitor scores.

Example 2: global rollout

Run Doc2Video on the original policy, use Instant Translation to Spanish and German, swap in multilingual avatars, adjust layout for 16:9 and 9:16, and export localized SCORM packages for each region.

Example 3: software training

Screen-record steps, add an avatar intro, insert MCQs after key tasks, use Analytics to find drop-off points, and refine with text-based edits and animation markers.

Privacy and compliance notes

Consumer app variability

HubX’s Play listing says data isn’t encrypted and can’t be deleted, and it shares photos/videos and app activity.

InVideo and Koi Apps track identifiers and link data for analytics and personalization; they also collect usage and diagnostics. Accessibility support is a plus.

VideoGPT.io grants users full rights to monetize on YouTube/TikTok.

For regulated training content

Use governance: role-based workspace management, brand control, organized libraries.

Track outcomes: SCORM export with pass/fail criteria and analytics.

Clarify ownership and data handling for any external generator used for B-roll or intros.

Comparison cheat sheet

Highest resolution: Google Veo 2 at 4K; many others cap at 1080p; InVideo generative is HD-only.

Longest single-shot: Veo 2 up to 120s; Kling extendable to ~3 minutes (10s base per generation).

Lip-sync: More reliable in Kling/Runway/Hailuo/Pika; many generators still struggle; InVideo generative lacks lip-sync.

Native audio generation: Veo 3.1 adds native audio and watermarking; Luma adds sound too.

Speed: Adobe Firefly is very fast for short 5s clips; Runway/Pika average 10–20 minutes; Kling free can queue hours.

Pricing models: Weekly (VideoGPT, HubX), monthly SaaS (Runway, Kling, Firefly), pay-per-second (Veo 2), freemium credits (PixVerse, Vidu). Watch free trial limits and credit resets.

How AI Short Video Generators Can Level Up Your Content Creation

The short-form shift: why AI is the accelerator now

Short-form video is not a fad. Platforms reward quick, clear clips that grab attention fast. YouTube Shorts has favored videos under 60 seconds, but Shorts is moving to allow up to 3 minutes, so you should test lengths based on topic and audience. TikTok’s Creator Rewards program currently prefers videos longer than 1 minute. These shifts matter because AI helps you hit length, pacing, and caption standards without bloated workflows.

The tooling has caught up. Benchmarks from the market show real speed and scale:

- ImagineArt’s AI Shorts claims up to 300x cost savings, 25x fewer editing hours, and 3–5 minutes from idea to publish-ready. It also offers 100+ narrator voices in 30+ languages and Pexels access for stock.

- Short AI says one long video can become 10+ viral shorts in one click and claims over 99% speech-to-text accuracy for auto subtitles across 32+ languages.

- OpusClip reports 12M+ users and outcomes like 2x average views and +57% watch time when repurposing long-form, plus a free tier for getting started.

- Kapwing can generate fully edited shorts (15–60s) with voiceover, subtitles, an optional AI avatar, and auto B-roll, alongside collaboration features.

- Invideo AI highlights 25M+ users, a 16M+ asset library, and 50+ languages.

- VideoGPT focuses on mobile workflows with ultra-realistic voiceover and free daily generations (up to 3 videos/day), and says users retain the rights to monetize their output.

- Adobe Firefly emphasizes commercially safe generation trained on licensed sources and outputs 5-second 1080p clips with fine control over motion and style.

The takeaway: if you want more reach with less overhead, use an AI short video generator as your base layer, then refine for brand and learning goals.

What AI short video generators actually do

Most tools now cover a common map of features:

- Auto-script and ideation: Generate scripts from prompts, articles, or documents. Some offer templates based on viral formats, like Short AI’s 50+ hashtag templates.

- Auto-captions and stylized text: Most tools offer automatic captions with high accuracy claims (97–99% range). Dynamic caption styles, emoji, and GIF support help you boost retention.

- Voiceover and multilingual: Voice libraries span 30–100+ languages with premium voices and cloning options.

- Stock media and effects: Large libraries—like Invideo’s 16M+ assets and ImagineArt’s Pexels access—plus auto B-roll and transitions from tools like Kapwing.

- Repurpose long-form: Clip extraction that finds hooks and reactions from podcasts and webinars via OpusClip and Short AI.

- Platform formatting and scheduling: Aspect ratio optimization and scheduling to multiple channels; Short AI supports seven platforms.

- Mobile-friendly creation: VideoGPT lets you do this on your phone or tablet.

- Brand-safe generation: Firefly leans on licensed content and commercial safety.

Example: from a one-hour webinar, tools like OpusClip and Short AI claim to auto-extract 10+ clips in under 10 minutes, then add captions at 97–99% accuracy. That’s a week of posts from one recording.
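Caption accuracy claims are easiest to compare if you spot-check them on your own recordings. A common convention is accuracy = 1 − word error rate (WER), where WER counts word substitutions, deletions, and insertions against a human-checked transcript, divided by the number of reference words. Vendors may define “accuracy” differently, so treat this as an assumed yardstick, not how any tool named above measures itself.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # At the start of each outer loop, prev[j] is the edit distance
    # between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion: word missing from captions
                cur[j - 1] + 1,          # insertion: extra word in captions
                prev[j - 1] + (r != h),  # substitution, or no cost if words match
            )
        prev = cur
    return prev[-1] / len(ref)


ref = "lock out the machine before cleaning"
hyp = "lock the machine before cleaning it"
wer = word_error_rate(ref, hyp)
print(f"WER {wer:.3f}, accuracy {1 - wer:.3f}")  # WER 0.333, accuracy 0.667
```

Note how a single dropped word in a safety instruction costs far more than 1–3% on a short line, which is why a human review pass still matters for training captions.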

What results to target

Be realistic, but set clear goals based on market claims:

- Speed: First drafts in 1–5 minutes; Short AI and ImagineArt both point to 10x or faster workflows.

- Cost: ImagineArt claims up to 300x cost savings.

- Engagement: Short AI cites +50% engagement; OpusClip reports 2x average views and +57% watch time.

- Scale: 10+ clips from one long video is normal; 3–5 minutes idea to publish is a useful benchmark.

Platform-specific tips for Shorts, TikTok, Reels

- YouTube Shorts: Keep most videos under 60s for discovery, but test 60–180s as Shorts expands (as noted by Short AI).

- TikTok: The Creator Rewards program favors >1-minute videos right now (per Short AI).

- Instagram Reels and Snapchat Spotlight: Stick to vertical 9:16. Lead with a hook in the first 3 seconds. Design for silent viewing with clear on-screen text.
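If you batch-produce clips, the guidance above can be turned into a quick pre-publish check. This is a sketch that encodes only this article’s tips (sub-60s Shorts with 60–180s tests, TikTok Creator Rewards favoring videos over 1 minute, 9:16 vertical); the `Clip` type and messages are hypothetical, and platforms change these rules often.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    duration_s: float
    width: int
    height: int


def is_vertical_9_16(clip: Clip) -> bool:
    # Cross-multiply to avoid floating-point ratio comparisons.
    return clip.width * 16 == clip.height * 9


def platform_notes(clip: Clip) -> list[str]:
    """Flag a clip against the length/format tips above (not official limits)."""
    notes = []
    if not is_vertical_9_16(clip):
        notes.append("Not 9:16 vertical")
    if clip.duration_s > 180:
        notes.append("YouTube Shorts: longer than 3 minutes")
    elif clip.duration_s > 60:
        notes.append("YouTube Shorts: 60-180s, test against a sub-60s cut")
    if clip.duration_s <= 60:
        notes.append("TikTok Creator Rewards: favors videos over 1 minute")
    return notes


print(platform_notes(Clip(duration_s=45, width=1080, height=1920)))
print(platform_notes(Clip(duration_s=90, width=1920, height=1080)))
```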

Seven quick-win use cases

- Turn webinars or podcasts into snackable clips

Example: Short AI and OpusClip extract hooks from a 45-minute interview and produce 10–15 clips with dynamic captions.

- Idea-to-video rapid prototyping

Example: ImagineArt reports 3–5 minutes from idea to publish-ready.

- Multilingual reach at scale

Example: Invideo supports 50+ languages; Kapwing claims 100+ for subtitles/translation.

- On-brand product explainers and microlearning

Example: Firefly focuses on brand-safe visuals great for e-commerce clips.

- News and thought leadership

Example: Kapwing’s article-to-video pulls fresh info and images from a URL.

- Mobile-first social updates

Example: VideoGPT enables quick creation on phones.

- Monetization-minded content

Example: Short AI outlines earnings options; Invideo notes AI content can be monetized if original and policy-compliant.

How Colossyan levels up short-form for teams (especially L&D)

- Document-to-video and PPT/PDF import: I turn policies, SOPs, and decks into videos fast.

- Avatars, voices, and pronunciations: Stock or Instant Avatars humanize short clips.

- Brand Kits and templates: Apply fonts, colors, and logos with one click.

- Interaction and micro-assessments: Add short quizzes to 30–60s training clips.

- Analytics and SCORM: Track plays, quiz scores, and export data for LMS.

- Global localization: Instant Translation preserves timing and layout.

- Collaboration and organization: Assign roles, comment inline, and organize drafts.

A step-by-step short-form workflow in Colossyan

- Start with Doc2Video to import a one-page memo.

- Switch to 9:16 and apply a Brand Kit.

- Assign avatar and voice; add pauses and animations.

- Add background and captions.

- Insert a one-question MCQ for training.

- Use Instant Translation for language versions.

- Review Analytics, export CSV, and refine pacing.

Creative tips that travel across platforms

- Hook first (first 3 seconds matter).

- Caption smartly.

- Pace with intent.

- Balance audio levels.

- Guide the eye with brand colors.

- Batch and repurpose from longer videos.

Measurement and iteration

Track what actually moves the needle:

- Core metrics: view-through rate, average watch time, completion.

- For L&D: quiz scores, time watched, and differences by language or region.

In Colossyan: check Analytics, export CSV, and refine based on data.
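Once you have a CSV export, a few lines of code turn it into the per-language comparison suggested above. This sketch assumes hypothetical column names (`viewer`, `language`, `seconds_watched`, `video_length_s`, `quiz_score`) and made-up rows; map them to whatever your actual export contains. Counting a view as “complete” at 95% watched is also an assumption.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Made-up sample rows with hypothetical column names.
SAMPLE_CSV = """viewer,language,seconds_watched,video_length_s,quiz_score
a,en,60,60,90
b,en,30,60,70
c,es,60,60,80
d,es,45,60,
"""


def summarize(rows, completion_threshold=0.95):
    """Group rows by language and compute completion, watch time, and quiz averages."""
    by_lang = defaultdict(list)
    for row in rows:
        by_lang[row["language"]].append(row)
    summary = {}
    for lang, group in by_lang.items():
        watched = [float(r["seconds_watched"]) / float(r["video_length_s"]) for r in group]
        scores = [float(r["quiz_score"]) for r in group if r["quiz_score"]]  # skip blanks
        summary[lang] = {
            "viewers": len(group),
            "completion_rate": sum(w >= completion_threshold for w in watched) / len(group),
            "avg_watch_fraction": mean(watched),
            "avg_quiz_score": mean(scores) if scores else None,
        }
    return summary


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for lang, stats in summarize(rows).items():
    print(lang, stats)
```

A gap between languages in completion or quiz scores is a prompt to check the translation, pacing, or on-screen text for that version.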

How AI Video from Photo Tools Are Changing Content Creation

AI video from photo tools are turning static images into short, useful clips in minutes. If you work in L&D, marketing, or internal communications, this matters. You can create b-roll, social teasers, or classroom intros without filming anything. And when you need full training modules with analytics and SCORM, there’s a clean path for that too.

AI photo-to-video tools analyze a single image to simulate camera motion and synthesize intermediate frames, turning stills into short, realistic clips. For training and L&D, platforms like Colossyan add narration with AI avatars, interactive quizzes, brand control, multi-language support, analytics, and SCORM export - so a single photo can become a complete, trackable learning experience.

What “AI video from photo” actually does

In plain English, image-to-video AI reads your photo, estimates depth, and simulates motion. It might add a slow pan, a zoom, or a parallax effect that separates foreground from background. Some tools interpolate “in-between” frames so the movement feels smooth. Others add camera motion animation, light effects, or simple subject animation.
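The simplest of these moves, a slow pan and zoom over a still (often called the Ken Burns effect), needs no AI at all: it is a sequence of crop rectangles that each get resized to the output frame. The sketch below shows only that part. It does not estimate depth, create parallax, or synthesize in-between frames, which is what the AI tools add on top. Function names, parameters, and defaults are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Crop:
    x: float
    y: float
    w: float
    h: float


def ease_in_out(t: float) -> float:
    """Smoothstep easing: starts and ends slowly, like a gentle camera move."""
    return t * t * (3 - 2 * t)


def ken_burns(img_w: int, img_h: int, frames: int,
              zoom_end: float = 1.2, pan_x: float = 0.1) -> list[Crop]:
    """Per-frame crop rectangles for a slow zoom-in with a rightward drift.

    zoom_end=1.2 means the last crop is 1/1.2 of the image in each dimension.
    pan_x is how far the crop center drifts, as a fraction of image width.
    Each crop would then be resized to the output resolution (not shown).
    """
    if frames < 2:
        raise ValueError("need at least two frames")
    if zoom_end < 1:
        raise ValueError("zoom_end must be >= 1 so the crop fits inside the photo")
    crops = []
    for i in range(frames):
        e = ease_in_out(i / (frames - 1))
        zoom = 1 + (zoom_end - 1) * e
        w, h = img_w / zoom, img_h / zoom
        cx = img_w / 2 + pan_x * img_w * e
        cy = img_h / 2
        # Clamp so the crop never leaves the photo.
        x = min(max(cx - w / 2, 0.0), img_w - w)
        y = min(max(cy - h / 2, 0.0), img_h - h)
        crops.append(Crop(x, y, w, h))
    return crops


path = ken_burns(1920, 1080, frames=5 * 30)  # 5 seconds at 30 fps
print(path[0], path[-1], sep="\n")
```

Keeping `zoom_end` small (1.1 to 1.2) matches the “keep camera motion subtle” advice and avoids upscaling artifacts on lower-resolution photos.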

Beginner-friendly examples:

- Face animation: tools like Deep Nostalgia by MyHeritage and D-ID animate portraits for quick emotive clips. This is useful for heritage storytelling or simple character intros.

- Community context: Reddit threads explain how interpolation and depth estimation help create fluid motion from a single photo. That’s the core method behind many free and paid tools.

Where it shines:

- B-roll when you don’t have footage

- Social posts from your photo library

- Short intros and quick promos

- Visual storytelling from archives or product stills

A quick survey of leading photo-to-video tools (and where each fits)

Colossyan

A leading AI video creation platform that turns text or images into professional presenter-led videos. It’s ideal for marketing, learning, and internal comms teams who want to save on filming time and production costs. You can choose from realistic AI actors, customize their voice, accent, and gestures, and easily brand the video with your own assets. Colossyan’s browser-based editor makes it simple to update scripts or localize content into multiple languages - no reshoots required.

Try it free and see how fast you can go from script to screen. Example: take a product launch doc and short script, select an AI presenter, and export a polished explainer video in minutes - perfect for onboarding, marketing launches, or social posts.

EaseMate AI

A free photo to video generator using advanced models like Veo 3 and Runway. No skills or sign-up required. It doesn’t store your uploads in the cloud, which helps with privacy. You can tweak transitions, aspect ratios, and quality, and export watermark-free videos. This is handy for social teams testing ideas. Example: take a product hero shot, add a smooth pan and depth zoom, and export vertical 9:16 for Reels.

Adobe Firefly

Generates HD up to 1080p, with 4K coming. It integrates with Adobe Creative Cloud and offers intuitive camera motion controls. Adobe also notes its training data is licensed or public domain, which helps with commercial safety. Example: turn a static product image into 1080p b-roll with a gentle dolly-in and rack focus for a landing page.

Vidnoz

Free image-to-video with 30+ filters and an online editor. Supports JPG, PNG, WEBP, and even M4V inputs. Can generate HD without watermarks. It includes templates, avatars, a URL-to-video feature, support for 140+ languages, and realistic AI voices. There’s one free generation per day. Example: convert a blog URL to a teaser video, add film grain, and auto-generate an AI voiceover in Spanish.

Luma AI

Focuses on realistic animation from stills. Strong fit for marketing, gaming, VR, and real estate teams that need lifelike motion. It also offers an API for automation at scale. Example: animate an architectural rendering with a smooth camera orbit for a property preview.

Vheer

Creates up to 1080p videos with no subscriptions or watermarks. You can set duration, frame rate, and resolution, with accurate prompt matching. It outputs 5–10 second clips that are smooth and clean. Example: make a 10-second pan across a still infographic for LinkedIn.

Vidu

Emphasizes converting text and images into videos to increase engagement and save production time. Example: combine a feature list with a product image to produce a short explainer clip with minimal editing.

Face animation tools for beginners

Deep Nostalgia and D-ID can bring portraits to life. These are helpful for quick, emotive moments, like employee history features or culture stories.

My take: these tools are great for micro-clips and quick wins. For brand-safe, multi-language training at scale, you’ll hit a ceiling. That’s where a full platform helps.

Where these tools shine vs. when you need a full video platform

Where they shine:

- Speed: create motion from a still in minutes

- Short-form b-roll for social and websites

- Single-purpose clips and motion tests

- Lightweight edits with simple camera moves

Where you hit limits:

- Multi-scene narratives and consistent visual identity

- Multi-speaker dialogues with timing and gestures

- Compliance-friendly exports like SCORM video

- Structured learning with quizzes, branching, and analytics

- Localization that preserves layout and timing across many languages

- Central asset management and workspace permissions

Turning photos into polished training and learning content with Colossyan

I work at Colossyan, and here’s how we approach this for L&D. You can start with a single photo, a set of slides, or a process document, then build a complete, interactive training flow - no advanced design skills required.

Why Colossyan for training:

- Document to video: import a PDF, Word doc, or slide deck to auto-build scenes and draft narration.

- AI avatars for training: choose customizable avatars, or create Instant Avatars of your trainers. Add AI voiceover - use default voices or clone your own for consistency.

- Brand kit for video: apply fonts, colors, and logos in one click.

- Interactive training videos: add quizzes and branching to turn passive content into decision-making practice.

- Analytics and SCORM: export SCORM 1.2/2004 and track completions, scores, and time watched in your LMS.

- Instant translation video: translate your entire module while keeping timing and animations intact.

- Pronunciations: lock in brand terms and technical words so narration is accurate.

Example workflow: safety onboarding from factory photos

- Import your SOP PDF or PPT with equipment photos. We convert each page into scenes.

- Add a safety trainer avatar for narration. Drop in your photos from the Content Library. Use animation markers to highlight hazards at the right line in the script.

- Use Pronunciations for technical terms. If you want familiarity, clone your trainer’s voice.

- Add a branching scenario: “Spot the hazard.” Wrong selections jump to a scene that explains consequences; right selections proceed.

- Export as SCORM 1.2/2004 with a pass mark. Push it to your LMS and monitor quiz scores and time watched.
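The “Spot the hazard” branch is, structurally, a small graph: each scene lists its choices and the scene each choice leads to. Sketching it this way before building it helps catch dead ends and loops. The scene IDs, text, and dictionary layout below are hypothetical and are not Colossyan’s internal format.

```python
# Hypothetical scene graph for a "Spot the hazard" branch.
SCENES = {
    "spot_hazard": {
        "text": "Spot the hazard on this conveyor line.",
        "choices": {
            "unguarded pinch point": "next_topic",
            "fire extinguisher": "why_not_extinguisher",
            "floor markings": "why_not_markings",
        },
    },
    "why_not_extinguisher": {
        "text": "The extinguisher is mounted and tagged. Look at the moving parts.",
        "choices": {"try again": "spot_hazard"},
    },
    "why_not_markings": {
        "text": "The markings are intact. Look for moving parts without guards.",
        "choices": {"try again": "spot_hazard"},
    },
    "next_topic": {"text": "Correct. Next: lockout/tagout.", "choices": {}},
}


def play(start: str, picks: list[str]) -> list[str]:
    """Follow a learner's picks through the graph and return the scenes visited."""
    visited = [start]
    current = start
    for pick in picks:
        choices = SCENES[current]["choices"]
        if pick not in choices:
            raise ValueError(f"{pick!r} is not an option in scene {current!r}")
        current = choices[pick]
        visited.append(current)
    return visited


print(play("spot_hazard", ["fire extinguisher", "try again", "unguarded pinch point"]))
```

Wrong answers route through a consequence scene and back to the question, while the right answer moves on, which mirrors the flow described above.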

Example workflow: product update explainer from a single hero image

- Start with Document to Video to generate a first-draft script.

- Add your hero photo and screenshots. Use Conversation Mode to stage a dialogue between a PM avatar and a Sales avatar.

- Resize from 16:9 for the LMS to 9:16 for mobile snippets.

- Translate to German and Japanese. The timing and animation markers carry over.

Example script snippet you can reuse

- On screen: close-up of the new dashboard image. Avatar narration: “This release introduces three upgrades: real-time alerts, role-based views, and offline sync. Watch how the ‘Alerts’ tab updates as we simulate a network event.” Insert an animation marker to highlight the Alerts icon.

Example interactive quiz

- Question: Which control prevents unauthorized edits?

- Options: A) Draft lock; B) Role-based views; C) Offline sync; D) Real-time alerts

- Correct: B. Feedback: “Role-based views restrict edit rights by role.”

Production tips for better photo-to-video results

- Start with high-resolution images; avoid heavy compression.

- Pick the right aspect ratio per channel: 16:9 for LMS, 9:16 for social.

- Keep camera motion subtle; time highlights with animation markers.

- Balance music and narration with per-scene volume controls.

- Lock pronunciations for brand names; use cloned voices for consistency.

- Keep micro-clips short; chain scenes with templates for longer modules.

- Localize early; Instant Translation preserves timing and layout.
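When switching a photo or clip between 16:9 and 9:16 means cropping, it is worth checking the arithmetic: a 9:16 window cut from a 1920×1080 frame is only about 607 pixels wide, so most of the image is lost. Here is a minimal sketch of the largest centered crop. It assumes you crop the asset rather than re-lay out the scene, and it does not model how any particular editor resizes layouts.

```python
from fractions import Fraction


def center_crop(src_w: int, src_h: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Largest centered crop (x, y, w, h) of a src_w x src_h frame at ratio_w:ratio_h."""
    target = Fraction(ratio_w, ratio_h)
    if Fraction(src_w, src_h) > target:
        # Source is wider than the target: keep full height, trim the sides.
        h = src_h
        w = int(h * target)
    else:
        # Source is taller (or equal): keep full width, trim top and bottom.
        w = src_w
        h = int(w / target)
    # Round down to even sizes; 4:2:0 video (e.g. typical H.264) needs them.
    w -= w % 2
    h -= h % 2
    x = (src_w - w) // 2
    y = (src_h - h) // 2
    return x, y, w, h


print(center_crop(1920, 1080, 9, 16))  # (657, 0, 606, 1080)
print(center_crop(1080, 1920, 16, 9))  # (0, 657, 1080, 606)
```

Because so little survives the crop, it usually pays to shoot or choose photos with the subject near the center, or to create a separate vertical layout instead.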

Repurposing ideas: from static assets to scalable video

- SOPs and process docs to microlearning: Document to Video builds scenes; add photos, quizzes, and export SCORM.

- Field photos to scenario-based training: use Conversation Mode for role-plays like objection handling.

- Slide decks to on-demand refreshers: import PPT/PDF; speaker notes become scripts.

- Blog posts and web pages to explainers: summarize with Document to Video; add screenshots or stock footage.

Convert PowerPoints Into Videos With Four Clicks

Converting PowerPoints into videos isn’t just convenient anymore—it’s essential. Videos are more engaging, accessible, and easier to share across platforms. You don’t need special software to watch them, and they help your presentations reach a wider audience.

Manually recording or exporting slides is time-consuming and clunky, and Colossyan makes the process effortless. Here’s a simple, step-by-step guide to turning your PowerPoint presentation into a professional video using Colossyan.

🪄 Step 1: Upload Your PowerPoint File

Start by logging into your Colossyan account.

- Click “Create Video” and select “Upload Document”.

- Upload your PowerPoint (.pptx) file directly from your computer or cloud storage.

Colossyan will automatically process your slides and prepare them for video creation.

🎨 Step 2: Apply Your Brand Kit

Keep your video on-brand and professional.

- Open your Brand Kit settings to automatically apply your company’s logo, colors, and fonts.

- This ensures every video stays consistent with your visual identity—perfect for corporate or training content.

🗣️ Step 3: Add an AI Avatar and Voice

Bring your slides to life with a human touch.

- Choose from Colossyan’s library of AI avatars to act as your on-screen presenter.

- Select a voice and language that best matches your tone or audience (Colossyan supports multiple languages and natural-sounding voices).

- You can also adjust the script or narration directly in the editor.

✏️ Step 4: Customize and Edit Your Video

Once your slides are imported:

- Rearrange scenes, update text, or add visuals in the Editor.

- Insert quizzes, interactive elements, or analytics tracking if you’re creating training content.

- Adjust pacing, transitions, and on-screen media for a polished final result.

📦 Step 5: Export and Share Your Video

When you’re happy with your video:

- Export it in your preferred format (Full HD 1080p is a great balance of quality and file size).

- For e-learning or training, export as a SCORM package to integrate with your LMS.

- Download or share directly via a link—no PowerPoint software needed.

💡 Why Use Colossyan for PowerPoint-to-Video Conversion?

- No technical skills required: Turn decks into videos in minutes.

- Consistent branding: Maintain a professional, on-brand look.

- Engaging presentation: Human avatars and voiceovers hold attention better than static slides.

- Trackable performance: Use quizzes and analytics to measure engagement.

- Flexible output: From corporate training to educational content, your videos are ready for any platform.

🚀 In Short

Converting PowerPoints to videos with Colossyan saves time, increases engagement, and makes your content more accessible than ever.

You upload, customize, and share—all in a few clicks. It’s not just a faster way to make videos; it’s a smarter way to make your presentations work harder for you.

Translate Videos to English: The Complete Enterprise Localization Strategy

When you need to translate videos to English, you're tackling more than a simple language conversion task—you're executing a strategic business decision to expand your content's reach to the world's dominant business language. English remains the lingua franca of global commerce, spoken by 1.5 billion people worldwide and serving as the primary or secondary language in most international business contexts. But traditional video translation is expensive, slow, and operationally complex. How do modern organizations localize video content efficiently without sacrificing quality or breaking the budget?

The strategic answer lies in leveraging AI-powered translation workflows that integrate directly with your video creation process. Instead of treating translation as an afterthought—a separate project requiring new vendors, multiple handoffs, and weeks of coordination—platforms like Colossyan demonstrate how intelligent automation can make multilingual video creation as simple as clicking a button. This comprehensive guide reveals exactly how to translate videos to English at scale, which approach delivers the best ROI for different content types, and how leading organizations are building global video strategies that compound competitive advantage.

Why Translating Videos to English Is a Strategic Priority

English video translation isn't just about accessibility—it's about market access, brand credibility, and competitive positioning in the global marketplace.

The Global Business Case for English Video Content

English holds a unique position in global business. While Mandarin Chinese has more native speakers, English dominates international commerce, technology, and professional communication. Consider these strategic realities:

- Market Reach: The combined purchasing power of English-speaking markets (the US, UK, Canada, Australia, and English speakers in other countries) exceeds $30 trillion annually. A video available only in another language excludes this audience entirely.

- B2B Decision-Making: In multinational corporations, English is typically the common language regardless of headquarters location. Technical evaluations, vendor assessments, and purchasing decisions happen in English, so your product demos, case studies, and training content must be available in English to be seriously considered.

- Digital Discovery: English dominates online search and content discovery, and search engines generally have more mature support for English than for many other languages. Video content in English is more discoverable, more likely to rank, and more frequently shared in professional contexts.

- Talent Acquisition and Training: For companies with distributed or global teams, English training content ensures every team member, regardless of location, can access critical learning materials. This is particularly important in tech, engineering, and other fields where English is the de facto standard.

The Traditional Translation Bottleneck

Despite these compelling reasons, many organizations underutilize video because traditional translation is prohibitively expensive and operationally complex:

- Cost: Professional human translation, voice-over recording, and video re-editing for a 10-minute video typically costs $2,000-5,000 per target language. For videos requiring multiple languages, costs multiply rapidly.

- Timeline: Traditional workflows span 2-4 weeks from source video completion to translated version delivery, during which your content sits idle rather than driving business value.

- Coordination Complexity: Managing translation agencies, voice talent, and video editors across time zones creates project management overhead that many teams simply can't sustain.

- Update Challenge: When source content changes (products update, regulations change, information becomes outdated), the entire translation cycle must repeat. This makes maintaining current multilingual content practically impossible.

These barriers mean most organizations either (1) don't translate video content at all, limiting global reach, or (2) translate only the highest-priority flagship content, leaving most of their video library unavailable to English-speaking audiences.

How AI Translation Transforms the Economics

AI-powered video translation fundamentally changes this calculus. The global AI video translation market was valued at USD 2.68 billion and is projected to reach USD 33.4 billion by 2034—a 28.7% CAGR—driven by organizations discovering that AI makes translation affordable, fast, and operationally sustainable.
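As a quick consistency check on those market figures, the compound-growth relation end = start × (1 + rate)^years can be solved for the number of years. The text does not state the base year, so this sketch only derives how many years of 28.7% annual growth separate USD 2.68 billion from USD 33.4 billion; the function names are illustrative.

```python
import math

def implied_years(start_value: float, end_value: float, growth_rate: float) -> float:
    """Years of compounding at growth_rate needed to grow start_value into end_value."""
    # end = start * (1 + rate) ** years  =>  years = ln(end / start) / ln(1 + rate)
    return math.log(end_value / start_value) / math.log(1 + growth_rate)

def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures in USD billions, taken from the paragraph above.
years = implied_years(2.68, 33.4, 0.287)
print(f"Implied growth period: {years:.1f} years")       # prints "Implied growth period: 10.0 years"
print(f"CAGR over 10 years: {cagr(2.68, 33.4, 10):.1%}")  # prints "CAGR over 10 years: 28.7%"
```

The figures are internally consistent: a 28.7% CAGR over roughly ten years turns 2.68 into 33.4, which suggests the base valuation sits about a decade before 2034.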

Modern platforms enable workflows where:

- Translation happens in hours instead of weeks

- Costs can be up to 90% lower than with traditional services

- Updates are trivial (regenerate rather than re-translate)

- Multiple languages can be created simultaneously (no linear cost scaling)

This transformation makes it practical to translate your entire video library to English, not just select pieces—fundamentally expanding your content's impact and reach.

Understanding Your Translation Options: Subtitles vs. Dubbing

When you translate videos to English, your first strategic decision is how you'll deliver that translation. This isn't just a technical choice—it shapes viewer experience, engagement, and content effectiveness.

English Subtitles: Preserving Original Audio

Adding English subtitles keeps your original video intact while making content accessible to English-speaking audiences.

Advantages:

- Preserves authenticity: Original speaker's voice, emotion, and personality remain unchanged

- Lower production complexity: No need for voice talent or audio replacement

- Cultural preservation: Viewers hear authentic pronunciation, accent, and delivery

- Accessibility bonus: Subtitles also benefit deaf/hard-of-hearing viewers and enable sound-off viewing

Disadvantages:

- Cognitive load: Viewers must split attention between reading and watching

- Reduced engagement: Reading subtitles is less immersive than native language audio

- Visual complexity: For content with heavy on-screen text or detailed visuals, subtitles can overwhelm

Best use cases:

- Documentary or interview content where speaker authenticity is central

- Technical demonstrations where viewers need to focus on visual details

- Content for audiences familiar with reading subtitles

- Social media video (where much viewing happens with sound off)
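Subtitles are usually delivered as a separate sidecar file that players and platforms load alongside the video, which is why the original audio stays untouched. As an illustration, here is a minimal sketch that writes translated segments in the widely used SubRip (.srt) format. The `Segment` type and the sample segments are hypothetical; in practice the timings come from whatever transcription or subtitling tool you use.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str     # translated English text shown during this interval

def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

def to_srt(segments: list[Segment]) -> str:
    """Build SRT text: a cue number, a timing line, the text, then a blank line."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}\n{seg.text}\n"
        )
    return "\n".join(cues)

# Hypothetical segments from a translated transcript.
segments = [
    Segment(0.0, 2.5, "Welcome to the onboarding course."),
    Segment(2.5, 6.04, "Let's start with the safety basics."),
]
print(to_srt(segments))
```

Because the subtitle file is plain text, correcting a translation later means editing one cue rather than re-rendering the video.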

AI Dubbing: Creating Native English Audio

Replacing original audio with AI-generated English voice-over creates an immersive, native viewing experience.

Advantages:

- Natural viewing experience: English speakers can simply watch and listen without reading

- Higher engagement: Viewers retain more when not splitting attention with subtitles

- Professional polish: AI voices are now remarkably natural and appropriate for business content

- Emotional connection: Voice inflection and tone enhance message impact

Disadvantages:

- Original speaker presence lost: Viewers don't hear the actual person speaking

- Voice quality variance: AI voice quality varies by platform; testing is important

- Lip-sync considerations: If the original speaker is prominently on camera, their lip movements won't match the English audio

Best use cases:

- Training and educational content where comprehension is paramount

- Marketing videos optimizing for engagement and emotional connection

- Content where the speaker isn't prominently on camera

- Professional communications where polished delivery matters

The Hybrid Approach: Maximum Accessibility

Many organizations implement both:

- Primary audio: AI-generated English dubbing for immersive viewing

- Secondary option: Subtitles available for viewer preference

This combination delivers maximum accessibility and viewer choice, though it requires slightly more production work.

The Colossyan Advantage: Integrated Translation

This is where unified platforms deliver significant efficiency gains. Rather than choosing between subtitles and dubbing as separate production tracks, Colossyan lets you generate both from a single workflow:

1. Your original script is auto-translated to English

2. AI generates natural English voice-over automatically

3. English subtitles are created simultaneously

4. You can even generate an entirely new video with an English-speaking AI avatar

This integrated approach means you're not locked into a single translation method—you can test different approaches and provide multiple options to accommodate viewer preferences.

Step-by-Step: How to Translate Videos to English Efficiently

Executing professional video translation requires a systematic approach. Here's the workflow leading organizations use to translate content efficiently and at scale.

Phase 1: Prepare Your Source Content

Quality translation starts with quality source material. Invest time here to ensure smooth downstream processes.

Obtain accurate source transcription:

If your video was created from a script, you're already ahead—that script is your starting point. If not, you need an accurate transcript of what's being said.

Modern AI transcription tools such as OpenAI's Whisper, Otter.ai, or built-in platform features can deliver 95%+ accuracy on clear audio. Upload your video, receive the transcript, and spend 15-20 minutes reviewing it for errors in:

- Proper names and terminology

- Technical jargon specific to your industry

- Numbers, dates, and specific figures

- Acronyms and abbreviations

This investment dramatically improves translation quality since errors in transcription cascade into translation mistakes.
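To focus that review pass, a short script can pre-flag the token types listed above. This is a rough heuristic sketch using string rules and a naive sentence split, not a real named-entity recognizer: it marks anything containing a digit, all-caps acronyms, words from a hypothetical lowercase `glossary` of your own jargon, and capitalized words that don't start a sentence (likely names). It will miss some errors and over-flag others, so treat its output as a checklist, not a verdict.

```python
import re

def flag_for_review(transcript: str, glossary: set[str]) -> dict[str, list[str]]:
    """Heuristically flag transcript tokens that are often mis-transcribed.

    glossary: hypothetical set of lowercase industry terms to double-check.
    """
    flags: dict[str, list[str]] = {"numbers": [], "acronyms": [], "glossary": [], "names": []}
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", transcript.strip()):
        for position, raw in enumerate(sentence.split()):
            word = raw.strip(".,;:!?\"'()")
            if not word:
                continue
            if any(ch.isdigit() for ch in word):
                flags["numbers"].append(word)    # figures, dates, versions
            elif len(word) >= 2 and word.isupper():
                flags["acronyms"].append(word)   # e.g. SLA, LMS
            elif word.lower() in glossary:
                flags["glossary"].append(word)   # your own jargon list
            elif position > 0 and word[0].isupper() and word != "I":
                flags["names"].append(word)      # capitalized mid-sentence: likely a proper name
    return flags

sample = "Welcome to Acme onboarding. Our SLA is 99.9% uptime. Configure the kubelet before March 3."
print(flag_for_review(sample, glossary={"kubelet"}))
```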

Clean and optimize the script:

Before translation, refine your source text:

- Remove filler words (um, uh, like, you know)

- Clarify ambiguous phrases that might confuse machine translation

- Add context notes for terms that shouldn't be translated (product names, company names)

- Break very long sentences into shorter, clearer statements

Well-prepared source text yields dramatically better translations—spending 30 minutes optimizing can save hours of correction later.
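Parts of this cleanup can be partially automated. The sketch below is a simplified illustration, not a production tool: it strips a fixed list of filler words, lists sentences over a word-count threshold for manual rewriting, and swaps do-not-translate terms (such as product names) for placeholder tokens before translation, restoring them afterward. The filler list, the 25-word threshold, the term list, and the `[[DNT0]]` placeholder scheme are all assumptions; translation engines usually leave such tokens alone but are not guaranteed to, and some services offer their own glossary features that may serve this purpose better.

```python
import re

# Assumption: "like" is deliberately omitted because it is often meaningful ("looks like").
FILLERS = ["you know", "um", "uh"]

def remove_fillers(text: str) -> str:
    """Remove whole-word fillers (plus a trailing comma) and collapse extra spaces."""
    for filler in FILLERS:
        text = re.sub(rf"\b{re.escape(filler)}\b,?\s*", "", text, flags=re.IGNORECASE)
    return re.sub(r"\s{2,}", " ", text).strip()

def long_sentences(text: str, max_words: int = 25) -> list[str]:
    """Return sentences longer than max_words so a person can split them."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]

def protect_terms(text: str, terms: list[str]) -> tuple[str, dict[str, str]]:
    """Replace do-not-translate terms with placeholder tokens; return the token map."""
    mapping: dict[str, str] = {}
    # Longest terms first, so "Brand Kit Pro" is not broken up by "Brand Kit".
    for i, term in enumerate(sorted(terms, key=len, reverse=True)):
        token = f"[[DNT{i}]]"
        if term in text:
            text = text.replace(term, token)
            mapping[token] = term
    return text, mapping

def restore_terms(text: str, mapping: dict[str, str]) -> str:
    """Put the original terms back after translation."""
    for token, term in mapping.items():
        text = text.replace(token, term)
    return text

print(remove_fillers("Um, the rollout starts, uh, next week."))
protected, mapping = protect_terms("Open Colossyan and apply the Brand Kit.", ["Brand Kit", "Colossyan"])
print(protected)
```

Note that removing a sentence-initial filler leaves the next word in lowercase, one reason a human read-through is still worthwhile.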

Phase 2: Execute the Translation

With clean source text, translation becomes straightforward—though quality varies significantly by approach.

Machine Translation (Fast and Affordable):

AI translation services like Google Translate, DeepL, or built-in platform features provide instant translation at zero or minimal cost.

Best practices:

- DeepL typically delivers more natural results than Google Translate for European languages

- ChatGPT or Claude can produce context-aware translations when you supply background ("Translate this technical training script from French to English, maintaining a professional but accessible tone")

- Split long documents into manageable chunks for free-tier services with character limits

For straightforward business content, modern machine translation often gets 85-95% of the way to a finished translation, requiring only minor human refinement.
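The tip about splitting long documents for free-tier character limits can be sketched as follows. This minimal version breaks text on sentence boundaries and packs sentences into chunks no longer than a limit, hard-splitting only a single sentence that is itself too long. The 1,500-character default is a placeholder assumption; check the actual limit of the service you use.

```python
import re

def chunk_text(text: str, max_chars: int = 1500) -> list[str]:
    """Split text into chunks of at most max_chars, keeping sentences whole where possible."""
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        # A single oversized sentence is hard-split so no chunk exceeds the limit.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks

print(chunk_text("One two. Three four. Five six.", max_chars=20))
```

Translating each chunk separately can lose cross-sentence context, so larger chunks (up to the service limit) are generally preferable to many tiny ones.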

Human-in-the-Loop (Optimal Quality):

The strategic approach: leverage AI speed, apply human expertise where it matters most.

1. Generate initial translation with AI (5 minutes)

2. Have a bilingual reviewer refine for naturalness and accuracy (20-30 minutes)

3. Focus human time on critical sections: opening hook, key messages, calls-to-action