Bring Photos to Life with the Latest AI Picture to Video Generators

AI picture-to-video tools can turn a single photo into a moving clip within minutes. They’re becoming essential for social content, product teasers, concept pitches, and filler b-roll for training videos. But not all generators are equal — they vary widely in quality, speed, rights, and cost. Here’s a clear look at how they work, what’s available today, and how to integrate them with Colossyan to build on-brand, measurable training at scale.

What an AI Picture-to-Video Generator Does

These tools animate still images using simulated camera moves, transitions, and effects, then export them as short clips (typically MP4s, sometimes GIFs). Most let you choose from common aspect ratios like 16:9, 1:1, or 9:16, and resolutions from HD to 4K.

Typical applications range from b-roll and social posts to product promos, animated portraits, and background visuals for training or explainers.

The Latest Tools and What They Offer

EaseMate AI is a flexible entry point — it’s free to use without sign-up, watermark-free for new users, and supports several top engines including Veo, Sora, Runway, Kling, Wan, and PixVerse. You can control ratios, transitions, zooms, and particle effects. It’s a handy sandbox for testing multiple engines side-by-side.

Adobe Firefly (Image to Video) integrates tightly with Premiere Pro and After Effects. It currently supports 1080p output with 4K “coming soon,” and offers intuitive controls for pan, tilt, zoom, and directional sweeps. Its training data is licensed or public domain, giving it clear commercial footing.

In Reddit’s Stable Diffusion community, users often report Veo 3 as the best for overall quality, Kling for resolution (though slower), and Runway for balancing quality and speed. Sora’s paid tier allows unlimited generations, while offline options like Wan 2.2 and Snowpixel appeal to teams with strict privacy rules.

Vidnoz Image-to-Video offers one free generation per day without a watermark and claims commercial use is allowed. With more than 30 animation styles, multiple quality levels, and built-in editing, it’s a fast way to produce vertical or horizontal clips that can double as training visuals.

DeepAI Video Generator handles both text-to-video and image-to-video. Its short clips (4–12 seconds) work well for microlearning. The Pro plan starts at $4.99 per month and includes 25 seconds of standard video before per-second billing kicks in.

ImageMover AI focuses on animated portraits and batch creation. You can upload text, images, or scripts, select templates, and export HD clips with your own audio. Rights claims should be double-checked, but the simplicity makes it ideal for animating headshots for onboarding videos.

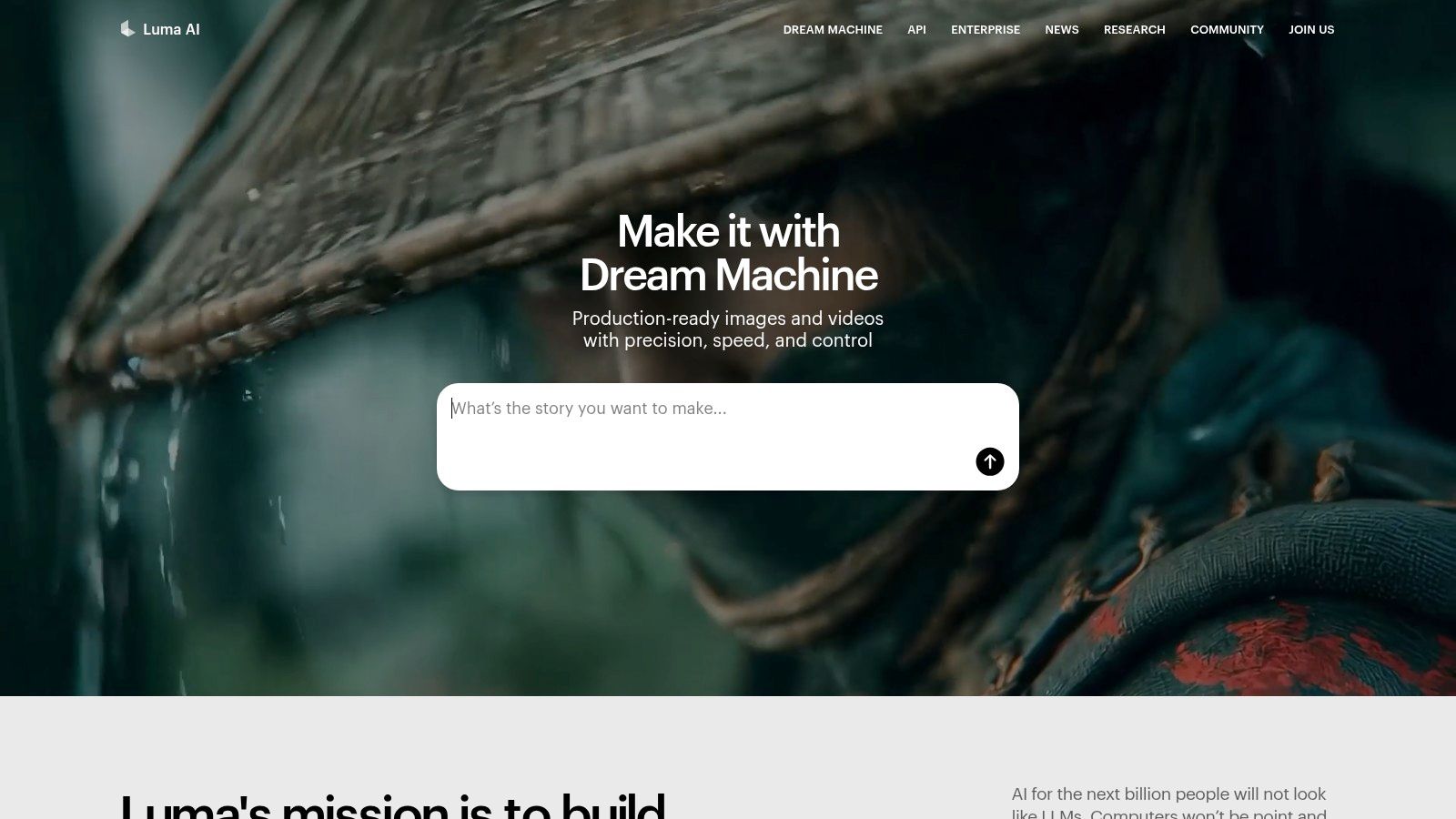

Luma AI’s Dream Machine stands out for its 3D-like depth and cinematic transitions. It even offers an API for developers, making it useful for teams looking to automate visuals at scale.

Pixlr Image-to-Video generates HD videos in under a minute and allows free, watermark-free exports up to 4K. Its built-in Brand Kit automatically applies company fonts, colors, and logos, making it great for branded e-learning clips.

What to Expect: Quality, Speed, and Cost

In the community reports cited above, Veo 3 is most often rated highest for quality. Kling can reach higher resolutions but takes longer to render. Runway balances quality and speed, while Sora and free options like VHEER suit bulk generation but may introduce glitches.

Pricing structures vary widely. EaseMate, Pixlr, and Vidnoz have free or limited tiers; Adobe uses a credit system; and DeepAI bills by the second after an included base.
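To compare an "included base plus per-second billing" plan against a flat tier, it can help to write the arithmetic down. The sketch below is only an illustration: `monthly_cost` is a hypothetical helper, and the overage rate in the example is a placeholder, not DeepAI's published price. Only the $4.99 base and the 25 included seconds come from the description above.

```python
def monthly_cost(
    seconds_used: float,
    base_fee: float,
    included_seconds: float,
    overage_per_second: float,
) -> float:
    """Base fee plus per-second overage beyond the included allowance."""
    overage = max(0.0, seconds_used - included_seconds)
    return round(base_fee + overage * overage_per_second, 2)


# The 0.10 rate is a made-up placeholder; check the vendor's pricing page.
estimate = monthly_cost(
    seconds_used=60, base_fee=4.99, included_seconds=25, overage_per_second=0.10
)
print(estimate)
```

Swapping in the real rate and your expected monthly runtime gives a quick break-even check against credit-based or daily-limit plans.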

Most tools are designed for short clips — typically under 12 seconds. Rather than forcing one long render, stack a few short clips for smoother results. Precise prompting makes a big difference: specify camera moves, lighting, and mood to help mid-tier engines produce cleaner motion.
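The stacking advice above comes down to simple arithmetic. As a minimal sketch, not any tool's API, the hypothetical `plan_clips` helper below splits a target runtime into equal clips that each stay under a per-clip cap. The 12-second default is an assumption taken from the "under 12 seconds" guidance.

```python
import math


def plan_clips(total_seconds: float, max_clip_seconds: float = 12.0) -> list[float]:
    """Split a target runtime into equal clips no longer than the cap."""
    if total_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    # Smallest number of clips that keeps every clip at or under the cap.
    count = math.ceil(total_seconds / max_clip_seconds)
    return [round(total_seconds / count, 2)] * count


print(plan_clips(30))  # three clips of 10 seconds each
```

Equal-length clips are a design choice here; in practice you might prefer a longer establishing shot followed by shorter cuts.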

Choosing the Right Tool

When comparing options, check each platform’s maximum resolution, supported aspect ratios, and available camera controls. Confirm watermark and commercial rights policies, especially on free tiers, and verify any “privacy-safe” claims with your legal team. If you need speed or volume, look for platforms that promise results in under a minute or support batch generation.

Integrations can also guide your decision: Firefly links directly with Adobe tools; Luma provides an API for automation. Predictable pricing — whether via credits, daily limits, or per-second billing — is another practical factor for enterprise teams.

Example Prompts for Consistent Results

For cinematic product b-roll, try describing your scene precisely:

“A stainless steel water bottle on a dark wood table, soft studio lighting, shallow depth of field, slow push-in, subtle parallax, 8 seconds, cinematic color grade.”

For animated portraits:

“Professional headshot, gentle head movement and natural eye blinks, soft front lighting, 1:1, 6 seconds.”

For technical explainers:

“Macro photo of a PCB, top-down to angled tilt, blueprint overlay, cool tone, 10 seconds.”

And for social verticals:

“Safety signage poster, bold colors, fast zoom with particle burst, upbeat motion, 9:16, 5 seconds.”
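The prompts above share a pattern: subject, lighting, camera move, optional style, aspect ratio, and duration. If you generate many clips, a small template can keep that pattern consistent. This is a sketch under assumptions: `ClipPrompt` and its field names are hypothetical, and individual engines may weigh or parse prompt wording differently.

```python
from dataclasses import dataclass


@dataclass
class ClipPrompt:
    """Hypothetical container for the prompt elements used in the examples."""

    subject: str
    lighting: str
    camera_move: str
    seconds: int
    aspect_ratio: str = "16:9"
    style: str = ""

    def render(self) -> str:
        # Join the non-empty parts into one comma-separated prompt string.
        parts = [self.subject, self.lighting, self.camera_move]
        if self.style:
            parts.append(self.style)
        parts += [self.aspect_ratio, f"{self.seconds} seconds"]
        return ", ".join(parts) + "."


portrait = ClipPrompt(
    subject="Professional headshot",
    lighting="soft front lighting",
    camera_move="gentle head movement and natural eye blinks",
    seconds=6,
    aspect_ratio="1:1",
)
print(portrait.render())
```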

Fast Workflows with Colossyan

Once you’ve generated clips, Colossyan helps turn them into interactive, measurable training.

1. Social teaser to training module:

Create a short 9:16 clip in Pixlr, then import it into Colossyan as an opener. Add Avatars, Voices, and brand elements, followed by an interactive quiz to track engagement.

2. Onboarding role-plays:

Animate expert portraits using ImageMover, then script dialogue in Colossyan’s Conversation Mode. The Doc2Video feature can import handbooks directly, and final outputs are exportable to SCORM for your LMS.

3. Multilingual microlearning:

Build short b-roll loops in DeepAI, combine them with slides in Colossyan, and use Instant Translation for multilingual voiceovers and text. Analytics track completion and quiz scores across regions.

Matching Tools to Enterprise Needs

Use Firefly when you need precise camera motion that aligns with existing footage.

Turn to EaseMate as a testing hub for different engines.

Choose Luma for immersive 3D-style intros.

For quick, branded clips at scale, Pixlr and Vidnoz are efficient budget options.

Avoiding Common Pitfalls

Watch for unexpected watermarks or rights restrictions, especially as free-tier policies change. If a video looks jittery, switch engines or refine your prompt to better define camera motion and lighting. Keep visuals consistent using Brand Kits, and localize content through Colossyan’s Instant Translation to prevent layout shifts when text expands. Finally, make videos interactive: quizzes and branching scenarios let you measure learning outcomes rather than just count passive views.

How Colossyan Turns Raw Clips into Scalable Learning

Colossyan isn’t just for assembly — it transforms your visuals into structured, measurable training. You can import documents or slides directly with Doc2Video, apply brand templates, clone executive voices for narration, and add interactions like quizzes. Instant Translation and SCORM export ensure global reach and compliance, while Analytics report engagement and scores. Workspace Management keeps everything organized for teams producing at scale.

Top eLearning Authoring Tools Every Course Creator Should Know

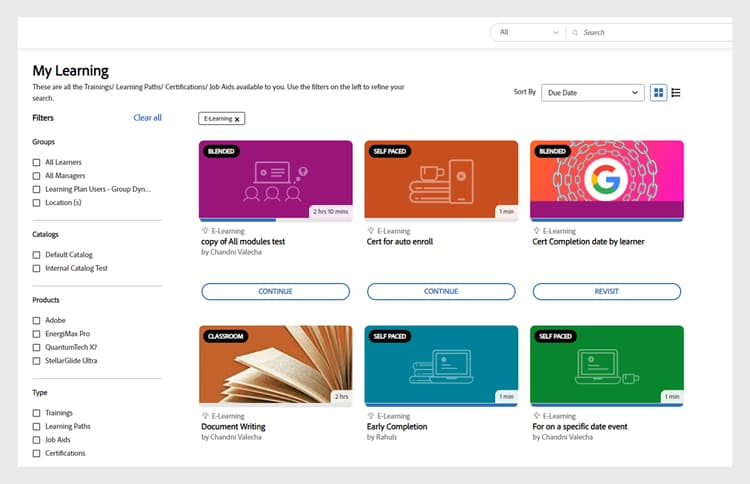

The authoring tools market is crowded. As of November 2025, 206 tools are listed in eLearning Industry’s directory. And the line between “authoring tool” and “course builder” keeps blurring. That’s why the right choice depends on your use case, not a generic “best of” list.

This guide gives you a practical way to choose, a quick set of best picks by scenario, short notes on top tools, and where I’ve seen AI video help teams move faster and measure more. I work at Colossyan, so when I mention video, I’ll explain exactly how I would pair it with these tools.

How to Choose: Evaluation Criteria and Deployment Models

Start with must-haves and be honest about constraints.

- Standards and data: SCORM is table stakes. If you need deeper event data or modern LRS flows, look at xAPI and cmi5. Academic stacks may need LTI. Check your LMS first.

- Interactivity: Branching, robust quizzes, and drag-and-drop should be simple to build.

- Collaboration and governance: Shared asset libraries, permissions, versioning, and review workflows matter once you scale.

- Mobile/responsive output: “Works on mobile” is not the same as “designed for mobile.”

- Localization: Translation workflows, multi-language variants in one course, or at least an efficient way to manage many language copies.

- Analytics: Built-in analytics help you iterate; relying only on LMS completion/score data slows improvement.

Deployment trade-offs

- Desktop: More customization and offline use, but slower updates and weaker collaboration.

- Cloud/SaaS: Real-time collaboration and auto updates, but ongoing subscription.

- Open source: No license fees and maximum control, but higher IT and dev skills needed.

Independent frameworks can help. eLearning Industry ranks tools across nine factors (support, experience, features, innovation, reviews, growth potential, retention, employee turnover, social responsibility). Gyrus adds accessibility, advanced features (VR/gamification/adaptive), and community.

My opinion: If you need to scale to many teams and countries, pick cloud-first with strong governance. If you build a few bespoke simulations per year, desktop can be fine.

Quick Comparison: Best-in-Class Picks by Scenario

Rapid, mobile-first authoring

- Rise 360: Fast, block-based, mobile-first; limited deep customization.

- Easygenerator: SME-friendly, built-in analytics; auto-translate into 75 languages.

- How to pair Colossyan: Convert docs or PPTs to on-brand videos in minutes with Doc2Video and Brand Kits, add quizzes, and export SCORM for the LMS.

Advanced custom interactivity and simulations

- Storyline 360: Very customizable interactions; slower to author; weaker mobile optimization.

- Adobe Captivate: Advanced sims and VR; steep learning curve; strong accessibility.

- dominKnow | ONE: Flow/Claro modes, single-source reuse, and collaboration.

- How to pair Colossyan: Front-load storylines with short explainer videos using avatars and conversation mode, then let the tool handle the branching. I export SCORM to capture pass/fail.

Global rollouts

- Elucidat: Up to 4x faster with best-practice templates; auto-translate to 75 languages; strong analytics and variation management.

- Gomo: Supports multi-language “layers” and localization for 160+ languages.

- Genially: AI translation into 100+ languages; Dynamic SCORM auto-syncs updates.

- How to pair Colossyan: Use Instant Translation and multilingual voices, with Pronunciations to handle brand and technical terms.

Accessibility and compliance

- Lectora: Deep customization with Section 508/WCAG focus.

- Evolve: Responsive and accessibility-minded.

- How to pair Colossyan: Add subtitles, export SRT/VTT, and lock styling with Brand Kits.

Video-first learning and microlearning

- Camtasia: Best-in-class screen capture with SCORM quizzes; 3-year price lock.

- How to pair Colossyan: Add avatars and multilingual narration, and combine screencasts with interactive, SCORM-compliant video segments.

Open-source and budget-conscious

- Adapt: Free, responsive, dev-heavy; SCORM-only.

- Open eLearning: Free, offline desktop; SCORM; mobile-responsive.

- How to pair Colossyan: Cut production time by turning SOPs into consistent, branded videos and keep LMS tracking via SCORM.

Deep Dive on Top Tools (Strengths, Watchouts, Pairing Tips)

Articulate 360 (Rise, Storyline, Review, Reach, Localization)

- Standouts: AI Assistant; Rise for speed, Storyline for custom interactivity; built-in localization to 80+ languages; integrated review and distribution.

- My take: A strong all-rounder suite. Rise is fast but limited; Storyline is powerful but slower. Use both where they fit.

- Pair with Colossyan: Create persona-led video intros and debriefs, use conversation mode for role-plays, and export SCORM so tracking is consistent.

Adobe Captivate

- Standouts: Advanced sims and VR; strong accessibility. Watchouts: steep learning curve, slower updates.

- My take: Good if you need high-fidelity software simulations or VR.

- Pair with Colossyan: Align stakeholders fast by turning requirements into short explainer videos and use engagement data to refine the simulations.

Elucidat

- Standouts: Up to 4x faster production, Auto-Translate (75 languages), advanced xAPI, Rapid Release updates.

- My take: One of the best for scaling quality across large teams and markets.

- Pair with Colossyan: Localize video intros/outros instantly and clone leaders’ voices for consistent sound in every market.

Gomo

- Standouts: Localization for 160+ languages; multi-language layers.

- My take: Strong choice for global programs where you want one course to handle many languages.

- Pair with Colossyan: Keep pronunciations consistent and export SCORM to track alongside Gomo courses.

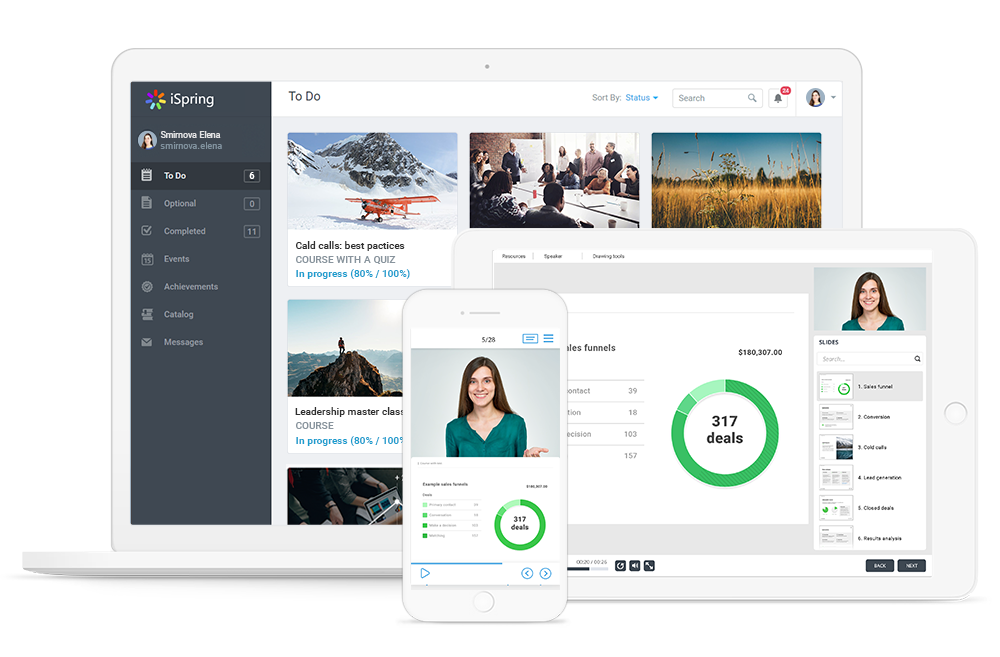

iSpring Suite

- Standouts: 4.7/5 from 300 reviews, 116,000 assets, pricing from $470/author/year.

- Watchouts: Windows-centric; not fully mobile-optimized; no auto-translate.

- My take: Great for PowerPoint-heavy teams that want speed without a big learning curve.

- Pair with Colossyan: Modernize PPT content with avatars and interactive checks, then export SCORM so it fits existing LMS flows.

dominKnow | ONE

- Standouts: Flow (true responsive) + Claro; single-source reuse; central assets; built-in sims; robust collaboration.

- My take: Powerful for teams that care about reuse and governance.

- Pair with Colossyan: Batch-convert SOPs to video with Doc2Video and keep branding aligned with Brand Kits.

Rise 360

- Standouts: Very fast, mobile-first. Watchouts: English-only authoring; limited customization.

- My take: Perfect for quick, clean microlearning and compliance basics.

- Pair with Colossyan: Localize video segments with Instant Translation and export SCORM to track with Rise.

Storyline 360

- Standouts: Deep customization; huge community. Watchouts: slower at scale; weaker mobile and collaboration.

- My take: Use it when you truly need custom interactions; not for everything.

- Pair with Colossyan: Add narrative scenes with avatars to set context before branching.

Easygenerator

- Standouts: Auto-translate (75), built-in analytics; SME-friendly.

- My take: Good for decentralizing authoring to subject matter experts.

- Pair with Colossyan: Convert SME notes into short videos and merge our CSV analytics with their reports.

Lectora

- Standouts: Accessibility leader; strong customization. Watchouts: heavier publishing.

- My take: A reliable pick for regulated industries.

- Pair with Colossyan: Supply captioned video guidance for complex tasks.

Evolve

- Standouts: Broad component set; WYSIWYG; accessibility emphasis.

- My take: Practical for responsive projects; some scale governance gaps.

- Pair with Colossyan: Use short explainers to clarify complex interactions.

Adapt (open source)

- Standouts: Free, responsive. Watchouts: SCORM-only; developer-heavy.

- My take: Viable if you have in-house dev skills and want control.

- Pair with Colossyan: Produce polished video without motion design resources.

Camtasia

- Standouts: Screen capture + quizzes; SCORM; 3-year price lock.

- My take: Best for software tutorials and microlearning.

- Pair with Colossyan: Add multilingual voices and embed avatar-led explainers.

Genially

- Standouts: SCORM and LTI; Dynamic SCORM; built-in analytics; AI voiceovers and 100+ language translation; gamification.

- My take: Flexible for interactive comms and learning with analytics baked in.

- Pair with Colossyan: Introduce or recap gamified modules with short avatar videos.

Note on AI: Nano Masters AI claims 90% time and cost reduction for AI-driven role-plays. This shows where the market is going: faster production with measurable outcomes. Test claims with a pilot before you commit.

Localization, Analytics, and Update Workflows

- Localization: Gomo’s multi-language layers and Elucidat’s auto-translate/variation management reduce rework. Genially’s AI translation to 100+ languages speeds up smaller teams. I use Colossyan Instant Translation and Pronunciations so brand names and technical terms are said correctly everywhere.

- Analytics: Elucidat, Easygenerator, and Genially give more than completion. Others lean on the LMS. In Colossyan, I track plays, time watched, and quiz scores, and export CSV to blend with LMS data.

- Update pipelines: Elucidat’s Rapid Release and Genially’s Dynamic SCORM avoid LMS reuploads. Desktop tools require more packaging and version management. With Colossyan, I regenerate videos from updated scripts, keep styling consistent with Brand Kits, and re-export SCORM fast.
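Blending a video analytics CSV with LMS data, as described in the Analytics point above, is essentially a join on a shared learner identifier. The sketch below shows one way to do it with Python's standard `csv` module. The column names (`learner_id`, `plays`, `quiz_score`, `course_status`) and the sample rows are hypothetical; real exports from Colossyan or your LMS will have their own headers.

```python
import csv
import io

# Hypothetical exports; real column names will differ per platform.
VIDEO_CSV = """learner_id,plays,seconds_watched,quiz_score
u1,2,95,80
u2,1,40,55
"""
LMS_CSV = """learner_id,course_status
u1,completed
u2,incomplete
u3,completed
"""


def merge_by_learner(video_csv: str, lms_csv: str) -> list[dict]:
    """Left-join LMS rows with video analytics on learner_id."""
    video = {
        row["learner_id"]: row for row in csv.DictReader(io.StringIO(video_csv))
    }
    merged = []
    for row in csv.DictReader(io.StringIO(lms_csv)):
        extra = video.get(row["learner_id"], {})
        merged.append({
            "learner_id": row["learner_id"],
            "course_status": row["course_status"],
            "plays": int(extra.get("plays", 0)),
            # None marks learners with no video activity at all.
            "quiz_score": int(extra["quiz_score"]) if extra else None,
        })
    return merged


rows = merge_by_learner(VIDEO_CSV, LMS_CSV)
for row in rows:
    print(row)
```

Keeping learners with no video activity (the left join) makes gaps visible: someone marked "completed" in the LMS with zero plays is worth a second look.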

Real-World Stacks: Examples You Can Copy

- First-time SCORM builder: Rise 360 or Easygenerator for structure; Colossyan Doc2Video for quick explainers; SCORM for both. Reddit beginners often want modern UI, fair pricing, and broad export support. This covers it.

- Global compliance across 10+ languages: Elucidat or Gomo for course management; Colossyan for Instant Translation, multilingual voices, and Pronunciations. Less rework, consistent sound.

- Complex branching and simulations: Storyline 360 or Captivate for interactivity; dominKnow | ONE for responsive reuse; Colossyan conversation mode for role-plays; SCORM pass/fail for quiz gates.

- Budget or open source: Adapt or Open eLearning for free SCORM output; Colossyan to produce clean, avatar-led videos without motion designers.

- Video-led software training: Camtasia for screencasts; Colossyan for branded intros/outros, multilingual narration, and interactive checks.

Where Colossyan Fits in Any Authoring Stack

- Speed: Turn SOPs, PDFs, and presentations into videos automatically with Doc2Video or Prompt2Video. Scenes, narration, and timing are generated instantly for faster production.

- Engagement: Use customizable AI avatars, Instant Avatars of real people, gestures, and conversation mode to create human, scenario-led learning experiences.

- Scale and governance: Brand Kits, the Content Library, and Workspace Management features keep teams aligned on design and messaging. Analytics and CSV export support continuous improvement.

- Standards and distribution: Export in SCORM 1.2/2004 with pass/fail and completion rules, or share via secure link or embed.

- Global readiness: Apply Instant Translation, multilingual voices, and Pronunciations to ensure consistent brand sound and correct pronunciation across languages.

- Interactivity and measurement: Add multiple-choice questions and branching directly inside videos, while tracking scores and time watched for detailed performance insights.

Selection Checklist

- Confirm standards: SCORM, xAPI, cmi5, LTI. Match to your LMS and reporting needs.

- Pick a deployment model: desktop for customization/offline; cloud for collaboration/auto-updates; open source for control/low cost.

- Plan localization: auto-translate, multi-language layers, or variation management.

- Design update workflows: can you push updates without reuploading to the LMS?

- Decide where video helps clarity and engagement; place Colossyan there for speed and measurement.

- Validate pricing and total cost of ownership, not just license fees.

- Pilot with a small course to test collaboration, mobile output, and analytics.

One last note: Lists of “best tools” are fine, but context is everything. Match the tool to your delivery model, language footprint, interactivity needs, and update cadence. Then add video where it actually improves understanding. That’s the stack that wins.

What Is Synthetic Media and Why It’s the Future of Digital Content

Synthetic media refers to content created or modified by AI—text, images, audio, and video. Instead of filming or recording in the physical world, content is generated in software, which reduces time and cost and allows for personalization at scale. It also raises important questions about accuracy, consent, and misuse.

The technology has matured quickly. Generative adversarial networks (GANs) started producing photorealistic images a decade ago, speech models made voices more natural, and transformers advanced language and multimodal generation. Alongside benefits, deepfakes, scams, and platform policy changes emerged. Organizations involved in training, communications, or localization can adopt this capability—but with clear rules and strong oversight.

A Quick Timeline of Synthetic Media’s Rise

- 2014: GANs enable photorealistic image synthesis.

- 2016: WaveNet models raw audio for more natural speech.

- 2017: Transformers unlock humanlike language and music; “deepfakes” gain attention on Reddit, with r/deepfakes banned in early 2018.

- 2020: Large-scale models like GPT-3 and Jukebox reach mainstream attention.

Platforms responded: major sites banned non-consensual deepfake porn in 2018–2019, and social networks rolled out synthetic media labels and stricter policies before the 2020 U.S. election.

The scale is significant. A Harvard Kennedy School Misinformation Review analysis found that 556 tweets containing AI-generated media amassed 1.5B+ views. Images dominated, but AI videos skewed political and drew higher median views.

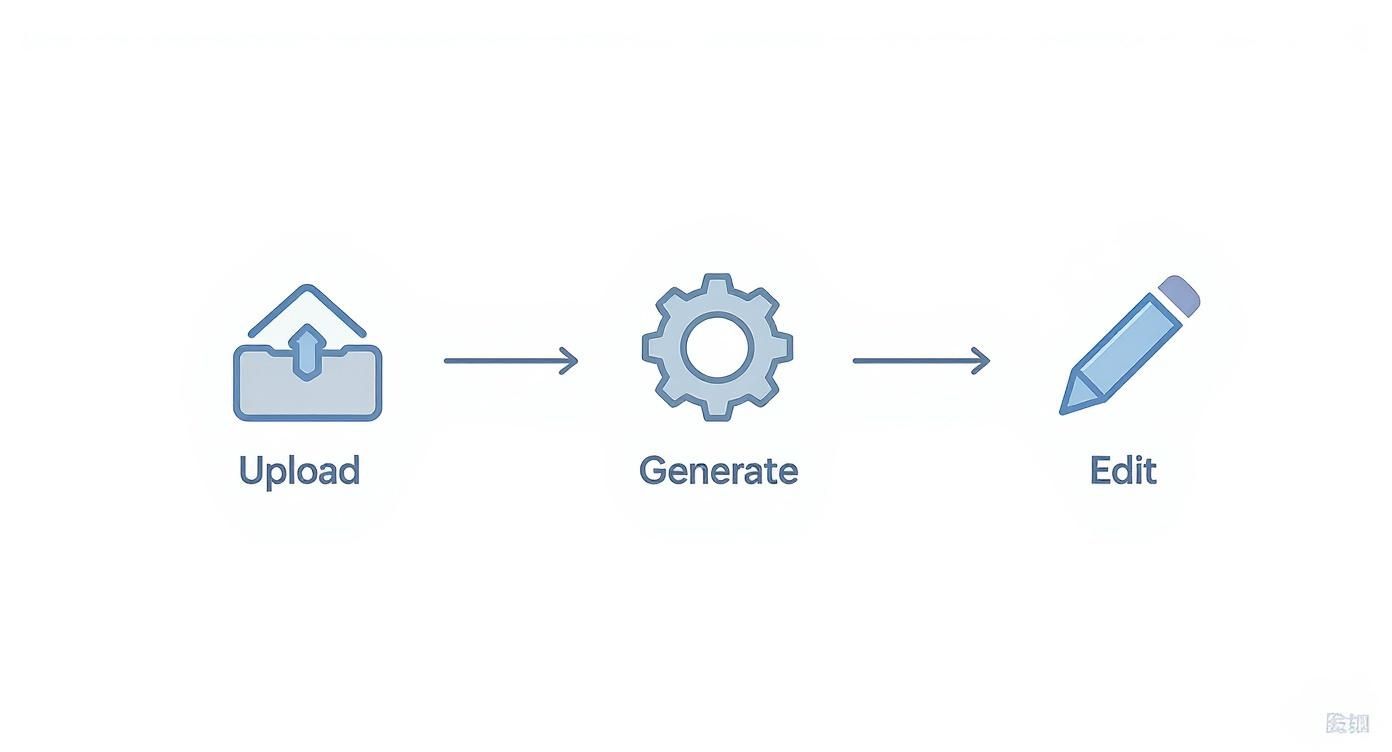

Production has also moved from studios to browsers. Tools like Doc2Video or Prompt2Video allow teams to upload a Word file or type a prompt to generate draft videos with scenes, visuals, and timing ready for refinement.

What Exactly Is Synthetic Media?

Synthetic media includes AI-generated or AI-assisted content. Common types:

- Synthetic video, images, voice, AI-generated text

- AI influencers, mixed reality, face swaps

Examples:

- Non-synthetic: a newspaper article with a staff photo

- Synthetic: an Instagram AR filter adding bunny ears, or a talking-head video created from a text script

Digital personas like Lil Miquela show the cultural impact of fully synthetic characters. Synthetic video can use customizable AI avatars or narration-only scenes. Stock voices or cloned voices (with consent) ensure consistent speakers, and Conversation Mode allows role-plays with multiple presenters in one scene.

Why Synthetic Media Is the Future of Digital Content

Speed and cost: AI enables faster production. For instance, one creator produced a 30-page children’s book in under an hour using AI tools. Video is following a similar trajectory, making high-quality effects accessible to small teams.

Personalization and localization: When marginal cost approaches zero, organizations can produce audience-specific variants by role, region, or channel.

Accessibility: UNESCO-backed guidance highlights synthetic audio, captions, real-time transcription, and instant multilingual translation for learners with special needs. VR/AR and synthetic simulations provide safe practice environments for complex tasks.

Practical production tools:

- Rapid drafts: Doc2Video converts dense PDFs and Word files into structured scenes.

- Localization: Instant Translation creates language variants while preserving layout and animation.

- Accessibility: Export SRT/VTT captions and audio-only versions; Pronunciations ensure correct terminology.

Practical Use Cases

Learning and Development

- Convert SOPs and handbooks into interactive training with quizzes and branching. Generative tools can help build lesson plans and simulations.

- Recommended tools: Doc2Video or PPT Import, Interaction for MCQs, Conversation Mode for role-plays, SCORM export, Analytics for plays and quiz scores.

Corporate Communications and Crisis Readiness

- Simulate risk scenarios, deliver multilingual updates, and standardize compliance refreshers. AI scams have caused real losses, including a €220,000 voice-cloning fraud and market-moving fake videos (Forbes overview).

- Recommended tools: Instant Avatars, Brand Kits, Workspace Management, Commenting for approvals.

Global Marketing and Localization

- Scale product explainers and onboarding across regions with automated lip-synced redubbing.

- Recommended tools: Instant Translation with multilingual voices, Pronunciations, Templates.

Education and Regulated Training

- Build scenario-based modules for healthcare or finance.

- Recommended tools: Branching for decision trees, Analytics, SCORM to track pass/fail.

Risk Landscape and Mitigation

Prevalence and impact are increasing. Two in three cybersecurity professionals observed deepfakes in business disinformation in 2022, and a small sample of AI-generated posts accumulated more than 1.5 billion views (Harvard analysis).

Detection methods include biological signals, phoneme–viseme mismatches, and frame-level inconsistencies. Intel’s FakeCatcher reports 96% real-time accuracy, while Google’s AudioLM classifier achieves ~99% accuracy. Watermarking and C2PA metadata help with provenance.

Governance recommendations: Follow the Partnership on AI’s Responsible Practices for Synthetic Media, which emphasize consent, disclosure, and transparency. Durable, tamper-resistant disclosure remains a research challenge. On the legal side, the UK Online Safety Bill criminalizes revenge porn (techUK summary).

Risk reduction strategies:

- Use in-video disclosures (text overlays or intro/end cards) stating content is synthetic.

- Enforce approval roles (admin/editor/viewer) and maintain Commenting threads as audit trails.

- Monitor Analytics for distribution anomalies.

- Add Pronunciations to prevent misreads of sensitive terms.

Responsible Adoption Playbook (30-Day Pilot)

Week 1: Scope and Governance

- Pick 2–3 training modules, write disclosure language, set workspace roles, create Brand Kit, add Pronunciations.

Week 2: Produce MVPs

- Use Doc2Video or PPT Import for drafts. Add MCQs, Conversation Mode, Templates, Avatars, Pauses, and Animation Markers.

Week 3: Localize and Test

- Create 1–2 language variants with Instant Translation. Check layout, timing, multilingual voices, accessibility (captions, audio-only).

Week 4: Deploy and Measure

- Export SCORM 1.2/2004, set pass marks, track plays, time, and scores. Collect feedback, iterate, finalize disclosure SOPs.

Measurement and ROI

- Production: time to first draft, reduced review cycles, cost per minute of video.

- Learning: completion rate, average quiz scores, branch choices.

- Localization: time to launch variants, pronunciation errors, engagement metrics.

- Governance: percent of content with disclosures, approval turnaround, incident rate.
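Several of the metrics above reduce to simple ratios, so it is worth fixing their definitions before the pilot starts. A minimal sketch follows; the function names are hypothetical and the numbers are illustrative placeholders, not measured results.

```python
from statistics import mean


def completion_rate(completed: int, enrolled: int) -> float:
    """Share of enrolled learners who finished, as a fraction of 1."""
    return completed / enrolled if enrolled else 0.0


def cost_per_minute(total_cost: float, video_seconds: float) -> float:
    """Production cost divided by finished runtime in minutes."""
    if video_seconds <= 0:
        raise ValueError("video_seconds must be positive")
    return total_cost / (video_seconds / 60)


# Illustrative numbers only, not measured results.
pilot = {
    "completion_rate": completion_rate(completed=42, enrolled=50),
    "avg_quiz_score": mean([80, 90, 70]),
    "cost_per_minute": cost_per_minute(total_cost=300.0, video_seconds=180),
}
print(pilot)
```

Computing the same figures for the previous, non-video version of a module gives you a baseline to compare against.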

Top Script Creator Tools to Write and Plan Your Videos Faster

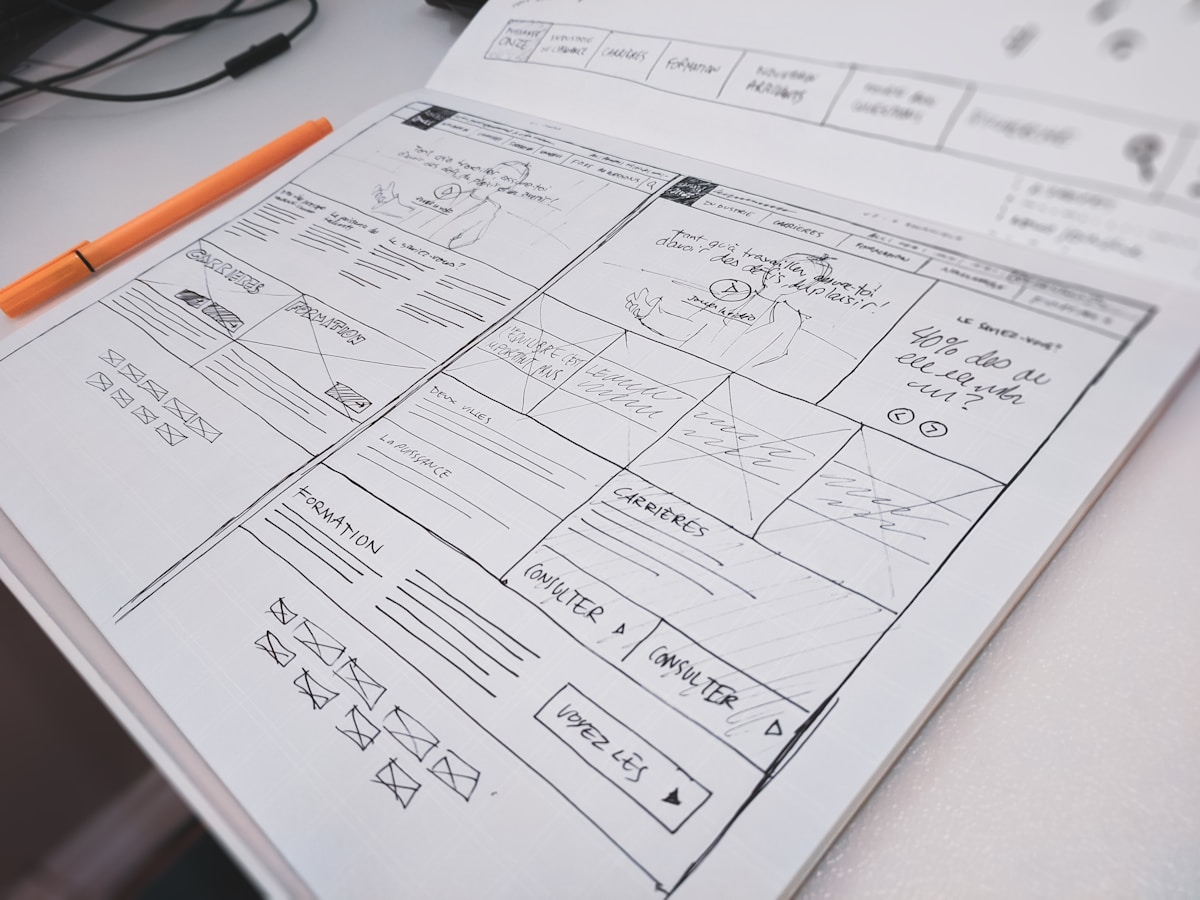

If your video projects stall at the scripting stage, modern AI script creators can draft, structure, and storyboard a script quickly before you hand it off to a video platform for production, analytics, and tracking.

Below is an objective, stats-backed roundup of top script tools, plus ways to plug scripts into Colossyan to generate on-brand training videos with analytics, branching, and SCORM export.

What to look for in a script creator

- Structure and coherence: scene and act support, genre templates, outline-to-script.

- Targeting and tone: platform outputs (YouTube vs TikTok), tones (serious, humorous), length controls.

- Collaboration and revisions: comments, versioning, and ownership clarity.

- Integrations and exports: easy movement of scripts into a video workflow.

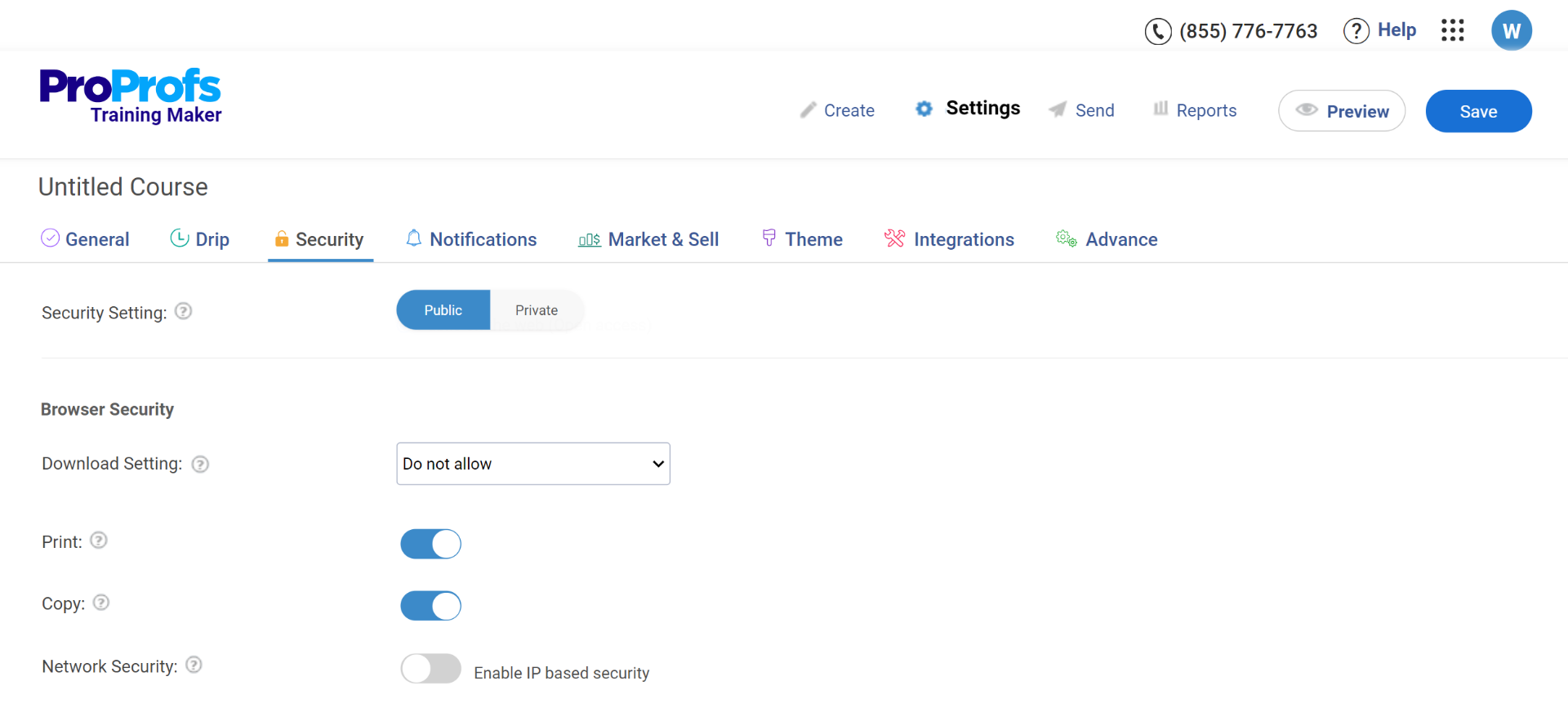

- Security and data policy: content ownership, training data usage.

- Multilingual capability: write once, adapt globally.

- Pacing and delivery: words-per-minute guidance and teleprompter-ready text.

Top script creator tools (stats, standout features, and example prompts)

1) Squibler AI Script Generator

Quick stat: 20,000 writers use Squibler AI Toolkit

Standout features:

- Free on-page AI Script Generator with unlimited regenerations; editable in the editor after signup.

- Storytelling-focused AI with genre templates; Smart Writer extends scenes using context.

- Output targeting for YouTube, TV shows, plays, Instagram Reels; tones include Humorous, Serious, Sarcastic, Optimistic, Objective.

- Users retain 100% rights to generated content.

- Prompt limit: max 3,000 words; cannot be empty.

Ideal for: Fast ideation and structured long-form or short-form scripts with coherent plot and character continuity.

Example prompt: “Write a serious, medium-length YouTube explainer on ‘Zero-Trust Security Basics’ with a clear 15-second hook, 3 key sections, and a 20-second summary.”

Integration with Colossyan: Copy Squibler’s scenes into Colossyan’s Editor, assign avatars, apply Brand Kits, and set animation markers for timing and emphasis. Export as SCORM with quizzes for tracking.

2) ProWritingAid Script Generator

Quick stat: 4+ million writers use ProWritingAid

Standout features:

- Free plan edits/runs reports on up to 500 words; 3 “Sparks” per day to generate scripts.

- Plagiarism checker scans against 1B+ web pages, published works, and academic papers.

- Integrations with Word, Google Docs, Scrivener, Atticus, Apple Notes; desktop app and browser extensions.

- Bank-level security; user text is not used to train algorithms.

Ideal for: Polishing and compliance-heavy workflows needing grammar, style, and originality checks.

Integration with Colossyan: Proof scripts for grammar and clarity in ProWritingAid, then add Pronunciations in Colossyan for niche terms. SCORM export lets your LMS track the results.

3) Teleprompter.com Script Generator

Quick stat: Since 2018, helped 1M+ creators record 17M+ videos

Standout guidance:

- Calibrated for ~150 WPM: 30s ≈ 75–80 words; 1 min ≈ 150–160; 3 min ≈ 450–480; 5 min ≈ 750–800; 10 min ≈ 1,500–1,600.

- Hooks in the first 3–5 seconds are critical.

- Platform tips: YouTube favors longer, value-driven scripts with CTAs; TikTok/IG Reels need instant hooks; LinkedIn prefers professional thought leadership.

- Teleprompter-optimized scripts include natural pauses, emphasis markers, and speaking-speed calculators.

Ideal for: On-camera delivery and precise pacing.

Integration with Colossyan: Use WPM to set word count. Add pauses and animation markers for emphasis, resize canvas for platform-specific formats (16:9 YouTube, 9:16 Reels).

4) Celtx

Quick stats: 4.4/5 average rating from 1,387 survey responses; trusted by 7M+ storytellers

Standout features:

- End-to-end workflow: script formatting (film/TV, theater, interactive), Beat Sheet, Storyboard, shot lists, scheduling, budgeting.

- Collaboration: comments, revision history, presence awareness.

- 7-day free trial; option to remain on free plan.

Ideal for: Teams managing full pre-production workflows.

Integration with Colossyan: Approved slides and notes can be imported; avatars, branching, and MCQs convert storyboards into interactive training.

5) QuillBot AI Script Generator

Quick stats: Trustpilot 4.8; Chrome extension 4.7/5; 5M+ users

Standout features:

- Free tier and Premium for long-form generation.

- Supports multiple languages; adapts scripts to brand tone.

Ideal for: Rapid drafting and tone adaptation across languages and channels.

Integration with Colossyan: Scripts can be localized with Instant Translation; multilingual avatars and voices allow versioning and layout tuning.

6) Boords AI Script Generator

Quick stats: Trusted by 1M+ video professionals; scripts in 18+ languages

Standout features:

- Script and storyboard generator, versioning, commenting, real-time feedback.

Ideal for: Agencies and teams wanting script-to-storyboard in one platform.

Integration with Colossyan: Approved scripts can be imported and matched to avatars and scenes; generate videos for each language variant.

7) PlayPlay AI Script Generator

Quick stats: Used by 3,000+ teams; +165% social video views reported

Standout features:

- Free generator supports EN, FR, DE, ES, PT, IT; outputs platform-specific scripts.

- Enables fast turnaround of high-volume social content.

Ideal for: Marketing and communications teams.

Integration with Colossyan: Scripts can be finalized for avatars, gestures, and brand layouts; engagement tracked via analytics.

Pacing cheat sheet: word counts for common video lengths

Based on Teleprompter.com's ~150 WPM guidance (the upper bounds work out to about 160 WPM):

- 30 seconds: 75–80 words

- 1 minute: 150–160 words

- 2 minutes: 300–320 words

- 3 minutes: 450–480 words

- 5 minutes: 750–800 words

- 10 minutes: 1,500–1,600 words
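
The ranges above are simple arithmetic, so you can compute a budget for any length. Here is a minimal sketch, assuming the lower bound uses 150 WPM and the upper bound about 160 WPM (which is what the listed ranges imply). The function name `word_budget` is illustrative, not part of any tool mentioned here.

```python
def word_budget(duration_seconds: float, low_wpm: int = 150, high_wpm: int = 160) -> tuple[int, int]:
    """Return a (min_words, max_words) range for a script of the given length."""
    minutes = duration_seconds / 60
    return round(minutes * low_wpm), round(minutes * high_wpm)


# Reproduce the cheat sheet for the common lengths listed above.
for seconds in (30, 60, 120, 180, 300, 600):
    low, high = word_budget(seconds)
    print(f"{seconds:>4}s: {low}-{high} words")
```

If your narrator or avatar voice runs slower or faster, pass different `low_wpm` and `high_wpm` values rather than editing the script to fit a fixed number.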

From script to finished video: sample workflows in Colossyan

Workflow A: Policy training in under a day

- Draft: Script created in Squibler with a 15-second hook and 3 sections

- Polish: Grammar and originality checked in ProWritingAid

- Produce: Scenes built in Colossyan with avatar, Brand Kit, MCQs

- Measure: Analytics tracks plays, time watched, and quiz scores; export CSV for reporting

Workflow B: Scenario-based role-play for sales

- Outline: Beats and dialogue in Celtx with approval workflow

- Script: Alternate endings generated in Squibler Smart Writer for branching

- Produce: Conversation Mode in Colossyan with avatars, branching, and gestures

- Localize: Spanish variant added with Instant Translation

Workflow C: On-camera style delivery without filming

- Draft: Teleprompter.com script (~300 words for 2 min)

- Produce: Clone SME voice, assign avatar, add pauses and animation markers

- Distribute: Embed video in LMS, track retention and quiz outcomes

L&D-specific tips: compliance, localization, and reporting

- Brand Kits ensure consistent fonts/colors/logos across departments

- Pronunciations maintain accurate terminology

- Multi-language support via QuillBot or Boords + Instant Translation

- SCORM export enables pass marks and LMS analytics

- Slide/PDF imports convert notes into narration; avatars and interactive elements enhance learning

Quick picks by use case

- Story-first scripts: Squibler

- Grammar/style/originality: ProWritingAid

- Pacing and delivery: Teleprompter.com

- Full pre-production workflow: Celtx

- Multilingual drafting: QuillBot

- Quick browser ideation: Colossyan

- Script-to-storyboard collaboration: Boords

- Social platform-specific: PlayPlay

A Complete Guide to eLearning Software Development in 2025

eLearning software development in 2025 blends interoperable standards (SCORM, xAPI, LTI), cloud-native architectures, AI-driven personalization, robust integrations (ERP/CRM/HRIS), and rigorous security and accessibility to deliver engaging, measurable training at global scale—often accelerated by AI video authoring and interactive microlearning.

The market is big and getting bigger. The global eLearning market is projected to reach about $1T by 2032 (14% CAGR). Learners want online options: 73% of U.S. students favor online classes, and Coursera learners grew 438% over five years. The ROI is strong: eLearning can deliver 120–430% annual ROI, cut learning costs by 20–50%, boost productivity by 30–60%, and improve knowledge retention by 25–60%.

This guide covers strategy, features, standards, architecture, timelines, costs, tools, analytics, localization, and practical ways to accelerate content—plus where an AI video layer helps.

2025 Market Snapshot and Demand Drivers

Across corporate training, K-12, higher ed, and professional certification, the drivers are clear: upskilling at scale, mobile-first learning, and cloud-native platforms that integrate with the rest of the stack. Demand clusters around AI personalization, VR/AR, gamification, and virtual classrooms—alongside secure, compliant data handling.

- Interoperability is the baseline. SCORM remains the most widely adopted, xAPI expands tracking beyond courses, and LTI connects tools to LMS portals.

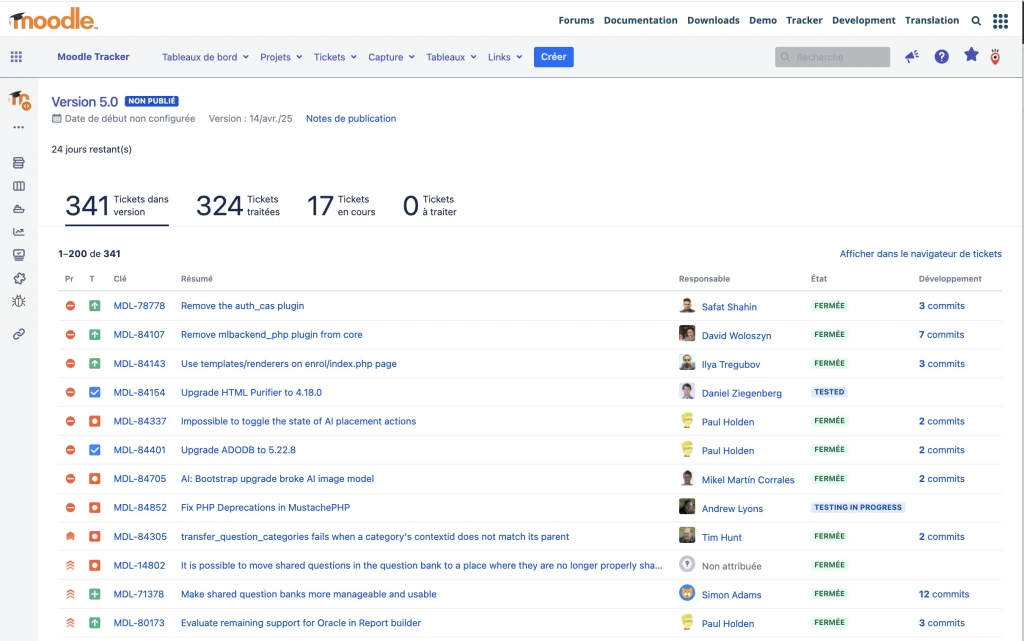

- Real-world scale is proven. One global SaaS eLearning platform runs with 2M+ active users, supports SCORM, xAPI, LTI, AICC, and cmi5, and serves enterprise brands like Visa and PepsiCo (a vendor case study cited in the same source).

- Enterprise training portals work. A Moodle-based portal at a major fintech was “highly rated” by employees, proving that well-executed LMS deployments can drive adoption (Itransition’s client example).

On the compliance side, expect GDPR, HIPAA, FERPA, COPPA, SOC 2 Type II, and WCAG accessibility as table stakes in many sectors.

Business Case and ROI (with Examples)

The economics still favor eLearning. Industry benchmarks show 120–430% annual ROI, 20–50% cost savings, 30–60% productivity gains, and 25–60% better retention. That’s not surprising if you replace live sessions and travel with digital training and analytics-driven iteration.
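
To sanity-check a benchmark like this against your own numbers, the usual ROI formula is net gain divided by cost. Here is a minimal sketch; the dollar figures are hypothetical placeholders, not data from any source cited here.

```python
def annual_roi_percent(annual_benefit: float, annual_cost: float) -> float:
    """ROI as a percentage: net gain divided by cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_benefit - annual_cost) / annual_cost * 100


# Hypothetical figures for illustration only.
program_cost = 50_000       # platform, content, and admin time
program_benefit = 150_000   # e.g., avoided travel and instructor costs
print(f"ROI: {annual_roi_percent(program_benefit, program_cost):.0f}%")
```

With these made-up inputs the result is 200%, which sits inside the 120–430% range quoted above. The hard part in practice is agreeing on what counts as benefit, not the arithmetic.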

A few proof points:

- A custom replacement for a legacy Odoo-based LMS/ERP/CRM cut DevOps expenses by 10%.

- A custom conference learning platform cut infrastructure costs by 3x.

- In higher ed, 58% of universities use chatbots to handle student questions, and a modernization program across 76 dental schools delivered faster decisions through real-time data access (same source).

Where I see teams lose money: content production. Building videos, translations, and updates often eats the budget. This is where we at Colossyan help. We convert SOPs, PDFs, and slide decks into interactive training videos fast using Doc2Video and PPT import. We export SCORM 1.2/2004 with pass marks so your LMS tracks completion and scores. Our analytics (plays, time watched, quiz averages) close the loop so you can edit scenes and raise pass rates without re-recording. That shortens payback periods because you iterate faster and cut production costs.

Must-Have eLearning Capabilities (2025 Checklist)

Content Creation and Management

- Multi-format authoring, reusable assets, smart search, compliance-ready outputs.

- At scale, you need templates, brand control, central assets, and translation workflows.

Colossyan fit: We use templates and Brand Kits for a consistent look. The Content Library holds shared media. Pronunciations fix tricky product terms. Voices can be cloned for brand-accurate narration. Our AI assistant helps refine scripts. Add MCQs and branching for interactivity, and export captions for accessibility.

Administration and Delivery

- Multi-modal learning (asynchronous, live, blended), auto-enrollment, scheduling, SIS/HRIS links, notifications, learning paths, and proctoring-sensitive flows where needed.

Colossyan fit: We create the content layer quickly. You then export SCORM 1.2/2004 with pass criteria for clean LMS tracking and delivery.
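
For context on what "pass criteria" means to the LMS: a SCORM 1.2 package reports a score and a lesson status through a small data model. The sketch below only illustrates that logic. The element names (`cmi.core.score.raw`, `cmi.core.lesson_status`) come from SCORM 1.2, but the function `scorm12_result` is hypothetical, and how any given authoring tool actually sets these values is up to that tool.

```python
def scorm12_result(score_raw: float, pass_mark: float) -> dict[str, str]:
    """Map a quiz score (0-100) to the SCORM 1.2 values an LMS would record."""
    if not 0 <= score_raw <= 100:
        raise ValueError("score_raw must be between 0 and 100")
    # A learner at or above the pass mark is reported as "passed".
    status = "passed" if score_raw >= pass_mark else "failed"
    return {
        "cmi.core.score.raw": str(score_raw),
        "cmi.core.score.min": "0",
        "cmi.core.score.max": "100",
        "cmi.core.lesson_status": status,
    }


print(scorm12_result(85, pass_mark=80))
print(scorm12_result(60, pass_mark=80))
```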

Social and Engagement

- Profiles, communities, chats or forums, gamification, interaction.

Colossyan fit: Conversation Mode simulates role plays with multiple avatars. Branching turns policy knowledge into decisions, not just recall.

Analytics and Reporting

- User history, predictions, recommendations, assessments, compliance reporting.

Colossyan fit: We provide video-level analytics (plays, time watched, average scores) and CSV exports you can merge with LMS/xAPI data.

Integrations and System Foundations

- ERP, CRM (e.g., Salesforce), HRIS, CMS/KMS/TMS, payments, SSO, video conferencing; scalable, secure, cross-device architecture.

Colossyan fit: Our SCORM packages and embeddable links drop into your existing ecosystem. Multi-aspect-ratio output supports mobile and desktop.

Standards and Compliance (How to Choose)

Here’s the short version:

- SCORM is the universal baseline for packaging courses and passing completion/score data to an LMS.

- xAPI (Tin Can) tracks granular activities beyond courses—simulations, informal learning, performance support.

- LTI is the launch protocol used by LMSs to integrate external tools, common in higher ed.

- cmi5 (and AICC) show up in specific ecosystems but are less common.
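
To make the xAPI point concrete: a statement is a small JSON document with an actor, a verb, and an object, plus an optional result. Here is a minimal sketch that builds one as a Python dict. The learner, activity ID, and activity name are hypothetical, and sending the statement to an LRS (authentication, endpoint, version header) is left out.

```python
import json


def build_statement(email: str, name: str, activity_id: str,
                    activity_name: str, scaled_score: float, passed: bool) -> dict:
    """Build a minimal xAPI 'completed' statement as a plain dict."""
    return {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {
            "score": {"scaled": scaled_score},  # xAPI scaled scores run from -1 to 1
            "success": passed,
            "completion": True,
        },
    }


# Hypothetical learner and activity, for illustration only.
statement = build_statement(
    email="learner@example.com",
    name="Example Learner",
    activity_id="https://example.com/activities/forklift-simulation",
    activity_name="Forklift simulation",
    scaled_score=0.9,
    passed=True,
)
print(json.dumps(statement, indent=2))
```

Because the object is just an ID you define, the same shape works for simulations, job aids, or on-the-job checklists, which is why xAPI reaches beyond packaged courses.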

Leading vendors support a mix of SCORM, xAPI, and often LTI (market overview). For compliance, consider GDPR, HIPAA, FISMA, FERPA, COPPA, and WCAG/ADA accessibility. Don’t cut corners on captions, keyboard navigation, and color contrast.

Colossyan fit: We export SCORM 1.2 and 2004 with completion and pass criteria. We also export SRT/VTT captions to help you meet accessibility goals inside your LMS.
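
If a player in your stack only accepts WebVTT and you have an SRT file on hand, the conversion is mostly mechanical: add the `WEBVTT` header and switch the millisecond separator from a comma to a period. Here is a minimal sketch, assuming well-formed SRT input. The helper `srt_to_vtt` is illustrative and does not handle styling tags or byte-order marks.

```python
import re

# Matches the "HH:MM:SS,mmm" timestamps used in SRT cue timing lines.
_TIMESTAMP = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Convert SRT caption text to WebVTT."""
    lines = srt_text.replace("\r\n", "\n").strip().split("\n")
    converted = [
        # Only rewrite timing lines so commas in the caption text are untouched.
        _TIMESTAMP.sub(r"\1.\2", line) if "-->" in line else line
        for line in lines
    ]
    return "WEBVTT\n\n" + "\n".join(converted) + "\n"


sample = """1
00:00:01,000 --> 00:00:04,000
Welcome to the safety briefing.

2
00:00:04,500 --> 00:00:07,250
Gloves, goggles, and boots are required on site.
"""
print(srt_to_vtt(sample))
```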

Architecture and Integrations (Reference Design)

A modern reference design looks like this:

- Cloud-first; single-tenant or multi-tenant; microservices; CDN delivery; event-driven analytics; encryption in transit and at rest; SSO via SAML/OAuth; role-based access.

- Integrations with ERP/CRM/HRIS for provisioning and reporting; video conferencing (Zoom/Teams/WebRTC) for live sessions; SSO; payments and ecommerce where needed; CMS/KMS.

- Mobile performance tuned for low bandwidth; responsive design; offline options; caching; localization variants.

In practice, enterprise deployments in both corporate and higher ed stacks standardize SCORM/xAPI/LTI handling and SSO into Teams/Zoom, so plan for those integrations from the start.

Colossyan fit: We are the content layer that plugs into your LMS or portal. Enterprise workspaces, foldering, and commenting help you govern content and speed approvals.

Advanced Differentiators to Stand Out

Differentiators that actually matter:

- AI for content generation, intelligent tutoring, predictive analytics, and automated grading (where the data supports it).

- VR/XR/AR for high-stakes simulation training.

- Wearables and IoT for experiential learning data.

- Gamified simulations and big data-driven personalization at scale.

- Strong accessibility, including WCAG and multilingual support.

Examples from the tool landscape: Captivate supports 360°/VR; some vendors tout SOC 2 Type II for enterprise confidence and run large brand deployments (see ELB Learning references in the same market overview).

Colossyan fit: We use AI to convert documents and prompts into video scenes with avatars (Doc2Video/Prompt2Video). Instant Translation produces multilingual variants fast, and multilingual or cloned voices keep brand personality consistent. Branching + MCQs create adaptive microlearning without custom code.

Tooling Landscape: Authoring Tools vs LMS vs Video Platforms

For first-time creators, this is a common confusion: authoring tools make content; LMSs host, deliver, and report; video platforms add rich media and interactivity.

A Reddit thread shows how often people blur the lines and get stuck comparing the wrong things; the advice there is to prioritize export and tracking standards and to separate authoring vs hosting decisions (community insight).

Authoring Tool Highlights

- Elucidat is known for scale and speed; best-practice templates can be up to 4x faster. It has strong translation/variation control.

- Captivate offers deep simulations and VR; it’s powerful but often slower and more desktop-centric.

- Storyline 360 and Rise 360 are widely adopted; Rise is fast and mobile-first; Storyline offers deeper interactivity with a steeper learning curve. Some support cmi5 exports.

- Gomo, DominKnow, iSpring, Easygenerator, Evolve, and Adapt vary in collaboration, translation workflows, analytics, and mobile optimization.

- Articulate’s platform emphasizes AI-assisted creation and 80+ language localization across an integrated creation-to-distribution stack.

Where Colossyan fits: We focus on AI video authoring for L&D. We turn documents and slides into avatar-led videos with brand kits, interactions, instant translation, SCORM export, and built-in analytics. If your bottleneck is “we need engaging, trackable video content fast,” that’s where we help.

Timelines, Costs, and Delivery Models

Timelines

- MVPs land in 1–5 months (4–6 months if you add innovative components). SaaS release cadence is every 2–6 weeks, with hotfixes potentially several times/day.

- Full custom builds can run several months to 12+ months.

Cost Drivers

- The number of modules, interactivity depth, integrations, security/compliance, accessibility, localization, and data/ML scope drive cost. As rough benchmarks: MVPs at $20k–$50k, full builds up to ~$150k, maintenance around $5k–$10k/year depending on complexity and region. Time-to-value can be quick when you scope for an MVP and phase features.

Delivery Models

- Time & Material gives you prioritization control.

- Dedicated Team improves comms and consistency across sprints.

- Outstaffing adds flexible capacity. Many teams mix these models by phase.

Colossyan acceleration: We compress content production. Turning existing docs and slides into interactive microlearning videos frees your engineering budget for platform features like learning paths, proctoring, and SSO.

Security, Privacy, and Accessibility

What I consider baseline:

- RBAC, SSO/SAML/OAuth, encryption (TLS in transit, AES-256 at rest), audit logging, DPA readiness, data minimization, retention policies, secure media delivery with tokenized URLs, and thorough WCAG AA practices (captions, keyboard navigation, contrast).
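
"Tokenized URLs" usually means a media link that carries an expiry time and a signature, so it stops working after a short window and can't be edited to point at another file. Here is a minimal sketch of the idea using HMAC-SHA256. The query parameter names, the `path:expires` message format, and the example URL are assumptions for illustration; a real CDN has its own signing scheme that you should follow instead.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse


def _signature(secret: bytes, path: str, expires: int) -> str:
    """HMAC over the path and expiry so neither can be changed unnoticed."""
    return hmac.new(secret, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


def sign_url(base_url: str, secret: bytes, ttl_seconds: int = 300,
             now: Optional[float] = None) -> str:
    """Append an expiry timestamp and signature to a media URL."""
    expires = int((time.time() if now is None else now) + ttl_seconds)
    token = _signature(secret, urlparse(base_url).path, expires)
    return f"{base_url}?{urlencode({'expires': expires, 'token': token})}"


def verify_url(url: str, secret: bytes, now: Optional[float] = None) -> bool:
    """Accept the URL only if it is unexpired and the signature matches."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        token = params["token"][0]
    except (KeyError, ValueError):
        return False
    if (time.time() if now is None else now) > expires:
        return False
    expected = _signature(secret, parsed.path, expires)
    # Constant-time comparison avoids leaking how much of the token matched.
    return hmac.compare_digest(token, expected)


# Hypothetical secret and URL, for illustration only.
url = sign_url("https://cdn.example.com/videos/intro.mp4", b"demo-secret", ttl_seconds=60)
print(url, verify_url(url, b"demo-secret"))
```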

Build to the highest bar your sector demands: GDPR/HIPAA/FERPA/COPPA, and SOC 2 Type II where procurement requires it.

Colossyan contribution: We supply accessible learning assets with captions files and package SCORM so you inherit LMS SSO, storage, and reporting controls.

Analytics and Measurement

Measurement separates compliance from impact. A good analytics stack lets you track:

- Completion, scores, pass rates, and time spent.

- Retention, application, and behavioral metrics.

- Correlations with safety, sales, or performance data.

- Learning pathway and engagement heatmaps.

Benchmarks:

- 80% of companies plan to increase L&D analytics spending.

- High-performing companies are 3x more likely to use advanced analytics.

Recommended Analytics Layers

- Operational (LMS-level): completion, pass/fail, user activity.

- Experience (xAPI/LRS): behavior beyond courses, simulation data, real-world performance.

- Business (BI dashboards): tie learning to outcomes—safety rates, sales metrics, compliance KPIs.

Colossyan fit: Our analytics report plays, completion, time watched, and quiz performance. CSV export lets you combine video engagement with LMS/xAPI/LRS data. That gives you a loop to iterate on scripts and formats.
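
Combining the two exports is a plain join on a shared learner identifier. Here is a minimal sketch using only the standard library. The column names (`learner_email`, `time_watched_seconds`, `quiz_score`, `completion_status`) are assumptions for illustration; check the headers in your actual exports before relying on them.

```python
import csv
import io


def merge_by_learner(video_csv: str, lms_csv: str, key: str = "learner_email") -> list[dict]:
    """Left-join video engagement rows with LMS rows on a shared key."""
    lms_rows = {row[key]: row for row in csv.DictReader(io.StringIO(lms_csv))}
    merged = []
    for row in csv.DictReader(io.StringIO(video_csv)):
        # Learners missing from the LMS export keep only their video columns.
        merged.append({**lms_rows.get(row[key], {}), **row})
    return merged


# Hypothetical exports, for illustration only.
video_export = """learner_email,time_watched_seconds,quiz_score
a@example.com,180,90
b@example.com,45,60
"""
lms_export = """learner_email,completion_status
a@example.com,completed
"""

for row in merge_by_learner(video_export, lms_export):
    print(row)
```

A learner who watched 45 seconds and has no LMS completion record is exactly the kind of row worth looking at when you decide which scenes to rewrite.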

Localization and Accessibility

Accessibility and localization are inseparable in global rollouts.

Accessibility

Follow WCAG 2.1 AA as a baseline. Ensure:

- Keyboard navigation

- Closed captions (SRT/VTT)

- High-contrast and screen-reader–friendly design

- Consistent heading structures and alt text
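
"High-contrast" is testable. WCAG defines a contrast ratio from the relative luminance of two colors, and AA asks for at least 4.5:1 for normal text (3:1 for large text). Here is a minimal sketch of that calculation for six-digit hex colors; the function names are illustrative.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical) to 21:1 (black on white)."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the AA threshold: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


# Two nearly identical grays on white land on opposite sides of the threshold.
for color in ("#767676", "#777777"):
    print(color, round(contrast_ratio(color, "#FFFFFF"), 2), passes_aa(color, "#FFFFFF"))
```

Running brand colors through a check like this before locking a template is cheaper than fixing captions and slide text after an accessibility review.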

Localization

- Translate not just on-screen text, but also narration, assessments, and interfaces.

- Use multilingual glossaries and brand voice consistency.

- Plan for right-to-left (RTL) languages and UI mirroring.

Colossyan fit: Instant Translation creates fully localized videos with multilingual avatars and captions in one click. You can produce Spanish, French, German, or Mandarin versions instantly while maintaining timing and brand tone.

Common Challenges and How to Solve Them

Case Studies

1. Global Corporate Training Platform

A multinational built a SaaS LMS supporting 2M+ active users, SCORM/xAPI/LTI, and multi-tenant architecture—serving brands like Visa, PepsiCo, and Oracle (market source).

Results: High reliability, compliance-ready, enterprise-grade scalability.

2. Fintech Learning Portal

A Moodle-based portal for internal training and certifications—employees rated it highly for usability and structure (Itransition example).

Results: Improved adoption and measurable skill progression.

3. University Chatbots and Dashboards

A modernization program across 76 dental schools delivered faster decisions through real-time data access, while chatbots now handle student questions at 58% of universities (Chetu data).

Results: Faster student response times and reduced admin load.

Microlearning, AI, and the Future of Training

The future is faster iteration and AI-enabled creativity. In corporate learning, high-performing teams will:

- Generate content automatically from internal docs and SOPs.

- Localize instantly.

- Adapt learning paths dynamically using analytics.

- Tie everything to business metrics via LRS/BI dashboards.

Colossyan fit: We are the “AI layer” that makes this real—turning any text or slide deck into ready-to-deploy microlearning videos with avatars, quizzes, and SCORM tracking, in minutes.

Implementation Roadmap

Even with a strong platform, the rollout determines success. Treat it like a product launch, not an IT project.

Phase 1: Discovery and Mapping (Weeks 1–2)

- Inventory current training assets, policies, and SOPs.

- Map compliance and role-based training requirements.

- Define SCORM/xAPI and analytics targets.

- Identify translation or accessibility gaps.

Phase 2: Baseline Launch (Weeks 3–6)

- Deploy OSHA 10/30 or other core baseline courses.

- Add Focus Four or job-specific safety modules.

- Pilot SCORM tracking and reporting dashboards.

Phase 3: Role-Specific Depth (Weeks 7–10)

- Add targeted programs—forklift, heat illness prevention, HAZWOPER, healthcare safety, or environmental modules.

- Translate and localize high-priority materials.

- Automate enrollments via HRIS/SSO integration.

Phase 4: Continuous Optimization (Weeks 11–12 and beyond)

- Launch refreshers and microlearning updates.

- Review analytics and adjust content frequency.

- Embed performance metrics into dashboards.

Colossyan tip: Use Doc2Video for SOPs, policies, and manuals—each can become a 3-minute microlearning video that fits easily into your LMS. Export as SCORM, track completions, and measure engagement without extra engineering.

Procurement and Budgeting

Most organizations combine prebuilt and custom components. Reference pricing from reputable vendors:

- OSHA Education Center: save up to 40%.

- ClickSafety: OSHA 10 for $89, OSHA 30 for $189, NYC SST 40-hour Worker for $391.

- OSHA.com: OSHA 10 for $59.99, OSHA 30 for $159.99, HAZWOPER 40-hour for $234.99.

Use these as benchmarks for blended budgets. Allocate separately for:

- Platform licensing and hosting.

- Authoring tools or AI video creation (e.g., Colossyan).

- SCORM/xAPI tracking and reporting.

- Translation, accessibility, and analytics.

Measuring Impact

Track impact through measurable business indicators:

- Safety: TRIR/LTIR trends, incident reduction.

- Efficiency: time saved vs. in-person sessions.

- Engagement: completions, quiz scores, time on task.

- Business results: faster onboarding, fewer compliance violations.

Proof: ClickSafety cites a client whose incident rates fell to under one-third of national averages, plus savings of at least three days of jobsite time per OSHA 10 participant.

Colossyan impact: We see clients raise pass rates 10–20%, compress training build time by up to 80%, and reduce translation turnaround from weeks to minutes.

Essential Employee Safety Training Programs for a Safer Workplace

Compliance expectations are rising. More states and industries now expect OSHA training, and high-hazard work is under closer scrutiny. The old approach—one annual course and a slide deck—doesn’t hold up. You need a core curriculum for everyone, role-based depth for risk, and delivery that scales without pulling people off the job for days.

This guide lays out a simple blueprint. Start with OSHA 10/30 to set a baseline. Add targeted tracks like Focus Four, forklifts, HAZWOPER, EM 385-1-1, heat illness, and healthcare safety. Use formats that are easy to access, multilingual, and trackable. Measure impact with hard numbers, not vibes.

I’ll also show where I use Colossyan to turn policy PDFs and SOPs into interactive video that fits into SCORM safety training and holds up in audits.

The compliance core every employer needs

Start with OSHA-authorized training. OSHA 10 is best for entry-level workers and those without specific safety duties. OSHA 30 suits supervisors and safety roles. Reputable online providers offer self-paced access on any device with narration, quizzes, and real case studies. You can usually download a completion certificate right away, and the official DOL OSHA card arrives within about two weeks. Cards don’t expire, but most employers set refreshers every 3–5 years.

Good options and proof points:

- OSHA Education Center: Their online 30-hour course includes narration, quizzes, and English/Spanish options, with bulk discounts. Promos can be meaningful (the site advertises savings of up to 40%), and they cite 84,000+ reviews.

- OSHA.com: Clarifies there’s no “OSHA certification.” You complete Outreach training and get a DOL card. Current discounted prices are $59.99 for OSHA 10 and $159.99 for OSHA 30, and DOL cards arrive in about two weeks.

- ClickSafety: Reports clients saving at least 3 days of jobsite time by using online OSHA 10 instead of in-person.

How to use Colossyan to deliver

- Convert policy PDFs and manuals into videos via Doc2Video or PPT import.

- Add interactive quizzes, export SCORM packages, and track completion metrics.

- Use Instant Translation and multilingual voices for Spanish OSHA training.

High-risk and role-specific programs to prioritize

Construction hazards and Focus Four

Focus Four hazards—falls, caught-in/between, struck-by, and electrocution—cause most serious incidents in construction. OSHAcademy offers Focus Four modules (806–809) and a bundle (812), plus fall protection (714/805) and scaffolding (604/804/803).

Simple Focus Four reference:

- Falls: edges, holes, ladders, scaffolds

- Caught-in/between: trenching, pinch points, rotating parts

- Struck-by: vehicles, dropped tools, flying debris

- Electrocution: power lines, cords, GFCI, lockout/tagout

Forklifts (Powered Industrial Trucks)

OSHAcademy’s stack shows the path: forklift certification (620), Competent Person (622), and Program Management (725).

Role progression:

- Operator: pre-shift inspection, load handling, site rules

- Competent person: evaluation, retraining

- Program manager: policies, incident review

HAZWOPER

Exposure determines hours: 40-hour for highest risk, 24-hour for occasional exposure, and 8-hour for the refresher.

From OSHA.com:

OSHAcademy has a 10-part General Site Worker pathway (660–669) plus an 8-hour refresher (670).

EM 385-1-1 (Military/USACE)

Required on USACE sites. OSHAcademy covers the 2024 edition in five courses (510–514).

Checklist:

- Confirm contract, record edition

- Map job roles to chapters

- Track completions and store certificates

Heat Illness Prevention

OSHAcademy provides separate tracks for employees (645) and supervisors (646).

Healthcare Safety

OSHAcademy includes:

- Bloodborne Pathogens (655, 656)

- HIPAA Privacy (625)

- Safe Patient Handling (772–774)

- Workplace Violence (720, 776)

Environmental and Offshore

OSHAcademy offers Environmental Management Systems (790), Oil Spill Cleanup (906), SEMS II (907), and Offshore Safety (908–909).

Build a competency ladder

From awareness to leadership—OSHAcademy’s ladder moves from “Basic” intros like PPE (108) and Electrical (115) up to 700-/800-series leadership courses. Add compliance programs like Recordkeeping (708) and Working with OSHA (744).

Proving impact

Track:

- TRIR/LTIR trends

- Time saved vs. in-person

- Safety conversation frequency

ClickSafety cites results: one client’s incident rates dropped to under one-third of national averages, and the client saved at least 3 days per OSHA 10 participant.
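
To track TRIR and LTIR trends yourself, both use the same standard formula: incidents multiplied by 200,000, divided by total hours worked. The 200,000 base represents 100 full-time workers at 40 hours a week for 50 weeks. Here is a minimal sketch; the incident counts and hours are hypothetical.

```python
def incident_rate(incident_count: int, hours_worked: float) -> float:
    """Incidents per 100 full-time workers per year (200,000-hour base)."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return incident_count * 200_000 / hours_worked


# Hypothetical year: 500,000 hours worked across the company.
hours = 500_000
trir = incident_rate(4, hours)   # 4 recordable incidents
ltir = incident_rate(1, hours)   # 1 of them involved lost time
print(f"TRIR: {trir:.2f}  LTIR: {ltir:.2f}")
```

Compute the rate per quarter or per site, then line it up against training completion dates to see whether the trend moves after a rollout.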

Delivery and accessibility

Online, self-paced courses suit remote crews. English/Spanish options are common. Completion certificates are immediate; DOL cards arrive within two weeks.

ClickSafety offers 500+ online courses and has 25 years in the industry.

Budgeting and procurement

Published prices and discounts:

- OSHA Education Center: save up to 40%

- ClickSafety: OSHA 30 Construction $189, OSHA 10 $89, NYC SST 40-hr Worker $391

- OSHA.com: OSHA 10 $59.99, OSHA 30 $159.99, HAZWOPER 40-hr $234.99
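
Those list prices make it easy to rough out a line item before you ask for quotes. Here is a minimal sketch using the published prices above; the headcounts are hypothetical, and bulk discounts or promos are not modeled.

```python
from decimal import Decimal

# Per-seat prices as listed above.
PUBLISHED_PRICES = {
    "OSHA.com": {
        "OSHA 10": Decimal("59.99"),
        "OSHA 30": Decimal("159.99"),
        "HAZWOPER 40-hr": Decimal("234.99"),
    },
    "ClickSafety": {
        "OSHA 10": Decimal("89"),
        "OSHA 30": Decimal("189"),
        "NYC SST 40-hr Worker": Decimal("391"),
    },
}


def estimate_cost(provider: str, headcount_by_course: dict[str, int]) -> Decimal:
    """Total list-price cost for the given number of seats per course."""
    prices = PUBLISHED_PRICES[provider]
    return sum(
        (prices[course] * seats for course, seats in headcount_by_course.items()),
        Decimal("0"),
    )


# Hypothetical crew: 40 workers on OSHA 10 and 5 supervisors on OSHA 30.
crew = {"OSHA 10": 40, "OSHA 30": 5}
for provider in PUBLISHED_PRICES:
    print(f"{provider}: ${estimate_cost(provider, crew)}")
```

`Decimal` keeps the totals exact to the cent, which matters once the numbers go into a purchase request.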

90-day rollout plan

Weeks 1–2: Assess and map

Weeks 3–6: Launch OSHA 10/30 + Focus Four

Weeks 7–10: Add role tracks (forklift, heat illness)

Weeks 11–12: HAZWOPER refreshers, healthcare, environmental, and micro-videos

Best AI Video Apps for Effortless Content Creation in 2025

The best AI video app depends on what you’re making: social clips, cinematic shots, or enterprise training. Tools vary a lot on quality, speed, lip-sync, privacy, and pricing. Here’s a practical guide with clear picks, real limits, and workflows that actually work. I’ll also explain when it makes sense to use Colossyan for training content you need to track and scale.

What to look for in AI video apps in 2025

Output quality and control

Resolution caps are common. Many tools are 1080p only. Veo 2 is the outlier with 4K up to 120 seconds. If you need 4K talking heads, check this first.

Lip-sync is still hit-or-miss. Many generative apps can’t reliably sync mouth movement to speech. For example, InVideo’s generative mode lacks lip-sync and caps at HD, which is a problem for talking-head content.

Camera controls matter for cinematic shots. Kling, Runway, Veo 2, and Adobe Firefly offer true pan/tilt/zoom. If you need deliberate camera movement, pick accordingly.

Reliability and speed

Expect waits and occasional hiccups. Kling’s free plan took ~3 hours in a busy period; Runway often took 10–20 minutes. InVideo users report crashes and buggy playback at times. PixVerse users note credit quirks.

Pricing and credit models

Weekly subs and hard caps are common, especially on mobile. A typical example: $6.99/week for 1,500 credits, then creation stops. It’s fine for short sprints, but watch your usage.

Data safety and ownership

Privacy isn’t uniform. Some apps track identifiers and link data for analytics and personalization. Others report weak protections. HubX’s listing says data isn’t encrypted and can’t be deleted. On the other hand, VideoGPT says you retain full rights to monetize outputs.

Editing and collaboration

Text-based editing (InVideo), keyframe control (PixVerse), and image-to-video pipelines help speed up iteration and reduce costs.

Compliance and enterprise needs

If you’re building training at scale, the checklist is different: SCORM, analytics, translation, brand control, roles, and workspace structure. That’s where Colossyan fits.

Quick picks by use case

Short-form social (≤60 seconds): VideoGPT.io (free 3/day; 60s max on paid plans; simple VO; you keep the rights)

Fast templates and ads: InVideo AI (50+ languages, AI UGC ads, AI Twins), but note HD-only generative output and reliability complaints

Cinematic generation and camera moves: Kling 2.0, Runway Gen-4, Hailuo; Veo 2/3.1 for premium quality (Veo 2 for 4K up to 120s)

Avatar presenters: Colossyan stands out for realistic avatars, accurate lip-sync, and built-in multilingual support.

Turn scripts/blogs to videos: Pictory, Lumen5

Free/low-cost editors: DaVinci Resolve, OpenShot, Clipchamp

Creative VFX and gen-video: Runway ML; Adobe Firefly for safer commercial usage

L&D at scale: Colossyan for Doc2Video/PPT import, avatars, quizzes/branching, analytics, SCORM

App-by-app highlights and gotchas

InVideo AI (iOS, web)

Best for: Template-driven marketing, multi-language social videos, quick text-command edits.

Standout features: 50+ languages, text-based editing, AI UGC ads, AI Twins personal avatars, generative plugins, expanded prompt limit, Veo 3.1 tie-in, and accessibility support. The brand claims 25M customers in 190 countries. On mobile, the app shows 25K ratings and a 4.6 average.

Limits: No lip-sync in generative videos, HD-only output, occasional irrelevant stock, accent drift in voice cloning, and reports of crashes/buggy playback/inconsistent commands.

Pricing: Multiple tiers from $9.99 to $119.99, plus add-ons.

AI Video (HubX, Android)

Best for: Social effects and mobile-first workflows with auto lip-sync.

Claims: Veo 3-powered text-to-video, image/photo-to-video, emotions, voiceover + auto lip-sync, HD export, viral effects.

Limits: The developer reports that data isn’t encrypted and can’t be deleted; the app shares photos/videos and activity; there is no free trial; creation is blocked without paying; users report off-prompt results and failed generations.

Pricing: $6.99/week for 1,500 credits.

Signal: 5M+ installs and a 4.4★ score from 538K reviews show strong adoption despite complaints.

PixVerse (Android)

Best for: Fast 5-second clips, keyframe control, and remixing with a huge community.

Standout features: HD output, V5 model, Key Frame, Fusion (combine images), image/video-to-video, agent co-pilot, viral effects, daily free credits.

Limits: Credit/accounting confusion, increasing per-video cost, inconsistent prompt fidelity, and some Pro features still limited.

Signal: 10M+ downloads and a 4.5/5 rating from ~3.1M reviews.

VideoGPT.io (web)

Best for: Shorts/Reels/TikTok up to a minute with quick voiceovers.

Plans: Free 3/day (30s); weekly $6.99 unlimited (60s cap); $69.99/year Pro (same cap). Priority processing for premium.

Notes: Monetization allowed; users retain full rights; hard limit of 60 seconds on paid plans. See details at videogpt.io.
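
Weekly pricing looks cheap until you annualize it. Here is a minimal sketch comparing the two paid plans listed above. It assumes you can subscribe week by week only when you need the tool, which is my assumption rather than something the pricing page confirms.

```python
from decimal import Decimal

WEEKLY_PRICE = Decimal("6.99")   # weekly plan, as listed above
YEARLY_PRICE = Decimal("69.99")  # yearly Pro plan, as listed above


def weekly_total(weeks_needed: int) -> Decimal:
    """Cost of paying week by week for the given number of weeks."""
    return WEEKLY_PRICE * weeks_needed


def cheaper_plan(weeks_needed: int) -> str:
    """Pick the cheaper plan for a year in which you need `weeks_needed` weeks."""
    return "weekly" if weekly_total(weeks_needed) < YEARLY_PRICE else "yearly"


for weeks in (4, 10, 11, 52):
    print(f"{weeks:>2} weeks: ${weekly_total(weeks)} weekly vs ${YEARLY_PRICE} yearly -> {cheaper_plan(weeks)}")
```

The crossover comes at 11 weeks: ten weekly payments total $69.90, just under the yearly price, and the eleventh pushes you past it. A full year on the weekly plan comes to $363.48.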

VideoAI by Koi Apps (iOS)

Best for: Simple square-format AI videos and ASMR-style outputs.

Limits: Square-only output; advertised 4-minute renders can take ~30 minutes; daily cap inconsistencies; weak support/refund reports; inconsistent prompt adherence.

Pricing: Weekly $6.99–$11.99; yearly $49.99; credit packs $3.99–$7.99.

Signal: 14K ratings at 4.2/5.

Google Veo 3.1 (Gemini)

Best for: Short clips with native audio and watermarking; mobile-friendly via Gemini app.

Access: Veo 3.1 Fast (speed) vs. Veo 3.1 (quality), availability varies, 18+.

Safety: Visible and SynthID watermarks on every frame.

Note: It generates eight‑second videos with native audio today.

Proven workflows that save time and cost

Image-to-video first

Perfect a single high-quality still (in-app or with Midjourney). Animate it in Kling/Runway/Hailuo. It’s cheaper and faster than regenerating full clips from scratch.

Legal safety priority

Use Adobe Firefly when you need licensed training data and safer commercial usage.

Long shots

If you must have long single shots, use Veo 2 up to 120s or Kling’s extend-to-~3 minutes approach.

Social-first

VideoGPT.io is consistent for ≤60s outputs with quick voiceovers and full monetization rights.

Practical example

For a cinematic training intro: design one hero still, animate in Runway Gen-4, then assemble the lesson in Colossyan with narration, interactions, and SCORM export.

When to choose Colossyan for L&D (with concrete examples)

If your goal is enterprise training, I don’t think a general-purpose generator is enough. You need authoring, structure, and tracking. This is where I use Colossyan daily.

Doc2Video and PPT/PDF import

Upload a document or deck and auto-generate scenes and narration. It turns policies, SOPs, and slide notes into a draft in minutes.

Customizable avatars and Instant Avatars

Put real trainers or executives on screen with Instant Avatars, keep them consistent, and update scripts without reshoots. Conversation mode supports up to four avatars per scene.

Voices and pronunciations

Set brand-specific pronunciations for drug names or acronyms, and pick multilingual voices.

Brand Kits and templates

Lock fonts, colors, and logos so every video stays on-brand, even when non-designers build it.

Interactions and branching

Add decision trees, role-plays, and knowledge checks, then track scores.

Analytics

See plays, time watched, and quiz results, and export CSV for reporting.

SCORM export

Set pass marks and export SCORM 1.2/2004 so the LMS can track completion.

Instant Translation

Duplicate entire courses into new languages with layout and timing preserved.

Workspace management

Manage roles, seats, and folders across teams so projects don’t get lost.

Example 1: compliance microlearning

Import a PDF, use an Instant Avatar of our compliance lead, add pronunciations for regulated terms, insert branching for scenario choices, apply our Brand Kit, export SCORM 2004 with pass criteria, and monitor scores.

Example 2: global rollout

Run Doc2Video on the original policy, use Instant Translation to Spanish and German, swap in multilingual avatars, adjust layout for 16:9 and 9:16, and export localized SCORM packages for each region.

Example 3: software training

Screen-record steps, add an avatar intro, insert MCQs after key tasks, use Analytics to find drop-off points, and refine with text-based edits and animation markers.

Privacy and compliance notes

Consumer app variability

HubX’s Google Play listing says data isn’t encrypted and can’t be deleted, and that the app shares photos/videos and app activity.

InVideo and Koi Apps track identifiers and link data for analytics and personalization; they also collect usage and diagnostics. Accessibility support is a plus.

VideoGPT.io grants users full rights to monetize on YouTube/TikTok.

For regulated training content

Use governance: role-based workspace management, brand control, organized libraries.

Track outcomes: SCORM export with pass/fail criteria and analytics.

Clarify ownership and data handling for any external generator used for B-roll or intros.

Comparison cheat sheet

Highest resolution: Google Veo 2 at 4K; many others cap at 1080p; InVideo generative is HD-only.

Longest single-shot: Veo 2 up to 120s; Kling extendable to ~3 minutes (10s base per gen).

Lip-sync: More reliable in Kling/Runway/Hailuo/Pika; many generators still struggle; InVideo generative lacks lip-sync.

Native audio generation: Veo 3.1 adds native audio and watermarking; Luma adds sound too.

Speed: Adobe Firefly is very fast for short 5s clips; Runway/Pika average 10–20 minutes; Kling free can queue hours.

Pricing models: Weekly (VideoGPT, HubX), monthly SaaS (Runway, Kling, Firefly), pay-per-second (Veo 2), freemium credits (PixVerse, Vidu). Watch free trial limits and credit resets.
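To make the long-shot row concrete, here is a minimal sketch of the arithmetic behind "10s base per gen." It assumes every generate or extend pass adds the same number of seconds as the base clip, which is an assumption for illustration and may not match how Kling actually extends clips. The function name `generations_needed` is hypothetical.

```python
import math


def generations_needed(target_s, base_s=10):
    """Passes needed to reach target_s, ASSUMING each pass adds base_s seconds."""
    if target_s <= 0 or base_s <= 0:
        raise ValueError("durations must be positive")
    # Round up: a partial pass still costs a full generation.
    return math.ceil(target_s / base_s)


print(generations_needed(180))  # a ~3-minute shot at 10s per pass
print(generations_needed(45))   # a 45s shot still needs a full final pass
```

Under that assumption, a 3-minute shot means 18 passes, so queue times and credits add up quickly. Planning several shorter shots is often the cheaper route.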

How AI Short Video Generators Can Level Up Your Content Creation

The short-form shift: why AI is the accelerator now

Short-form video is not a fad. Platforms reward quick, clear clips that grab attention fast. YouTube Shorts has favored videos under 60 seconds, but Shorts is moving to allow up to 3 minutes, so you should test lengths based on topic and audience. TikTok’s Creator Rewards program currently prefers videos longer than 1 minute. These shifts matter because AI helps you hit length, pacing, and caption standards without bloated workflows.

The tooling has caught up. Benchmarks from the market show real speed and scale:

- ImagineArt’s AI Shorts claims up to 300x cost savings, 25x fewer editing hours, and 3–5 minutes from idea to publish-ready. It also offers 100+ narrator voices in 30+ languages and Pexels access for stock.

- Short AI says one long video can become 10+ viral shorts in one click and claims over 99% speech-to-text accuracy for auto subtitles across 32+ languages.

- OpusClip reports 12M+ users and outcomes like 2x average views and +57% watch time when repurposing long-form, plus a free tier for getting started.

- Kapwing can generate fully edited shorts (15–60s) with voiceover, subtitles, an optional AI avatar, and auto B-roll, alongside collaboration features.

- Invideo AI highlights 25M+ users, a 16M+ asset library, and 50+ languages.

- VideoGPT focuses on mobile workflows with ultra-realistic voiceover and free daily generations (up to 3 videos/day) and says users keep the right to monetize their output.

- Adobe Firefly emphasizes commercially safe generation trained on licensed sources and outputs 5-second 1080p clips with fine control over motion and style.

The takeaway: if you want more reach with less overhead, use an AI short video generator as your base layer, then refine for brand and learning goals.

What AI short video generators actually do

Most tools now cover a common map of features:

- Auto-script and ideation: Generate scripts from prompts, articles, or documents. Some offer templates based on viral formats, like Short AI’s 50+ hashtag templates.

- Auto-captions and stylized text: Most tools offer automatic captions with high accuracy claims (97–99% range). Dynamic caption styles, emoji, and GIF support help you boost retention.

- Voiceover and multilingual: Voice libraries span 30–100+ languages with premium voices and cloning options.

- Stock media and effects: Large libraries—like Invideo’s 16M+ assets and ImagineArt’s Pexels access—plus auto B-roll and transitions from tools like Kapwing.

- Repurpose long-form: Clip extraction that finds hooks and reactions from podcasts and webinars via OpusClip and Short AI.

- Platform formatting and scheduling: Aspect ratio optimization and scheduling to multiple channels; Short AI supports seven platforms.

- Mobile-friendly creation: VideoGPT lets you do this on your phone or tablet.

- Brand-safe generation: Firefly leans on licensed content and commercial safety.

Example: from a one-hour webinar, tools like OpusClip and Short AI claim to auto-extract 10+ clips in under 10 minutes, then add captions at 97–99% accuracy. That’s a week of posts from one recording.

What results to target

Be realistic, but set clear goals based on market claims:

- Speed: First drafts in 1–5 minutes; Short AI and ImagineArt both point to 10x or faster workflows.

- Cost: ImagineArt claims up to 300x cost savings.

- Engagement: Short AI cites +50% engagement; OpusClip reports 2x average views and +57% watch time.

- Scale: 10+ clips from one long video is normal; 3–5 minutes idea to publish is a useful benchmark.

Platform-specific tips for Shorts, TikTok, Reels

- YouTube Shorts: Keep most videos under 60s for discovery, but test 60–180s as Shorts expands (as noted by Short AI).

- TikTok: The Creator Rewards program favors >1-minute videos right now (per Short AI).

- Instagram Reels and Snapchat Spotlight: Stick to vertical 9:16. Lead with a hook in the first 3 seconds. Design for silent viewing with clear on-screen text.

Seven quick-win use cases

- Turn webinars or podcasts into snackable clips

Example: Short AI and OpusClip extract hooks from a 45-minute interview and produce 10–15 clips with dynamic captions.

- Idea-to-video rapid prototyping

Example: ImagineArt reports 3–5 minutes from idea to publish-ready.

- Multilingual reach at scale

Example: Invideo supports 50+ languages; Kapwing claims 100+ for subtitles/translation.

- On-brand product explainers and microlearning

Example: Firefly focuses on brand-safe visuals great for e-commerce clips.

- News and thought leadership

Example: Kapwing’s article-to-video pulls fresh info and images from a URL.

- Mobile-first social updates

Example: VideoGPT enables quick creation on phones.

- Monetization-minded content

Example: Short AI outlines earnings options; Invideo notes AI content can be monetized if original and policy-compliant.

How Colossyan levels up short-form for teams (especially L&D)

- Document-to-video and PPT/PDF import: I turn policies, SOPs, and decks into videos fast.

- Avatars, voices, and pronunciations: Stock or Instant Avatars humanize short clips.

- Brand Kits and templates: Apply fonts, colors, and logos with one click.

- Interaction and micro-assessments: Add short quizzes to 30–60s training clips.

- Analytics and SCORM: Track plays and quiz scores, and export data for your LMS.

- Global localization: Instant Translation preserves timing and layout.

- Collaboration and organization: Assign roles, comment inline, and organize drafts.

A step-by-step short-form workflow in Colossyan

- Start with Doc2Video to import a one-page memo.

- Switch to 9:16 and apply a Brand Kit.

- Assign avatar and voice; add pauses and animations.

- Add background and captions.

- Insert a one-question MCQ for training.

- Use Instant Translation for language versions.

- Review Analytics, export CSV, and refine pacing.

Creative tips that travel across platforms

- Hook first (first 3 seconds matter).

- Caption smartly.

- Pace with intent.

- Balance audio levels.

- Guide the eye with brand colors.

- Batch and repurpose from longer videos.

Measurement and iteration

Track what actually moves the needle:

- Core metrics: view-through rate, average watch time, completion.

- For L&D: quiz scores, time watched, and differences by language or region.

In Colossyan: check Analytics, export CSV, and refine based on data.
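If you want to run these numbers yourself, a small script over an exported CSV is enough. The sketch below is illustrative only: the column names `viewer_id` and `seconds_watched` are hypothetical and will not necessarily match what any analytics export contains, so rename them to fit your file. It computes average watch time and completion rate, where "completed" is assumed to mean watching at least the full video length.

```python
import csv
import io


def summarize_views(csv_text, video_length_s):
    """Summarize per-view rows; expects a hypothetical 'seconds_watched' column."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {"views": 0, "avg_watch_s": 0.0, "completion_rate": 0.0}
    watched = [float(r["seconds_watched"]) for r in rows]
    # Assumption: a view counts as complete once it reaches the full length.
    completed = sum(1 for s in watched if s >= video_length_s)
    return {
        "views": len(rows),
        "avg_watch_s": sum(watched) / len(watched),
        "completion_rate": completed / len(rows),
    }


# Made-up sample data for a 45-second clip.
SAMPLE = """viewer_id,seconds_watched
a,45
b,30
c,15
d,45
"""

print(summarize_views(SAMPLE, video_length_s=45))
```

Run it once per language or region export and compare the results side by side to spot where pacing or translation needs work.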

How AI Video from Photo Tools Are Changing Content Creation

AI video from photo tools are turning static images into short, useful clips in minutes. If you work in L&D, marketing, or internal communications, this matters. You can create b-roll, social teasers, or classroom intros without filming anything. And when you need full training modules with analytics and SCORM, there’s a clean path for that too.

AI photo-to-video tools analyze a single image to simulate camera motion and synthesize intermediate frames, turning stills into short, realistic clips. For training and L&D, platforms like Colossyan add narration with AI avatars, interactive quizzes, brand control, multi-language support, analytics, and SCORM export - so a single photo can become a complete, trackable learning experience.

What “AI video from photo” actually does

In plain English, image to video AI reads your photo, estimates depth, and simulates motion. It might add a slow pan, a zoom, or a parallax effect that separates foreground from background. Some tools interpolate “in-between” frames so the movement feels smooth. Others add camera motion animation, light effects, or simple subject animation.
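To see the simplest version of this idea, consider a centered zoom-in on a still. The sketch below is not how generative models like Veo or Runway work (they rely on learned depth and motion); it only shows the basic "simulated camera move" as a series of crop boxes, interpolated linearly from the full frame to a tighter one. The function name and the `end_scale` parameter are hypothetical, chosen for illustration.

```python
def zoom_crop_windows(width, height, frames, end_scale=0.8):
    """Return one (left, top, right, bottom) crop box per frame for a centered zoom-in."""
    if frames < 2:
        raise ValueError("need at least two frames")
    boxes = []
    for i in range(frames):
        t = i / (frames - 1)                  # 0.0 on the first frame, 1.0 on the last
        scale = 1.0 + (end_scale - 1.0) * t   # linear interpolation of the crop size
        w, h = width * scale, height * scale
        left, top = (width - w) / 2, (height - h) / 2  # keep the crop centered
        boxes.append((round(left), round(top), round(left + w), round(top + h)))
    return boxes


# Three frames zooming from the full 1920x1080 image down to its center half.
for box in zoom_crop_windows(1920, 1080, frames=3, end_scale=0.5):
    print(box)
```

Cropping each box and resizing it back to the output resolution yields the frames of a basic zoom. Parallax goes a step further by moving foreground and background layers at different rates, which is where depth estimation comes in.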

Beginner-friendly examples:

- Face animation: tools like Deep Nostalgia by MyHeritage and D-ID animate portraits for quick emotive clips. This is useful for heritage storytelling or simple character intros.

- Community context: Reddit threads explain how interpolation and depth estimation help create fluid motion from a single photo. That’s the core method behind many free and paid tools.

Where it shines:

- B-roll when you don’t have footage

- Social posts from your photo library

- Short intros and quick promos

- Visual storytelling from archives or product stills

A quick survey of leading photo-to-video tools (and where each fits)

Colossyan

A leading AI video creation platform that turns text or images into professional presenter-led videos. It’s ideal for marketing, learning, and internal comms teams who want to save on filming time and production costs. You can choose from realistic AI actors, customize their voice, accent, and gestures, and easily brand the video with your own assets. Colossyan’s browser-based editor makes it simple to update scripts or localize content into multiple languages - no reshoots required.

Try it free and see how fast you can go from script to screen. Example: take a product launch doc and short script, select an AI presenter, and export a polished explainer video in minutes - perfect for onboarding, marketing launches, or social posts.

EaseMate AI

A free photo to video generator using advanced models like Veo 3 and Runway. No skills or sign-up required. It doesn’t store your uploads in the cloud, which helps with privacy. You can tweak transitions, aspect ratios, and quality, and export watermark-free videos. This is handy for social teams testing ideas. Example: take a product hero shot, add a smooth pan and depth zoom, and export vertical 9:16 for Reels.

Adobe Firefly

Generates HD up to 1080p, with 4K coming. It integrates with Adobe Creative Cloud and offers intuitive camera motion controls. Adobe also notes its training data is licensed or public domain, which helps with commercial safety. Example: turn a static product image into 1080p b-roll with a gentle dolly-in and rack focus for a landing page.

Vidnoz

Free image-to-video with 30+ filters and an online editor. Supports JPG, PNG, WEBP, and even M4V inputs. Can generate HD without watermarks. It includes templates, avatars, a URL-to-video feature, support for 140+ languages, and realistic AI voices. There’s one free generation per day. Example: convert a blog URL to a teaser video, add film grain, and auto-generate an AI voiceover in Spanish.

Luma AI