
How AI Video from Photo Tools Are Changing Content Creation

AI video from photo tools are turning static images into short, useful clips in minutes. If you work in L&D, marketing, or internal communications, this matters. You can create b-roll, social teasers, or classroom intros without filming anything. And when you need full training modules with analytics and SCORM, there’s a clean path for that too.

AI photo-to-video tools analyze a single image to simulate camera motion and synthesize intermediate frames, turning stills into short, realistic clips. For training and L&D, platforms like Colossyan add narration with AI avatars, interactive quizzes, brand control, multi-language support, analytics, and SCORM export - so a single photo can become a complete, trackable learning experience.

What “AI video from photo” actually does

In plain English, image to video AI reads your photo, estimates depth, and simulates motion. It might add a slow pan, a zoom, or a parallax effect that separates foreground from background. Some tools interpolate “in-between” frames so the movement feels smooth. Others add camera motion animation, light effects, or simple subject animation.
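To make "estimates depth and simulates motion" concrete, here is a minimal sketch of a depth-based parallax slide. It assumes a depth map already exists (real tools estimate one with a neural network; here it is simply an input). Images are small grayscale grids stored as nested lists, and the function names are hypothetical. It illustrates the idea only and does not describe how any specific product works.

```python
from typing import List

Image = List[List[float]]  # grayscale rows; depth uses 0.0 = far, 1.0 = near

def parallax_frame(image: Image, depth: Image, t: float, max_shift: float = 8.0) -> Image:
    """Render one frame of a horizontal camera slide at time t in [0, 1].

    Near pixels shift more than far pixels, so the foreground appears to
    separate from the background.
    """
    h, w = len(image), len(image[0])
    offset = (t - 0.5) * 2.0  # camera position from -1 (left) to +1 (right)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Backward mapping: find which source pixel lands here.
            # Simplification: uses the depth at the destination pixel.
            shift = offset * max_shift * depth[y][x]
            src_x = min(max(int(round(x - shift)), 0), w - 1)  # clamp at edges
            out[y][x] = image[y][src_x]
    return out

def render_clip(image: Image, depth: Image, n_frames: int = 48,
                max_shift: float = 8.0) -> List[Image]:
    """Sample t evenly; more samples means more in-between frames and smoother motion."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    return [parallax_frame(image, depth, i / (n_frames - 1), max_shift)
            for i in range(n_frames)]
```

Production tools add hole filling, zoom, and learned frame interpolation on top of this, but the depth-scaled shift is the core of the parallax effect.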

Beginner-friendly examples:

- Face animation: tools like Deep Nostalgia by MyHeritage and D-ID animate portraits for quick emotive clips. This is useful for heritage storytelling or simple character intros.

- Community context: Reddit threads explain how interpolation and depth estimation help create fluid motion from a single photo. That’s the core method behind many free and paid tools.

Where it shines:

- B-roll when you don’t have footage

- Social posts from your photo library

- Short intros and quick promos

- Visual storytelling from archives or product stills

A quick survey of leading photo-to-video tools (and where each fits)

Colossyan

A leading AI video creation platform that turns text or images into professional presenter-led videos. It’s ideal for marketing, learning, and internal comms teams who want to save on filming time and production costs. You can choose from realistic AI actors, customize their voice, accent, and gestures, and easily brand the video with your own assets. Colossyan’s browser-based editor makes it simple to update scripts or localize content into multiple languages - no reshoots required.

Try it free and see how fast you can go from script to screen. Example: take a product launch doc and short script, select an AI presenter, and export a polished explainer video in minutes - perfect for onboarding, marketing launches, or social posts.

EaseMate AI

A free photo to video generator using advanced models like Veo 3 and Runway. No skills or sign-up required. It doesn’t store your uploads in the cloud, which helps with privacy. You can tweak transitions, aspect ratios, and quality, and export watermark-free videos. This is handy for social teams testing ideas. Example: take a product hero shot, add a smooth pan and depth zoom, and export vertical 9:16 for Reels.

Adobe Firefly

Generates HD up to 1080p, with 4K coming. It integrates with Adobe Creative Cloud and offers intuitive camera motion controls. Adobe also notes its training data is licensed or public domain, which helps with commercial safety. Example: turn a static product image into 1080p b-roll with a gentle dolly-in and rack focus for a landing page.

Vidnoz

Free image-to-video with 30+ filters and an online editor. Supports JPG, PNG, WEBP, and even M4V inputs. Can generate HD without watermarks. It includes templates, avatars, a URL-to-video feature, support for 140+ languages, and realistic AI voices. There’s one free generation per day. Example: convert a blog URL to a teaser video, add film grain, and auto-generate an AI voiceover in Spanish.

Luma AI

Focuses on realistic animation from stills. Strong fit for marketing, gaming, VR, and real estate teams that need lifelike motion. It also offers an API for automation at scale. Example: animate an architectural rendering with a smooth camera orbit for a property preview.

Vheer

Creates up to 1080p videos with no subscriptions or watermarks. You can set duration, frame rate, and resolution, with accurate prompt matching. It outputs 5–10 second clips that are smooth and clean. Example: make a 10-second pan across a still infographic for LinkedIn.

Vidu

Emphasizes converting text and images into videos to increase engagement and save production time. Example: combine a feature list with a product image to produce a short explainer clip with minimal editing.

Face animation tools for beginners

Deep Nostalgia and D-ID can bring portraits to life. These are helpful for quick, emotive moments, like employee history features or culture stories.

My take: these tools are great for micro-clips and quick wins. For brand-safe, multi-language training at scale, you’ll hit a ceiling. That’s where a full platform helps.

Where these tools shine vs. when you need a full video platform

Where they shine:

- Speed: create motion from a still in minutes

- Short-form b-roll for social and websites

- Single-purpose clips and motion tests

- Lightweight edits with simple camera moves

Where you hit limits:

- Multi-scene narratives and consistent visual identity

- Multi-speaker dialogues with timing and gestures

- Compliance-friendly exports like SCORM video

- Structured learning with quizzes, branching, and analytics

- Localization that preserves layout and timing across many languages

- Central asset management and workspace permissions

Turning photos into polished training and learning content with Colossyan

I work at Colossyan, and here’s how we approach this for L&D. You can start with a single photo, a set of slides, or a process document, then build a complete, interactive training flow - no advanced design skills required.

Why Colossyan for training:

- Document to video: import a PDF, Word doc, or slide deck to auto-build scenes and draft narration.

- AI avatars for training: choose customizable avatars, or create Instant Avatars of your trainers. Add AI voiceover - use default voices or clone your own for consistency.

- Brand kit for video: apply fonts, colors, and logos in one click.

- Interactive training videos: add quizzes and branching to turn passive content into decision-making practice.

- Analytics and SCORM: export SCORM 1.2/2004 and track completions, scores, and time watched in your LMS.

- Instant translation video: translate your entire module while keeping timing and animations intact.

- Pronunciations: lock in brand terms and technical words so narration is accurate.

Example workflow: safety onboarding from factory photos

- Import your SOP PDF or PPT with equipment photos. We convert each page into scenes.

- Add a safety trainer avatar for narration. Drop in your photos from the Content Library. Use animation markers to highlight hazards at the right line in the script.

- Use Pronunciations for technical terms. If you want familiarity, clone your trainer’s voice.

- Add a branching scenario: “Spot the hazard.” Wrong selections jump to a scene that explains consequences; right selections proceed.

- Export as SCORM 1.2/2004 with a pass mark. Push it to your LMS and monitor quiz scores and time watched.
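Colossyan's SCORM export handles tracking inside the package. For readers curious what "pass mark" and "time watched" look like on the LMS side, here is a sketch that maps one attempt onto the SCORM 1.2 and SCORM 2004 run-time data model elements. The element names come from those specifications; the helper names and the 0-100 score scale are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class AttemptResult:
    score: float          # learner's quiz score on an assumed 0-100 scale
    pass_mark: float      # e.g. 80 for an 80% pass mark
    seconds_watched: int  # time spent in the module

def _hms(total_seconds: int):
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    return hours, minutes, seconds

def scorm12_values(r: AttemptResult) -> Dict[str, str]:
    """Values a SCORM 1.2 package would report through LMSSetValue."""
    h, m, s = _hms(r.seconds_watched)
    return {
        "cmi.core.score.raw": str(round(r.score)),
        "cmi.core.score.min": "0",
        "cmi.core.score.max": "100",
        "cmi.core.lesson_status": "passed" if r.score >= r.pass_mark else "failed",
        "cmi.core.session_time": f"{h:02d}:{m:02d}:{s:02d}",  # CMITimespan HH:MM:SS
    }

def scorm2004_values(r: AttemptResult) -> Dict[str, str]:
    """The SCORM 2004 equivalents, reported through SetValue."""
    h, m, s = _hms(r.seconds_watched)
    return {
        "cmi.score.raw": str(round(r.score)),
        "cmi.score.scaled": f"{r.score / 100:.2f}",  # scaled score lies in [-1, 1]
        "cmi.completion_status": "completed",
        "cmi.success_status": "passed" if r.score >= r.pass_mark else "failed",
        "cmi.session_time": f"PT{h}H{m}M{s}S",  # ISO 8601 duration
    }
```

SCORM 2004 splits 1.2's single `lesson_status` into separate completion and success statuses, which is why the two dictionaries differ in shape.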

Example workflow: product update explainer from a single hero image

- Start with Document to Video to generate a first-draft script.

- Add your hero photo and screenshots. Use Conversation Mode to stage a dialogue between a PM avatar and a Sales avatar.

- Resize from 16:9 for the LMS to 9:16 for mobile snippets.

- Translate to German and Japanese. The timing and animation markers carry over.

Example script snippet you can reuse

- On screen: close-up of the new dashboard image. Avatar narration: “This release introduces three upgrades: real-time alerts, role-based views, and offline sync. Watch how the ‘Alerts’ tab updates as we simulate a network event.” Insert an animation marker to highlight the Alerts icon.

Example interactive quiz

- Question: Which control prevents unauthorized edits?

- A) Draft lock B) Role-based views C) Offline sync D) Real-time alerts

- Correct: B. Feedback: “Role-based views restrict edit rights by role.”
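The quiz above, combined with the "wrong answer jumps to an explainer scene" branching from the safety workflow, can be modeled as plain data. This is a hypothetical structure for illustration, not Colossyan's internal format; the scene ids are made up.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class Question:
    prompt: str
    options: Dict[str, str]   # option letter -> option text
    correct: str
    feedback: str
    next_if_correct: str      # scene id to continue to
    next_if_wrong: str        # remediation scene id

def answer(q: Question, choice: str) -> Tuple[bool, str, str]:
    """Grade one choice and pick the next scene (the branching step)."""
    if choice not in q.options:
        raise ValueError(f"unknown option {choice!r}")
    correct = choice == q.correct
    next_scene = q.next_if_correct if correct else q.next_if_wrong
    return correct, q.feedback, next_scene

quiz = Question(
    prompt="Which control prevents unauthorized edits?",
    options={"A": "Draft lock", "B": "Role-based views",
             "C": "Offline sync", "D": "Real-time alerts"},
    correct="B",
    feedback="Role-based views restrict edit rights by role.",
    next_if_correct="recap",               # hypothetical scene ids
    next_if_wrong="permissions-explainer",
)

print(answer(quiz, "C"))
# (False, 'Role-based views restrict edit rights by role.', 'permissions-explainer')
```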

Production tips for better photo-to-video results

- Start with high-resolution images; avoid heavy compression.

- Pick the right aspect ratio per channel: 16:9 for LMS, 9:16 for social.

- Keep camera motion subtle; time highlights with animation markers.

- Balance music and narration with per-scene volume controls.

- Lock pronunciations for brand names; use cloned voices for consistency.

- Keep micro-clips short; chain scenes with templates for longer modules.

- Localize early; Instant Translation preserves timing and layout.
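Per-scene volume controls are usually expressed in decibels. This sketch shows the arithmetic behind "music under narration": a dB change converts to a linear amplitude multiplier of 10^(dB/20). The -18 dB default is an assumed starting point, not a Colossyan setting, and samples are plain float lists in [-1, 1].

```python
from typing import List

def db_to_gain(db: float) -> float:
    """Convert a level change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def mix(narration: List[float], music: List[float], music_db: float = -18.0) -> List[float]:
    """Mix equal-length sample lists with the music lowered by music_db.

    The sum is clipped to [-1, 1] so loud peaks do not distort past full scale.
    """
    g = db_to_gain(music_db)
    return [max(-1.0, min(1.0, n + g * m)) for n, m in zip(narration, music)]
```

A -6 dB change roughly halves the amplitude, which is why small dB moves are usually enough to keep music from competing with the voice.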

Repurposing ideas: from static assets to scalable video

- SOPs and process docs to microlearning: Document to Video builds scenes; add photos, quizzes, and export SCORM.

- Field photos to scenario-based training: use Conversation Mode for role-plays like objection handling.

- Slide decks to on-demand refreshers: import PPT/PDF; speaker notes become scripts.

- Blog posts and web pages to explainers: summarize with Document to Video; add screenshots or stock footage.

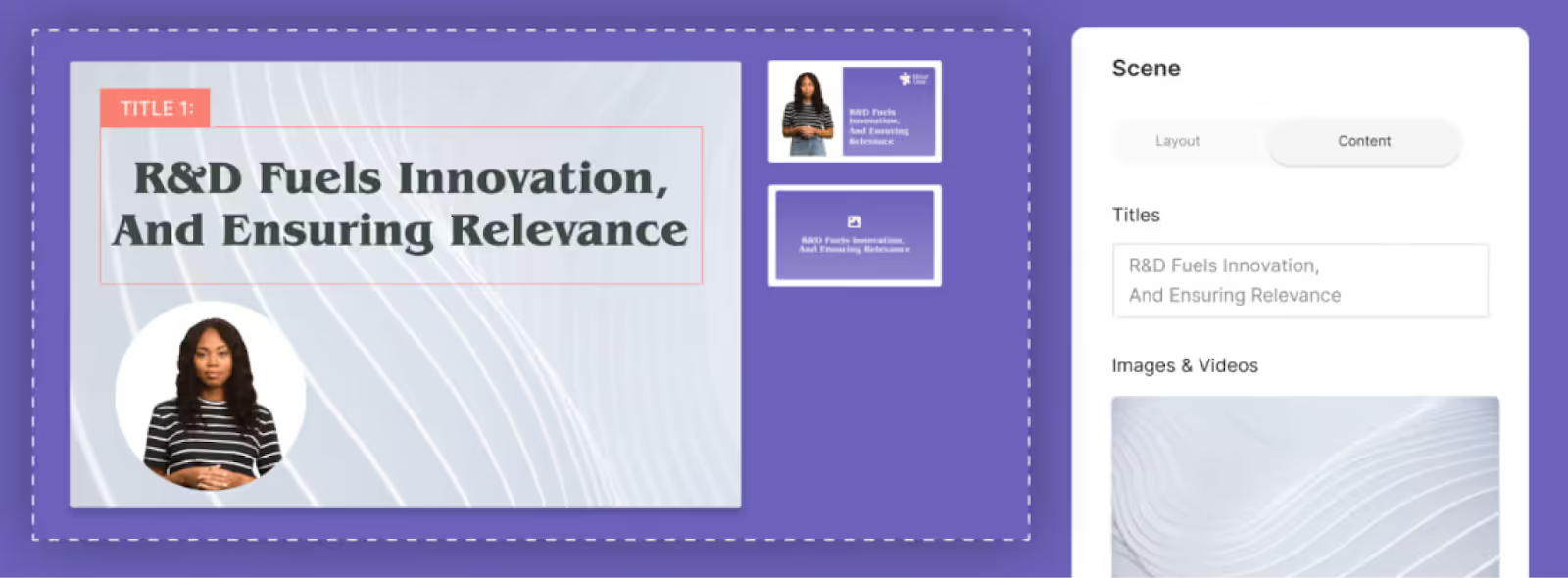

Convert PowerPoints Into Videos With Four Clicks

Converting PowerPoints into videos isn’t just convenient anymore—it’s essential. Videos are more engaging, accessible, and easier to share across platforms. You don’t need special software to watch them, and they help your presentations reach a wider audience.

Instead of manually recording or exporting slides, which can be time-consuming and clunky, you can let Colossyan handle the conversion. Here's a simple, step-by-step guide to turning your PowerPoint presentation into a professional video using Colossyan.

🪄 Step 1: Upload Your PowerPoint File

Start by logging into your Colossyan account.

- Click “Create Video” and select “Upload Document”.

- Upload your PowerPoint (.pptx) file directly from your computer or cloud storage.

Colossyan will automatically process your slides and prepare them for video creation.

🎨 Step 2: Apply Your Brand Kit

Keep your video on-brand and professional.

- Open your Brand Kit settings to automatically apply your company’s logo, colors, and fonts.

- This ensures every video stays consistent with your visual identity—perfect for corporate or training content.

🗣️ Step 3: Add an AI Avatar and Voice

Bring your slides to life with a human touch.

- Choose from Colossyan’s library of AI avatars to act as your on-screen presenter.

- Select a voice and language that best matches your tone or audience (Colossyan supports multiple languages and natural-sounding voices).

- You can also adjust the script or narration directly in the editor.

✏️ Step 4: Customize and Edit Your Video

Once your slides are imported:

- Rearrange scenes, update text, or add visuals in the Editor.

- Insert quizzes, interactive elements, or analytics tracking if you’re creating training content.

- Adjust pacing, transitions, and on-screen media for a polished final result.

📦 Step 5: Export and Share Your Video

When you’re happy with your video:

- Export it in your preferred format (Full HD 1080p is a great balance of quality and file size).

- For e-learning or training, export as a SCORM package to integrate with your LMS.

- Download or share directly via a link—no PowerPoint software needed.
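The quality-versus-file-size trade-off comes down to bitrate multiplied by duration. This sketch estimates export size; the bitrates in the usage line are assumptions for illustration, not Colossyan's export settings.

```python
def estimated_size_mb(duration_s: float, video_kbps: float, audio_kbps: float = 128) -> float:
    """Approximate file size in megabytes (10^6 bytes) from average bitrates.

    size = total bitrate x duration, divided by 8 to convert bits to bytes.
    Container overhead is ignored, so real files run slightly larger.
    """
    total_bits = (video_kbps + audio_kbps) * 1000 * duration_s
    return total_bits / 8 / 1_000_000

# A 10-minute video at an assumed 5,000 kbps for 1080p:
print(f"{estimated_size_mb(600, 5000):.1f} MB")  # 384.6 MB
```

Doubling the video bitrate roughly doubles the file, so higher resolutions mainly cost storage and upload time.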

💡 Why Use Colossyan for PowerPoint-to-Video Conversion?

- No technical skills required: Turn decks into videos in minutes.

- Consistent branding: Maintain a professional, on-brand look.

- Engaging presentation: Lifelike AI avatars and voiceovers hold attention better than static slides.

- Trackable performance: Use quizzes and analytics to measure engagement.

- Flexible output: From corporate training to educational content, your videos are ready for any platform.

🚀 In Short

Converting PowerPoints to videos with Colossyan saves time, increases engagement, and makes your content more accessible than ever.

You upload, customize, and share—all in a few clicks. It’s not just a faster way to make videos; it’s a smarter way to make your presentations work harder for you.

Translate Videos to English: The Complete Enterprise Localization Strategy

When you need to translate videos to English, you're tackling more than a simple language conversion task—you're executing a strategic business decision to expand your content's reach to the world's dominant business language. English remains the lingua franca of global commerce, spoken by 1.5 billion people worldwide and serving as the primary or secondary language in most international business contexts. But traditional video translation is expensive, slow, and operationally complex. How do modern organizations localize video content efficiently without sacrificing quality or breaking the budget?

The strategic answer lies in leveraging AI-powered translation workflows that integrate directly with your video creation process. Instead of treating translation as an afterthought—a separate project requiring new vendors, multiple handoffs, and weeks of coordination—platforms like Colossyan demonstrate how intelligent automation can make multilingual video creation as simple as clicking a button. This comprehensive guide reveals exactly how to translate videos to English at scale, which approach delivers the best ROI for different content types, and how leading organizations are building global video strategies that compound competitive advantage.

Why Translating Videos to English Is a Strategic Priority

English video translation isn't just about accessibility—it's about market access, brand credibility, and competitive positioning in the global marketplace.

The Global Business Case for English Video Content

English holds a unique position in global business. While Mandarin Chinese has more native speakers, English dominates international commerce, technology, and professional communication. Consider these strategic realities:

- Market Reach: The combined purchasing power of English-speaking markets (US, UK, Canada, Australia, and English speakers in other countries) exceeds $30 trillion annually. A video available only in another language excludes this massive audience entirely.

- B2B Decision-Making: In multinational corporations, English is typically the common language regardless of headquarters location. Technical evaluations, vendor assessments, and purchasing decisions happen in English, meaning your product demos, case studies, and training content must be available in English to be seriously considered.

- Digital Discovery: English dominates online search and content discovery. Google processes English queries differently and more comprehensively than most other languages. Video content in English is more discoverable, more likely to rank, and more frequently shared in professional contexts.

- Talent Acquisition and Training: For companies with distributed or global teams, English training content ensures every team member, regardless of location, can access critical learning materials. This is particularly important in tech, engineering, and other fields where English is the de facto standard.

The Traditional Translation Bottleneck

Despite these compelling reasons, many organizations underutilize video because traditional translation is prohibitively expensive and operationally complex:

- Cost: Professional human translation, voice-over recording, and video re-editing for a 10-minute video typically costs $2,000-5,000 per target language. For videos requiring multiple languages, costs multiply rapidly.

- Timeline: Traditional workflows span 2-4 weeks from source video completion to translated version delivery, during which your content sits idle rather than driving business value.

- Coordination Complexity: Managing translation agencies, voice talent, and video editors across time zones creates project management overhead that many teams simply can't sustain.

- Update Challenge: When source content changes (products update, regulations change, information becomes outdated), the entire translation cycle must repeat. This makes maintaining current multilingual content practically impossible.

These barriers mean most organizations either: (1) don't translate video content at all, limiting global reach, or (2) translate only the highest-priority flagship content, leaving the bulk of their video library unavailable to English-speaking audiences.

How AI Translation Transforms the Economics

AI-powered video translation fundamentally changes this calculus. The global AI video translation market was valued at USD 2.68 billion and is projected to reach USD 33.4 billion by 2034—a 28.7% CAGR—driven by organizations discovering that AI makes translation affordable, fast, and operationally sustainable.
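The text does not state the base year for the USD 2.68 billion figure, but the three numbers can be checked against each other with the compound-growth formula end = start × (1 + r)^years. A quick sketch:

```python
import math

start, end, cagr = 2.68, 33.4, 0.287  # USD billions and growth rate quoted above

# Years needed to grow from start to end at the stated CAGR
years = math.log(end / start) / math.log(1 + cagr)
print(f"{years:.1f} years")  # 10.0 years, consistent with a base year around 2024

# Conversely, the CAGR implied by a 10-year horizon
implied = (end / start) ** (1 / 10) - 1
print(f"{implied:.1%}")  # 28.7%
```

The figures are internally consistent for a roughly ten-year projection window ending in 2034.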

Modern platforms enable workflows where:

- Translation happens in hours instead of weeks

- Costs can be up to 90% lower than traditional services

- Updates are trivial (regenerate rather than re-translate)

- Multiple languages can be created simultaneously (no linear cost scaling)

This transformation makes it practical to translate your entire video library to English, not just select pieces—fundamentally expanding your content's impact and reach.

Understanding Your Translation Options: Subtitles vs. Dubbing

When you translate videos to English, your first strategic decision is how you'll deliver that translation. This isn't just a technical choice—it shapes viewer experience, engagement, and content effectiveness.

English Subtitles: Preserving Original Audio

Adding English subtitles keeps your original video intact while making content accessible to English-speaking audiences.

Advantages:

- Preserves authenticity: Original speaker's voice, emotion, and personality remain unchanged

- Lower production complexity: No need for voice talent or audio replacement

- Cultural preservation: Viewers hear authentic pronunciation, accent, and delivery

- Accessibility bonus: Subtitles also benefit deaf/hard-of-hearing viewers and enable sound-off viewing

Disadvantages:

- Cognitive load: Viewers must split attention between reading and watching

- Reduced engagement: Reading subtitles is less immersive than native language audio

- Visual complexity: For content with heavy on-screen text or detailed visuals, subtitles can overwhelm

Best use cases:

- Documentary or interview content where speaker authenticity is central

- Technical demonstrations where viewers need to focus on visual details

- Content for audiences familiar with reading subtitles

- Social media video (where much viewing happens with sound off)

AI Dubbing: Creating Native English Audio

Replacing original audio with AI-generated English voice-over creates an immersive, native viewing experience.

Advantages:

- Natural viewing experience: English speakers can simply watch and listen without reading

- Higher engagement: Viewers retain more when not splitting attention with subtitles

- Professional polish: AI voices are now remarkably natural and appropriate for business content

- Emotional connection: Voice inflection and tone enhance message impact

Disadvantages:

- Original speaker presence lost: Viewers don't hear the actual person speaking

- Voice quality variance: AI voice quality varies by platform; testing is important

- Lip-sync considerations: If original speaker is prominently on camera, lip movements won't match English audio

Best use cases:

- Training and educational content where comprehension is paramount

- Marketing videos optimizing for engagement and emotional connection

- Content where the speaker isn't prominently on camera

- Professional communications where polished delivery matters

The Hybrid Approach: Maximum Accessibility

Many organizations implement both:

- Primary audio: AI-generated English dubbing for immersive viewing

- Secondary option: Subtitles available for viewer preference

This combination delivers maximum accessibility and viewer choice, though it requires slightly more production work.

The Colossyan Advantage: Integrated Translation

This is where a unified platform saves the most effort. Rather than choosing between subtitles and dubbing as separate production tracks, Colossyan lets you generate both from a single workflow:

1. Your original script is auto-translated to English

2. AI generates natural English voice-over automatically

3. English subtitles are created simultaneously

4. You can even generate an entirely new video with an English-speaking AI avatar

This integrated approach means you're not locked into a single translation method—you can test different approaches and provide multiple options to accommodate viewer preferences.

Step-by-Step: How to Translate Videos to English Efficiently

Executing professional video translation requires a systematic approach. Here's the workflow leading organizations use to translate content efficiently and at scale.

Phase 1: Prepare Your Source Content

Quality translation starts with quality source material. Invest time here to ensure smooth downstream processes.

Obtain accurate source transcription:

If your video was created from a script, you're already ahead—that script is your starting point. If not, you need an accurate transcript of what's being said.

Modern AI transcription tools like OpenAI's Whisper, Otter.ai, or built-in platform features can deliver 95%+ accuracy for clear audio. Upload your video, receive the transcript, and spend 15-20 minutes reviewing for errors in:

- Proper names and terminology

- Technical jargon specific to your industry

- Numbers, dates, and specific figures

- Acronyms and abbreviations

This investment dramatically improves translation quality since errors in transcription cascade into translation mistakes.
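A reviewer's 15-20 minutes go further when the risky spots are highlighted first. This is a rough heuristic sketch (hypothetical function, regex-based) that flags numbers and dates, acronyms, and capitalized mid-sentence words as likely names or terms. It will miss a name that starts a sentence and will flag some harmless words; it narrows the search rather than replacing the review.

```python
import re
from typing import List, Tuple

NUMBER = re.compile(r"\d(?:[\d.,:/]*\d)?%?")   # figures, dates, times, percentages
ACRONYM = re.compile(r"\b[A-Z]{2,}s?\b")       # e.g. SCORM, LMS, APIs
CAPITALIZED = re.compile(r"\b[A-Z][a-z]+\b")   # rough proxy for names and terms

def review_flags(transcript: str) -> List[Tuple[str, str]]:
    """Return (category, text) pairs a reviewer should double-check."""
    flags: List[Tuple[str, str]] = []
    for sentence in re.split(r"(?<=[.!?])\s+", transcript.strip()):
        flags += [("number", m.group()) for m in NUMBER.finditer(sentence)]
        flags += [("acronym", m.group()) for m in ACRONYM.finditer(sentence)]
        # Skip the first word: it is capitalized only because it starts the sentence.
        rest = sentence.split(" ", 1)[1] if " " in sentence else ""
        flags += [("name", m.group()) for m in CAPITALIZED.finditer(rest)]
    return flags
```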

Clean and optimize the script:

Before translation, refine your source text:

- Remove filler words (um, uh, like, you know)

- Clarify ambiguous phrases that might confuse machine translation

- Add context notes for terms that shouldn't be translated (product names, company names)

- Break very long sentences into shorter, clearer statements

Well-prepared source text yields dramatically better translations—spending 30 minutes optimizing can save hours of correction later.
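Two of the clean-up steps above are easy to automate. In this sketch (hypothetical helper names), only unambiguous fillers such as "um" and "uh" are removed automatically, because words like "like" or "you know" often carry meaning and are safer left to a human. Long sentences are flagged for splitting, using an assumed 20-word threshold.

```python
import re
from typing import List

# Optional leading comma/space, the filler itself, optional trailing comma.
FILLERS = re.compile(r",?\s*\b(?:um+|uh+|erm)\b,?", re.IGNORECASE)
MAX_WORDS = 20  # assumed threshold for a "very long" sentence

def clean_script(text: str) -> str:
    """Remove unambiguous filler words and tidy the leftover spacing."""
    text = FILLERS.sub("", text)
    return re.sub(r"\s{2,}", " ", text).strip()

def long_sentences(text: str, max_words: int = MAX_WORDS) -> List[str]:
    """Sentences worth breaking up before machine translation."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]
```

For example, "So, um, the new dashboard, uh, ships today." becomes "So the new dashboard ships today."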

Phase 2: Execute the Translation

With clean source text, translation becomes straightforward—though quality varies significantly by approach.

Machine Translation (Fast and Affordable):

AI translation services like Google Translate, DeepL, or built-in platform features provide instant translation at zero or minimal cost.

Best practices:

- DeepL typically delivers more natural results than Google Translate for European languages

- ChatGPT or Claude can provide contextual translation if you provide background ("Translate this technical training script from French to English, maintaining a professional but accessible tone")

- Split long documents into manageable chunks for free-tier services with character limits

For straightforward business content, modern machine translation delivers 85-95% quality that requires only minor human refinement.
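For the "terms that shouldn't be translated" noted earlier, one common technique is to mask those terms with placeholders before machine translation and restore them afterward. This is a minimal sketch with hypothetical helpers; DeepL and Google Cloud Translation also offer glossary features that may suit production use better. Check that the placeholders survive translation intact.

```python
import re
from typing import Dict, List, Tuple

def protect_terms(text: str, terms: List[str]) -> Tuple[str, Dict[str, str]]:
    """Replace do-not-translate terms with placeholders such as __TERM0__."""
    mapping: Dict[str, str] = {}
    # Longest terms first, so "Colossyan Creator" is masked before "Colossyan".
    for i, term in enumerate(sorted(terms, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b")
        if pattern.search(text):
            text = pattern.sub(token, text)
            mapping[token] = term
    return text, mapping

def restore_terms(text: str, mapping: Dict[str, str]) -> str:
    """Put the original terms back into the translated text."""
    for token, term in mapping.items():
        text = text.replace(token, term)
    return text
```

Usage: mask a German source sentence, send the masked text to the translation service, then call `restore_terms` on the English result.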

Human-in-the-Loop (Optimal Quality):

The strategic approach: leverage AI speed, apply human expertise where it matters most.

1. Generate initial translation with AI (5 minutes)

2. Have a bilingual reviewer refine for naturalness and accuracy (20-30 minutes)

3. Focus human time on critical sections: opening hook, key messages, calls-to-action

This hybrid delivers near-professional quality at a fraction of traditional translation costs and timelines.

Professional Translation (When Stakes Are Highest):

For mission-critical content where precision is non-negotiable (legal disclaimers, medical information, regulated communications), professional human translation remains appropriate. Use AI to accelerate by providing translators with high-quality first drafts they refine rather than starting from scratch.

Phase 3: Generate English Audio

With your translated English script perfected, create the audio component.

Option A: AI Voice Generation

Modern text-to-speech systems create natural-sounding English audio instantly:

Using standalone TTS services:

- Google Cloud Text-to-Speech, Microsoft Azure AI Speech, or Amazon Polly offer professional quality

- Test multiple voices to find the best fit for your content

- Adjust pacing and emphasis for technical or complex sections
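The standalone services listed above accept SSML (Speech Synthesis Markup Language) for pacing, pauses, and pronunciation, though each supports its own subset and Azure expects extra attributes on the root element. This sketch, with a hypothetical function name, builds a small SSML document. The alias replacement is a plain substring match, so alias keys should be distinct, specific terms.

```python
from typing import Dict, List, Optional
from xml.sax.saxutils import escape, quoteattr

def to_ssml(sentences: List[str], rate: str = "95%", pause_ms: int = 300,
            aliases: Optional[Dict[str, str]] = None) -> str:
    """Build a small SSML document from script sentences.

    - <prosody rate> slows delivery slightly for technical content.
    - <break> inserts a pause between sentences.
    - <sub alias> tells the voice how to say a written term.
    """
    aliases = aliases or {}
    parts = []
    for sentence in sentences:
        text = escape(sentence)  # escape &, <, > so the XML stays valid
        for written, spoken in aliases.items():
            text = text.replace(escape(written),
                                f"<sub alias={quoteattr(spoken)}>{escape(written)}</sub>")
        parts.append(text)
    body = f'<break time="{pause_ms}ms"/>'.join(parts)
    return f'<speak><prosody rate="{rate}">{body}</prosody></speak>'
```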

Using integrated platforms like Colossyan:

- Select from 600+ voices, including professional English voices in different accents (American, British, Australian, etc.)

- Choose voice characteristics matching your content (authoritative, friendly, technical, warm)

- AI automatically handles pacing, pronunciation, and natural inflection

- Generate perfectly synchronized audio in minutes

Option B: Human Voice Recording

For flagship content where authentic human delivery adds value:

- Hire professional English voice talent (costs $200-500 for a 10-minute script)

- Or record in-house if you have fluent English speakers and decent recording equipment

- Provides maximum authenticity but sacrifices the speed and update-ease of AI

Option C: Regenerate with English-Speaking Avatar

The most transformative approach: don't just translate the audio—regenerate the entire video with an English-speaking AI avatar:

With platforms like Colossyan:

1. Upload your English-translated script

2. Select a professional AI avatar (can match original avatar's demographics or choose differently)

3. Generate a complete new video with the avatar speaking fluent English

4. Result: a fully native English video, not obviously a translation

This approach delivers the most immersive experience for English-speaking viewers—they receive content that feels created specifically for them, not adapted from another language.

Phase 4: Synchronize and Finalize

Bring together all elements into a polished final video.

For subtitle-only approach:

- Use free tools like Subtitle Edit or Aegisub to create perfectly timed SRT/VTT files

- Ensure subtitles are readable (appropriate font size, good contrast, strategic positioning)

- Follow language-specific conventions (for English, typically no more than two lines of about 42 characters each per subtitle)

- Test on different devices to ensure legibility
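If you generate subtitle files from a translated script instead of timing them by hand, the SRT format is simple enough to write directly. This sketch (hypothetical helpers) formats `HH:MM:SS,mmm` timestamps, wraps each cue to two lines of 42 characters, and warns about cues that read faster than an assumed 20 characters per second. WebVTT is similar but starts with a `WEBVTT` header and uses a period instead of a comma before the milliseconds.

```python
import textwrap
from typing import List, Tuple

MAX_CHARS_PER_LINE = 42  # common English guideline
MAX_LINES = 2
MAX_CPS = 20             # assumed reading-speed ceiling, characters per second

def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

def build_srt(cues: List[Tuple[float, float, str]]) -> str:
    """cues: (start_s, end_s, text). Wraps text and warns about fast cues."""
    blocks = []
    for i, (start, end, text) in enumerate(cues, start=1):
        lines = textwrap.wrap(text, MAX_CHARS_PER_LINE)
        if len(lines) > MAX_LINES:
            raise ValueError(f"cue {i} needs {len(lines)} lines; split it")
        cps = len(text) / (end - start)
        if cps > MAX_CPS:
            print(f"warning: cue {i} reads at {cps:.0f} chars/s")
        header = f"{i}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n"
        blocks.append(header + "\n".join(lines))
    return "\n\n".join(blocks) + "\n"
```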

For dubbed audio:

- Replace original audio track with new English voice-over using video editors like DaVinci Resolve or Adobe Premiere

- Ensure perfect synchronization with on-screen action, transitions, and visual cues

- Balance audio levels to match any music or sound effects

- Add English subtitles as an optional track for maximum accessibility
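For simple track swaps, a command-line tool such as ffmpeg can do the same job as a full editor. This sketch, using a hypothetical helper and file names, builds an ffmpeg command that copies the original video stream untouched, replaces the audio with the English voice-over, and optionally adds the SRT file as a toggleable subtitle track. `mov_text` is the subtitle codec for MP4 containers; other containers use different codecs.

```python
from typing import List, Optional

def dub_command(video: str, english_audio: str, out: str,
                subtitles: Optional[str] = None) -> List[str]:
    """Build an ffmpeg argument list that swaps in the English audio track."""
    cmd = ["ffmpeg", "-i", video, "-i", english_audio]
    if subtitles:
        cmd += ["-i", subtitles]
    # Video from input 0, audio from input 1.
    cmd += ["-map", "0:v:0", "-map", "1:a:0"]
    if subtitles:
        # Subtitles from input 2 as a soft (toggleable) track tagged as English.
        cmd += ["-map", "2:s:0", "-c:s", "mov_text", "-metadata:s:s:0", "language=eng"]
    cmd += ["-c:v", "copy",                      # no video re-encode
            "-c:a", "aac",
            "-metadata:s:a:0", "language=eng",
            "-shortest",                         # stop at the shorter stream
            out]
    return cmd

# Usage (requires ffmpeg on PATH):
#   import subprocess
#   subprocess.run(dub_command("demo_fr.mp4", "demo_en.wav", "demo_en.mp4", "demo_en.srt"), check=True)
```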

For regenerated avatar videos:

- Review the AI-generated English video for quality and accuracy

- Make any necessary refinements (script edits, pacing adjustments)

- Regenerate if needed (takes minutes, not hours)

- Export in required formats and resolutions

Quality assurance checklist:

- Watch complete video at full speed (don't just spot-check)

- Verify pronunciation of technical terms, names, and acronyms

- Confirm visual sync at key moments

- Test audio levels across different playback systems

- Review on mobile devices if that's where content will be consumed

Phase 5: Optimize and Distribute

Maximize your translated content's impact through strategic optimization and distribution.

SEO optimization:

- Upload English transcripts as webpage content (makes video searchable)

- Create English titles and descriptions optimized for target keywords

- Add relevant tags and categories for platform algorithms

- Include timestamped chapter markers for longer content
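On YouTube, chapter markers come from timestamps in the video description. The platform documents a few rules: the first timestamp is 0:00, there are at least three, and each chapter lasts at least 10 seconds. This sketch (hypothetical helper) formats and checks a chapter list. It cannot check the final chapter's length without the video duration.

```python
from typing import List, Tuple

def chapter_lines(chapters: List[Tuple[int, str]]) -> str:
    """Format (start_seconds, title) pairs as description timestamps."""
    if not chapters or chapters[0][0] != 0:
        raise ValueError("first chapter must start at 0:00")
    if len(chapters) < 3:
        raise ValueError("need at least three chapters")
    starts = [s for s, _ in chapters]
    if any(b - a < 10 for a, b in zip(starts, starts[1:])):
        raise ValueError("chapters must be ascending and at least 10 seconds apart")

    def fmt(s: int) -> str:
        h, rem = divmod(s, 3600)
        m, sec = divmod(rem, 60)
        return f"{h}:{m:02d}:{sec:02d}" if h else f"{m}:{sec:02d}"

    return "\n".join(f"{fmt(s)} {title}" for s, title in chapters)
```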

Platform-specific formatting:

- Create multiple aspect ratios for different platforms (16:9 for YouTube, 1:1 for LinkedIn, 9:16 for Instagram Stories)

- Generate thumbnail images with English text

- Optimize length for platform norms (shorter cuts for social media)
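When you reframe one master video for several platforms, the geometry is a centered crop. This sketch (hypothetical helper) computes the largest centered crop of a frame at a target aspect ratio. Sizes are rounded down to even numbers because the usual 4:2:0 H.264 output requires even dimensions.

```python
from fractions import Fraction
from typing import Tuple

def center_crop(width: int, height: int, ratio_w: int, ratio_h: int) -> Tuple[int, int, int, int]:
    """Return (x, y, w, h) of the largest centered crop at ratio_w:ratio_h."""
    target = Fraction(ratio_w, ratio_h)
    if Fraction(width, height) > target:   # source is wider: trim the sides
        h = height
        w = int(height * target)
    else:                                  # source is taller (or equal): trim top/bottom
        w = width
        h = int(width / target)
    w, h = w - w % 2, h - h % 2            # even dimensions for 4:2:0 video
    return (width - w) // 2, (height - h) // 2, w, h

print(center_crop(1920, 1080, 9, 16))  # (657, 0, 606, 1080)
print(center_crop(1920, 1080, 1, 1))   # (420, 0, 1080, 1080)
```

The result maps onto ffmpeg's `crop=w:h:x:y` filter. A 9:16 crop from 1080p footage is only 606 pixels wide, so for vertical output it is often better to design the scene for 9:16 than to upscale a crop.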

Distribution strategy:

- Publish on platforms where English-speaking audiences congregate

- Include in English-language email campaigns and newsletters

- Embed in English versions of web pages and help centers

- Share in professional communities and forums

Performance tracking:

- Monitor completion rates, engagement, and conversion metrics

- Compare performance of translated vs. original content

- Use insights to refine future translation approaches

- A/B test different translation methods (subtitles vs. dubbing) to identify what resonates
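When you A/B test subtitles against dubbing, check that a difference in completion rates is larger than chance before acting on it. This sketch uses the standard pooled two-proportion z-test, a normal approximation that assumes reasonably large samples. The numbers in the usage line are hypothetical.

```python
from math import erfc, sqrt
from typing import Tuple

def two_proportion_z_test(done_a: int, n_a: int, done_b: int, n_b: int) -> Tuple[float, float]:
    """Compare completion rates of two variants; returns (z, two-sided p-value)."""
    p_a, p_b = done_a / n_a, done_b / n_b
    pooled = (done_a + done_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical: dubbed version 620/1000 completions vs subtitled 560/1000
z, p = two_proportion_z_test(620, 1000, 560, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here z is about 2.73 and p is about 0.006, so a 6-point gap on 1,000 viewers per arm is unlikely to be noise. The same gap on 100 viewers per arm would not be significant.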

This complete workflow—from source preparation through optimized distribution—can be executed in 1-2 days with AI assistance, compared to 2-4 weeks for traditional translation. The efficiency gain makes translating your entire video library practical, not just select flagship content.

Scaling Video Translation Across Your Organization

Translating one video efficiently is valuable. Building systematic capability to translate all appropriate content continuously is transformative. Here's how to scale video translation into a sustainable organizational capability.

Building Translation-First Workflows

The most efficient approach: build translation considerations into content creation from the start, rather than treating it as an afterthought.

Create translatable source content:

- Write scripts in clear, straightforward language (avoid idioms, slang, culturally-specific references that don't translate well)

- Use AI avatars for original content rather than human presenters (makes translation via avatar regeneration seamless)

- Structure content modularly (update individual sections without re-translating entire videos)

- Maintain brand consistency through templates and brand kits

Centralize translation workflows:

Rather than each department or team translating independently:

- Establish clear processes and tool standards

- Create shared libraries of translated assets (glossaries, voice preferences, avatar selections)

- Maintain translation memory (previously translated phrases for consistency)

- Enable team collaboration through platforms with built-in workflow features
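Translation memory tools reuse approved translations for repeated or near-identical segments. This tiny sketch (hypothetical class; the 0.85 similarity threshold is an assumption) returns exact matches first, then the closest fuzzy match from Python's `difflib`. A fuzzy match is a starting point that a reviewer adapts, not a finished translation.

```python
from difflib import SequenceMatcher
from typing import Dict, Optional, Tuple

class TranslationMemory:
    """Stores approved segment translations; exact matches first, then fuzzy."""

    def __init__(self, min_similarity: float = 0.85):
        self.entries: Dict[str, str] = {}
        self.min_similarity = min_similarity

    @staticmethod
    def _norm(text: str) -> str:
        return " ".join(text.lower().split())  # ignore case and extra spaces

    def add(self, source: str, target: str) -> None:
        self.entries[self._norm(source)] = target

    def lookup(self, source: str) -> Optional[Tuple[str, float]]:
        """Return (stored translation, similarity) or None if nothing is close enough."""
        key = self._norm(source)
        if key in self.entries:
            return self.entries[key], 1.0
        best: Optional[str] = None
        best_score = 0.0
        for stored, target in self.entries.items():
            score = SequenceMatcher(None, key, stored).ratio()
            if score > best_score:
                best, best_score = target, score
        if best is not None and best_score >= self.min_similarity:
            return best, best_score
        return None
```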

Colossyan's enterprise features support this centralized approach with brand kits, team workspaces, and approval workflows.

Prioritizing Content for Translation

Not all content has equal translation priority. Strategic organizations segment their video libraries:

Tier 1: Immediate translation

- Customer-facing product content (demos, explainers, tutorials)

- Core training materials essential for all team members

- Marketing content for English-speaking markets

- Compliance and safety content required for operations

Tier 2: Regular translation

- New product announcements and updates

- Recurring communications and updates

- Expanding training library content

- Support and troubleshooting videos

Tier 3: Opportunistic translation

- Archive content with continued relevance

- Secondary marketing materials

- Supplementary training and development content

This tiered approach ensures high-value content is always available in English while building toward comprehensive library translation over time.

Measuring Translation ROI

Justify continued investment by tracking specific metrics:

Efficiency metrics:

- Translation cost per minute of video

- Time from source completion to English version availability

- Number of videos translated per month/quarter

Reach metrics:

- Viewership growth in English-speaking markets

- Engagement rates (completion, interaction, sharing)

- Geographic distribution of viewers

Business impact metrics:

- Lead generation from English-language video content

- Product adoption rates in English-speaking customer segments

- Training completion rates for English-speaking team members

- Support ticket reduction (as English help content improves self-service)
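The efficiency metrics are simple ratios once you log each translation job. This sketch uses a hypothetical job record. For a baseline, the traditional cost quoted earlier ($2,000-5,000 for a 10-minute video) works out to $200-500 per minute. The job numbers in the test are made up.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Dict, List

@dataclass
class TranslationJob:
    minutes: float      # length of the translated video
    cost: float         # total spend for the English version
    source_done: date   # source video finished
    english_live: date  # English version published

def efficiency_metrics(jobs: List[TranslationJob]) -> Dict[str, float]:
    total_minutes = sum(j.minutes for j in jobs)
    return {
        "cost_per_minute": sum(j.cost for j in jobs) / total_minutes,
        "median_turnaround_days": median((j.english_live - j.source_done).days for j in jobs),
        "videos_translated": len(jobs),
    }

def percent_reduction(old_cost_per_min: float, new_cost_per_min: float) -> float:
    """Cost reduction versus a baseline such as $200/min for traditional work."""
    return (old_cost_per_min - new_cost_per_min) / old_cost_per_min * 100
```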

Organizations using AI translation report 5-10x increases in content output with 70-90% cost reduction compared to traditional translation—compelling ROI that justifies scaling investment.

Frequently Asked Questions About Translating Videos to English

What's the Most Cost-Effective Way to Translate Videos to English?

For most business content, AI-powered translation with strategic human review delivers the best cost-quality balance:

Approach: Use AI for transcription, translation, and voice generation, then have a fluent English speaker review for 20-30 minutes to catch errors and improve naturalness.

Cost: Typically $20-100 per video depending on length and platform fees, versus $2,000-5,000 for traditional professional services.

Quality: Achieves 90-95% of professional translation quality at a fraction of the cost.

For the absolute lowest cost, fully automated AI translation (no human review) works acceptably for internal or low-stakes content, though quality is variable.

How Accurate Is AI Translation for Business Video Content?

Modern AI translation delivers 85-95% accuracy for straightforward business content. Accuracy is highest for:

- Common language pairs (major languages to English)

- Standard business terminology

- Clear, well-structured source scripts

- Informational/educational content

Accuracy drops for:

- Highly specialized jargon or industry-specific terminology

- Idioms, cultural references, humor

- Legal or medical content requiring precision

- Ambiguous phrasing in source material

The strategic approach: let AI handle the bulk translation quickly, then apply focused human review to critical sections and specialized terminology.

Should I Use Subtitles or Replace the Audio Entirely?

This depends on your content type and audience context:

Choose subtitles when:

- The original speaker's authenticity is important (interviews, testimonials, expert content)

- Viewers need to focus on complex on-screen visuals

- Content will be consumed on social media (where much viewing happens with the sound off)

- You want to preserve the cultural authenticity of the original language

Choose dubbed audio when:

- Comprehension and retention are paramount (training, education)

- Engagement and immersion matter (marketing, storytelling)

- The original speaker isn't prominently on camera

- Professional polish is important

Many organizations create both versions, letting viewers choose their preference.

Can I Translate One Video Into Multiple Languages Simultaneously?

Yes, and this is where AI translation delivers its largest efficiency gains. With platforms like Colossyan:

1. Translate your source script into multiple target languages (AI handles this in minutes)

2. Generate videos for each language at the same time (in parallel, not one after another)

3. Create 10 language versions in the time traditional methods would produce one

This is transformative for global organizations that previously couldn't afford comprehensive localization. A training video can launch globally in all needed languages on the same day, rather than rolling out language-by-language over months.
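Parallel generation is a fan-out pattern: each language version is an independent job, so all of them can be submitted at once. The sketch below shows the pattern with Python's standard `concurrent.futures`. `render_language_version` is a hypothetical placeholder, not a Colossyan API call. Threads suit this case because the real work would mostly be waiting on a remote rendering service.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def render_language_version(script_id: str, language: str) -> str:
    """Hypothetical placeholder for 'translate script and render video' in one language.

    A real implementation would call your video platform; here it just
    returns a fake output file name so the sketch runs on its own.
    """
    return f"{script_id}_{language}.mp4"


def render_all(script_id: str, languages: List[str], max_workers: int = 8) -> Dict[str, str]:
    """Submit every language version at once instead of one after another."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {lang: pool.submit(render_language_version, script_id, lang) for lang in languages}
        # .result() waits for each job; total time is roughly that of the slowest job.
        return {lang: fut.result() for lang, fut in futures.items()}


if __name__ == "__main__":
    print(render_all("onboarding-101", ["en", "es", "fr", "de"]))
```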

How Do I Ensure Translated Content Maintains Brand Voice?

Maintaining brand consistency across languages requires strategic planning:

Establish translation guidelines:

- Document tone, formality level, and personality for your brand in English specifically

- Provide example translations (good and bad) for reference

- Define how to handle brand names, product names, and taglines

Use consistent AI voices:

- Select specific English voices that match your brand personality

- Use the same voices across all English content for consistency

- Document voice selections in brand guidelines

Leverage platform brand kits:

- Tools like Colossyan let you save brand colors, fonts, logos, and voice preferences

- Apply automatically to every video for visual and auditory consistency

Implement review processes:

- Have your English-speaking brand or marketing team review translations before publication

- Check that tone, personality, and key messages align with brand guidelines

- Create feedback loops to continuously improve translation quality

Ready to Scale Your English Video Translation?

You now understand how to translate videos to English efficiently, which approaches deliver the best ROI, and how leading organizations are building scalable multilingual video strategies. The transformation from traditional translation bottlenecks to AI-powered workflows isn't just about cost savings—it's about making comprehensive video localization operationally feasible.

Colossyan Creator offers the most comprehensive solution for video translation, with auto-translation into 80+ languages, 600+ natural AI voices including extensive English voice options, and the unique ability to regenerate entire videos with English-speaking avatars. For global organizations, this integrated capability delivers ROI that standalone translation services simply can't match.

The best way to understand the efficiency gains is to translate actual content from your library. Experience firsthand how workflows that traditionally took weeks can be completed in hours.

Ready to make your video content globally accessible? Start your free trial with Colossyan and translate your first video to English in minutes, not weeks.

4 Best AI Video Generator Apps (Free & Paid Options Compared)

This guide compares four AI video generator apps that people are actually using today: Invideo AI, PixVerse, VideoGPT, and Adobe Firefly. I looked at user ratings, real-world feedback, speed, language coverage, avatar and lip-sync capability, template depth, safety for commercial use, collaboration options, and value for money. I also included practical workflows for how I pair these tools with Colossyan to create on-brand, interactive training that plugs into an LMS and can be measured.

If you want my quick take: use a generator for visuals, and use Colossyan to turn those visuals into training with narration, interactivity, governance, analytics, and SCORM. Most teams need both.

Top picks by use case

- Best for quick explainers and UGC ads: Invideo AI

- Best for viral effects and fast text/image-to-video: PixVerse

- Best for anime styles and frequent posting: VideoGPT

- Best for enterprise-safe generation and 2D/3D motion: Adobe Firefly

- Where Colossyan fits: best for L&D teams needing interactive, SCORM-compliant training with analytics, brand control, and document-to-video scale

1) Invideo AI - best for speedy explainers and UGC ads

Invideo AI is built for quick turnarounds. It handles script, visuals, and voiceovers from a simple prompt, supports 50+ languages, and includes AI avatars and testimonials. On mobile, it holds a strong rating: 4.6 stars from 24.9K reviews and sits at #39 in Photo & Video. On the web, the company reports a large base: 25M+ customers across 190 countries.

What I like:

- Fast to a decent first draft

- Good for product explainers and short social promos

- Built-in stock library and collaboration

What to watch:

- Users report performance bugs and question whether the pricing is justified given the stability issues

Example to try: “Create a 60-second product explainer in 50+ languages, with an AI-generated testimonial sequence for social ads.”

How to use Colossyan with it at scale:

- Convert product one-pagers or SOP PDFs into on-brand videos with Doc2Video, then standardize design with Brand Kits.

- Fix tricky names and jargon using Pronunciations so narration is accurate.

- Add quizzes and branching for enablement or compliance, then export SCORM, push it to the LMS, and track completion with Analytics.

- Manage multi-team production using Workspace Management, shared folders, and inline comments.
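Text-to-speech engines often handle tricky names with SSML's `<sub alias="...">` element, which tells the engine to speak an alias in place of the written term. This source does not say whether Colossyan's Pronunciations feature uses SSML internally. Treat the Python sketch below as an illustration of the general technique. The `lexicon` entries are hypothetical examples.

```python
import re
from typing import Dict
from xml.sax.saxutils import escape, quoteattr


def apply_pronunciations(script: str, lexicon: Dict[str, str]) -> str:
    """Wrap known terms in SSML <sub> tags so a TTS engine speaks the alias.

    `lexicon` maps a written term to how it should be spoken. Longer terms are
    tried first so a phrase like "SQL Server" wins over "SQL". Matching uses
    regex word boundaries, so terms that start or end with punctuation
    (for example "C++") would need a different pattern.
    """
    if not lexicon:
        return f"<speak>{escape(script)}</speak>"
    terms = sorted(lexicon, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b")
    # With one capturing group, re.split puts the matched terms at odd indices.
    parts = pattern.split(script)
    out = []
    for i, part in enumerate(parts):
        if i % 2 == 1:
            out.append(f"<sub alias={quoteattr(lexicon[part])}>{escape(part)}</sub>")
        else:
            out.append(escape(part))  # escape &, <, > in ordinary script text
    return "<speak>" + "".join(out) + "</speak>"


if __name__ == "__main__":
    print(apply_pronunciations("Export SCORM from SQL.", {"SQL": "sequel", "SCORM": "skorm"}))
```

Keeping the lexicon in one shared file is what makes pronunciation consistent across every video that uses it.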

2) PixVerse - best for trending effects and rapid text/image-to-video

PixVerse is big on speed and effects. It’s mobile-first, offers text/image-to-video in seconds, and features viral effects like Earth Zoom and Old Photo Revival. It has 10M+ downloads with a 4.5 rating from 3.06M reviews.

What I like:

- Very fast generation

- Fun, trend-friendly outputs for TikTok and shorts

What to watch:

- Daily credit limits

- Face details can drift

- Some prompt-to-output inconsistency

- Users report per-video credit cost rose from 20 to 30 without clear notice

Example to try: “Revive old employee photos into a short montage, then add Earth Zoom-style transitions for a culture reel.”

How to use Colossyan with it at scale:

- Embed PixVerse clips into a Colossyan lesson, add an avatar to deliver policy context, and layer a quick MCQ for a knowledge check.

- Localize the whole lesson with Instant Translation while keeping layouts and timings intact.

- Export SCORM to track pass/fail and time watched in the LMS; Analytics shows me average quiz scores.

3) VideoGPT - best for anime styles, cinematic looks, and frequent posting

VideoGPT leans into stylized content, including anime and cinematic modes. It reports strong usage: 1,000,000+ videos generated. The App Store listing shows a 4.8 rating from 32.4K reviews. The pricing is straightforward for frequent creators: $6.99 weekly “unlimited” or $69.99 yearly, with watermark removal on premium.

What I like:

- Versatile aesthetics (anime, cinematic) and easy volume posting

- Monetization-friendly claims (no copyright flags) on the website

What to watch:

- Watermarks on free plans

- Some technical hiccups mentioned by users

Example to try: “Produce an anime-styled explainer for a product feature and post daily shorts on TikTok and YouTube.”

How to use Colossyan with it at scale:

- Wrap VideoGPT clips in consistent intros/outros using Templates and Brand Kits, so everything looks on-brand.

- Keep terms consistent with cloned Voices and Pronunciations.

- Add branching to simulate decisions for role-based training, then export a SCORM package for LMS tracking.

4) Adobe Firefly - best for enterprise-safe 1080p, 2D/3D motion, and B-roll

Firefly’s pitch is quality and safety. It generates 1080p video from text or image prompts, supports 2D/3D motion, and focuses on commercially safe training data drawn from licensed and public-domain materials.

What I like:

- Clear stance on legality and brand safety

- Strong for turning static assets into cinematic motion

What to watch:

- You may need to add voice and lip-sync elsewhere for end-to-end production

- Confirm the latest token/credit model

Example to try: “Transform a static hardware product photo set into 1080p cinematic B-roll for a launch deck.”

How to use Colossyan with it at scale:

- Import B-roll into Colossyan, add avatar narration, then layer quizzes and branching to turn marketing visuals into interactive training.

- Translate the module with one click and export SCORM 1.2 or 2004 for the LMS.

Honorable mentions and what benchmarks say

Recent comparisons point to several strong tools beyond this list. A standardized 10-tool test highlights filmmaker controls in Kling, realistic first frames in Runway Gen-4, and prompt accuracy in Hailuo. It also notes cost differences, like plans from $8–$35 monthly and per-minute outputs such as $30/min for Google Veo 2.

Many platforms still lack native lip-sync and sound, which is why pairing tools is common. Practical takeaway: plan a multi-tool stack. Use one tool for visuals, then finish inside Colossyan for narration, interactivity, analytics, and LMS packaging.

Free vs paid: what to know at a glance

- Invideo AI: free version with weekly limits; robust paid tiers.

- PixVerse: daily credits constrain throughput; users report credit-per-video changes.

- VideoGPT: free plan (up to 3 videos/day), paid at $6.99 weekly or $69.99 yearly.

- Adobe Firefly: commercially safe approach; confirm evolving token/credit structure.

Where Colossyan fits: the L&D-focused AI video platform

If your videos are for training, you need more than a generator. You need accurate narration, interactivity, analytics, and LMS compatibility. This is where Colossyan really shines.

- Document/PPT/PDF to video: Turn HR policies, compliance docs, or SOPs into structured, scene-by-scene videos with Doc2Video.

- Interactive learning: Add Multiple Choice Questions and Branching for decision-based scenarios, and track scores and completion.

- SCORM export and analytics: Export SCORM 1.2/2004 to the LMS, then measure pass/fail, watch time, and scores; I export CSVs for reports.

- Governance at enterprise scale: Manage roles and permissions with Workspace Management, organize shared folders, and collect comments in one place.

- Brand control: Enforce Brand Kits, Templates, and a central Content Library so everything stays consistent.

- Precision speech: Fix brand name and technical term pronunciation with Pronunciations and rely on cloned voices for consistent delivery.

- Global rollout: Use Instant Translation to replicate the full video (script, on-screen text, and interactions) in new languages while preserving timing.
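In SCORM 1.2, pass/fail tracking comes down to the value a course reports for `cmi.core.lesson_status` (valid values include "passed", "failed", "completed", and "incomplete"). The Python sketch below shows one simplified decision rule. In a real package this logic runs as JavaScript inside the course, and how Colossyan's export implements it is not described here. The function name and the "finished" flag are assumptions for illustration.

```python
from typing import Optional


def scorm12_lesson_status(finished: bool, raw_score: Optional[float], mastery_score: Optional[float]) -> str:
    """Pick a SCORM 1.2 cmi.core.lesson_status value for one learner attempt.

    Simplified rule: unfinished attempts stay "incomplete"; a module with a
    pass mark reports "passed" or "failed" once finished; a module without a
    pass mark (or without a score) reports "completed".
    """
    if not finished:
        return "incomplete"
    if mastery_score is None or raw_score is None:
        return "completed"
    return "passed" if raw_score >= mastery_score else "failed"


if __name__ == "__main__":
    print(scorm12_lesson_status(True, 85, 80))
    print(scorm12_lesson_status(True, 70, 80))
```

The pass mark you set when exporting plays the role of `mastery_score` here. The LMS then reports the resulting status alongside the raw score.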

Example workflows you can reuse

- Social-to-training pipeline: Generate a 15-second PixVerse effect (Old Photo Revival). Import into Colossyan, add an avatar explaining the context, include one MCQ, export SCORM, and track completions.

- Product launch enablement: Create cinematic B-roll with Firefly. Build a step-by-step walkthrough in Colossyan using Doc2Video, add branching for common objections, then localize with Instant Translation.

- Anime explainer series: Produce daily intros with VideoGPT. Standardize your episodes in Colossyan using Brand Kits, cloned Voices, Pronunciations, and use Analytics to spot drop-offs and adjust pacing.

Buyer’s checklist for 2025

- Do you need commercial safety and clear licensing (e.g., Firefly)?

- Will you publish high volume shorts and need fast, trendy styles (e.g., PixVerse, VideoGPT)?

- Are your videos for training with LMS tracking, quizzes, and governance (Colossyan)?

- How will you handle pronunciation of brand terms and acronyms at scale (Colossyan’s Pronunciations)?

- Can your team keep assets on-brand and consistent across departments (Colossyan’s Brand Kits and Templates)?

- What’s your budget tolerance for credit systems vs unlimited plans, and do recent changes impact predictability?

Top 10 Employee Development Training Strategies to Boost Skills in 2025

Employee development is still one of the strongest levers you have for retention, performance, and morale. In LinkedIn’s research, 93% of employees said they would stay longer at a company that invests in their careers, and companies with high internal mobility retain employees for twice as long. A strong learning culture also correlates with 92% more product innovation and 52% higher productivity. Yet 59% of employees report receiving no workplace training. If you want measurable impact in 2025, close that gap with focused strategy and simple execution.

Here are 10 practical strategies I recommend, plus how we at Colossyan can help you implement them without heavy production overhead.

Strategy 1 - build competency-based learning paths

Why it matters:

- 89% of best-in-class organizations define core competencies for every role. Clarity drives better training and fairer evaluation.

What it looks like:

- Map role-level competencies. Align courses, practice, and assessments to those competencies. Review quarterly with managers.

Example you can use:

- A sales org defines competencies for discovery, negotiation, and compliance. Each rep follows a leveled path with skill checks.

How we help at Colossyan:

- We use Doc2Video to turn competency frameworks and SOPs into short, on-brand video modules fast.

- We add interactive quizzes aligned to each competency and export as SCORM with pass marks for LMS tracking.

- Our Analytics show where learners struggle so you can refine the path and close gaps.

Strategy 2 - make internal mobility and career pathways visible

Why it matters:

- Companies with high internal mobility retain employees twice as long, and 93% of employees say they would stay longer at a company that invests in their careers.

What it looks like:

- Publish clear career paths. Show adjacent roles, skills required, and 6–12 month transition steps. Add an internal marketplace of gigs and mentors.

Example you can use:

- “Day-in-the-life” videos for product marketing, solutions engineering, and customer success. Each shows required skills and a learning plan.

How we help at Colossyan:

- We record leaders as Instant Avatars so they can present career paths without repeated filming.

- With Conversation Mode, we simulate informational interviews between employees and hiring managers.

- Brand Kits keep all career content consistent across departments.

Strategy 3 - run a dual-track model: development vs. training

Why it matters:

- Employee development is long-term and growth-focused; training is short-term and task-based. You need both.

What it looks like:

- Split your roadmap: short-term role training (tools, compliance) and long-term development (leadership, cross-functional skills).

Example you can use:

- Quarterly “role excellence” training plus a 12-month development plan toward leadership or specialist tracks.

How we help at Colossyan:

- Templates let us standardize “how-to” and compliance content.

- SCORM exports track completion and scores on the training track.

- For development, we build branching scenarios that require decisions and reflection.

Strategy 4 - scale microlearning for just‑in‑time skills

Why it matters:

- Short modules increase uptake. The University of Illinois offers an “Instant Insights” microlearning series with 5–20 minute modules for flexible learning (source).

What it looks like:

- Build a library of 5–10 minute videos, each targeting one outcome (e.g., “Handle objections with the XYZ framework”).

Example you can use:

- A “Power Skills”-style certification delivered in 3-hour bundles made of 10-minute micro modules.

How we help at Colossyan:

- PPT/PDF Import turns slide decks into short scenes; we add avatars and timed text for quick micro-courses.

- We reuse graphics via the Content Library across a series.

- Analytics highlight drop-off points so we shorten scenes or add interactions.
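Finding drop-off points is a small calculation once you know how many viewers started each scene. The Python sketch below assumes a hypothetical data shape: a list of per-scene viewer counts, for example derived from an analytics CSV export. It ranks scenes by the share of viewers who leave before the next scene begins.

```python
from typing import List, Tuple


def biggest_drop_offs(scene_viewers: List[int], top_n: int = 3) -> List[Tuple[int, float]]:
    """Return (scene_number, drop_fraction) for the scenes that lose the most viewers.

    scene_viewers[i] is the number of viewers who started scene i + 1
    (hypothetical data shape). A scene's drop is the share of its viewers
    who never start the next scene, so the final scene is not scored.
    """
    drops = []
    for i in range(len(scene_viewers) - 1):
        started = scene_viewers[i]
        if started == 0:
            continue  # nobody reached this scene, so there is nothing to measure
        lost = started - scene_viewers[i + 1]
        drops.append((i + 1, lost / started))
    drops.sort(key=lambda pair: pair[1], reverse=True)
    return drops[:top_n]


if __name__ == "__main__":
    for scene, drop in biggest_drop_offs([1000, 950, 600, 580, 400], top_n=2):
        print(f"Scene {scene}: {drop:.0%} of viewers leave before the next scene")
```

Scenes at the top of this list are the first candidates to shorten, split, or break up with an interaction.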

Strategy 5 - double down on power skills and dialogue training

Why it matters:

- Programs like “Power Skills at Illinois” and “Crucial Conversations for Mastering Dialogue” (14-hour interactive) improve communication, teamwork, and leadership (source). These skills lift performance across roles.

What it looks like:

- Scenario-based role plays for high-stakes conversations: feedback, conflict, stakeholder alignment.

Example you can use:

- A branching scenario where a manager addresses performance concerns. Learners choose responses, see consequences, and retry.

How we help at Colossyan:

- Conversation Mode shows realistic dialogue with multiple avatars.

- Branching flows simulate decisions and outcomes; we track scores for mastery.

- Pronunciations ensure your brand and product names are said correctly.
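Under the hood, a branching scenario is a small directed graph: each node is a moment in the conversation, and each choice is an edge to another node with a score attached. The Python sketch below models the performance-conversation example that way. The node names, dialogue, and point values are hypothetical, and this is not Colossyan's internal format.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Choice:
    label: str
    next_node: str  # id of the node this choice leads to
    points: int = 0  # contribution to the mastery score


@dataclass
class Node:
    prompt: str
    choices: List[Choice] = field(default_factory=list)  # empty list means an ending


def play(nodes: Dict[str, Node], start: str, picks: List[int]) -> int:
    """Walk the scenario using the learner's choice indices and return the total score."""
    current, score = start, 0
    for pick in picks:
        node = nodes[current]
        if not node.choices:
            break  # reached an ending; ignore any extra picks
        choice = node.choices[pick]
        score += choice.points
        current = choice.next_node
    return score


SCENARIO = {
    "open": Node("Your report has missed two deadlines. How do you start?", [
        Choice("Ask what's getting in the way", "listen", 2),
        Choice("List the missed deadlines", "defensive", 0),
    ]),
    "listen": Node("They mention unclear priorities.", [
        Choice("Agree on priorities together", "end_good", 2),
        Choice("Tell them to work harder", "end_bad", 0),
    ]),
    "defensive": Node("They become defensive.", [
        Choice("Pause and ask an open question", "listen", 1),
    ]),
    "end_good": Node("Outcome: a clear plan you both own."),
    "end_bad": Node("Outcome: the issue is likely to recur."),
}

if __name__ == "__main__":
    print(play(SCENARIO, "open", [0, 0]))     # best path
    print(play(SCENARIO, "open", [1, 0, 0]))  # recovers after a weak opening
```

Because a retry is just another walk through the same graph, learners can see the consequences of different choices and compare scores.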

Strategy 6 - empower self-directed learning with curated academies

Why it matters:

- A survey of 1,000+ US employees found self-directed learning and career development training are the most appealing for reskilling.

- The University of Illinois gives staff free access to 170+ Coursera courses and 1,200+ LinkedIn Learning lessons (source).

What it looks like:

- A role- and goal-based library with suggested paths and electives; learners choose modules and timing.

Example you can use:

- A “Data Fluency Academy” with beginner/intermediate/advanced tracks and capstone demos.

How we help at Colossyan:

- Instant Translation creates language variants while keeping layouts intact.

- Voices and cloned voices personalize narration for different regions or leaders.

- Workspace Management lets admins assign editors and viewers per academy track.

Strategy 7 - close the loop with data, feedback, and iteration

Why it matters:

- Employees are 12x more likely to be engaged when they see action on their feedback.

- Skills gaps can cost a median S&P 500 company roughly $163M annually.

What it looks like:

- Post-course surveys, pulse polls, and rapid updates. Fix the modules where analytics show confusion.

Example you can use:

- After a policy change video, collect questions and publish an updated module addressing the top 5 within 48 hours.

How we help at Colossyan:

- Analytics track plays, watch time, and quiz scores; we export CSV to link learning with performance.

- Commenting enables SME and stakeholder review directly on scenes for faster iteration.

- Doc2Video regenerates updates from revised documents in minutes.

Strategy 8 - use AI to accelerate content creation and updates

Why it matters:

- Marsh McLennan uses digital tools to boost productivity for 20,000+ employees, and wider AI adoption will increase the need for AI upskilling. Faster production cycles matter.

What it looks like:

- New training in hours, not weeks. Monthly refreshes where tools and policies change.

Example you can use:

- An “AI essentials” onboarding series refreshed monthly as tools evolve.

How we help at Colossyan:

- Prompt2Video builds first drafts from text prompts; we edit with AI to shorten, fix tone, and add pauses.

- Brand Kits apply your identity at scale; Templates maintain visual quality without designers.

- Media features add screen recordings and stock to demonstrate tools clearly.

Strategy 9 - train in the flow of work with digital guidance

Why it matters:

- Digital Adoption Platforms guide users in-app. Training in the workflow reduces errors and speeds proficiency (source).

What it looks like:

- Embedded short videos and step-by-step guides inside the tools people use daily.

Example you can use:

- A CRM rollout supported by 90-second “how-to” clips on the intranet and LMS, plus in-app walkthroughs.

How we help at Colossyan:

- We export MP4 or audio-only files for intranet and in-app embeds, and SCORM packages for LMS tracking with pass/fail criteria.

- Screen Recording captures software steps; we add avatar intros for clarity.

- Transitions and animation markers time highlights to on-screen actions.

Strategy 10 - localize for a global, inclusive workforce

Why it matters:

- Global teams need multilingual, accessible content to ensure equitable development and adoption.

What it looks like:

- Consistent core curricula translated and adapted with local examples, formats, and voices.

Example you can use:

- Safety training in Spanish, French, and German with region-specific regulations.

How we help at Colossyan:

- Instant Translation adapts scripts, on-screen text, and interactions while keeping animation timing.

- Multilingual avatars and Voices localize narration; Pronunciations handle place and product names.

- We export captions (SRT/VTT) for accessibility and compliance.
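An SRT caption file is plain text: a cue number, a "start --> end" timestamp line in HH:MM:SS,mmm format, the caption text, and a blank line. Colossyan exports these files itself. The Python sketch below only shows what the format contains, for example if you need to generate or check captions elsewhere. The cue data is a hypothetical example.

```python
from typing import List, Tuple


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: List[Tuple[float, float, str]]) -> str:
    """Build an SRT file body from (start_seconds, end_seconds, text) cues."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    print(to_srt([(0, 2.5, "Welcome to safety training."), (2.5, 5, "Let's begin.")]))
```

WebVTT (.vtt) is nearly identical. It starts with a "WEBVTT" header line and uses a period instead of a comma before the milliseconds.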

Measurement framework and KPIs

- Participation and completion rates by role and location (SCORM/LMS + Colossyan Analytics).

- Quiz performance and retry rates aligned to competencies.

- Time to proficiency for new tools; reduction in errors or rework.

- Internal mobility rate; promotions and lateral moves within 12 months.

- Engagement after feedback cycles (pulse survey lift).

- Business outcomes tied to learning culture, such as productivity and innovation velocity, measured against Deloitte's benchmarks on innovation and productivity.

How to set up measurement with Colossyan:

- Set pass marks for interactive modules in SCORM; export and connect to your LMS dashboard.

- Use Analytics to identify high drop-off scenes; adjust microlearning length and interactions.

- Tag videos by competency or program in folders for faster reporting.
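Once results are tagged by competency, per-competency reporting is a short aggregation. The Python sketch below reads an exported CSV and computes completion rate and average quiz score for each competency. The column names (learner, competency, completed, score) are hypothetical, so map them to whatever your LMS or analytics export actually contains.

```python
import csv
import io
from collections import defaultdict
from typing import Dict


def summarize_by_competency(csv_text: str) -> Dict[str, Dict[str, float]]:
    """Completion rate and average quiz score per competency tag.

    Assumes hypothetical columns: learner, competency, completed (yes/no), score.
    The average covers every row, including unfinished attempts.
    """
    totals = defaultdict(lambda: {"learners": 0, "completed": 0, "score_sum": 0.0})
    for row in csv.DictReader(io.StringIO(csv_text)):
        bucket = totals[row["competency"]]
        bucket["learners"] += 1
        bucket["completed"] += int(row["completed"].strip().lower() == "yes")
        bucket["score_sum"] += float(row["score"])
    return {
        competency: {
            "completion_rate": b["completed"] / b["learners"],
            "avg_score": b["score_sum"] / b["learners"],
        }
        for competency, b in totals.items()
    }


if __name__ == "__main__":
    sample = (
        "learner,competency,completed,score\n"
        "ana,negotiation,yes,85\n"
        "ben,negotiation,no,60\n"
        "cy,discovery,yes,92\n"
    )
    for competency, stats in summarize_by_competency(sample).items():
        print(competency, stats)
```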

Examples you can adapt (from the research above)

- Career investment and retention: Reference LinkedIn's 93% figure and the finding that high internal mobility doubles retention in a short HR explainer delivered by an Instant Avatar.

- Best-in-class competency clarity: Build a competency library series and include a quiz per competency; cite the 89% best-in-class stat. Export via SCORM.

- Microlearning in practice: Mirror Illinois’ “Instant Insights” with 10-minute modules accessible on any device (source).

- Learning culture ROI: Cite Deloitte's 92% innovation and 52% productivity figures, plus the $163M skills gap cost, in a data-focused update for executives.

- Self-directed appeal: Use a choose-your-path branching video and nod to survey data showing self-directed learning is most appealing.

Suggested visuals and video ideas

- 60-second “What competencies look like here” video per role using avatars and on-screen text.

- Branching conversation role-play for crucial conversations with score tracking.

- Microlearning series on core tools using Screen Recording with avatar intros.

- Localized safety or compliance module translated via Instant Translation; export captions for accessibility.

- “Choose your reskilling journey” interactive video that matches learner interests.


One final point. Don’t treat development as a perk.

Employees leave when they can’t see progress: 63% cited lack of advancement as a top reason for quitting. Show clear paths.

Build competency clarity. Meet people in the flow of work. And iterate based on data and feedback.

If you do that, the retention and productivity gains will follow.

How To Create Professional AI Talking Avatars Instantly

When you need an AI talking avatar for business video content, you're looking to solve a persistent production challenge: creating professional, presenter-led videos without the logistical complexity, scheduling constraints, or costs of working with human talent. Traditional video production centers on human presenters, which means coordinating schedules, managing multiple takes, editing around mistakes, and starting from scratch whenever content needs updating. What if you could generate polished, professional presenter videos on demand, in any language, updated in minutes rather than weeks?

AI talking avatars represent one of the most transformative applications of artificial intelligence in enterprise content creation. These photorealistic digital presenters can deliver any scripted content with natural movements, appropriate expressions, and professional polish—enabling organizations to scale video production in ways previously impossible. Platforms like Colossyan demonstrate how AI talking avatars can serve as the foundation of modern video strategies for training, communications, and marketing. This guide explores exactly how AI talking avatars work, where they deliver maximum business value, and how to deploy them strategically for professional results.

Understanding AI Talking Avatar Technology

AI talking avatars are sophisticated digital humans created through multiple AI systems working in concert.

The Technology Stack

3D Facial Modeling:

High-resolution scanning of real human faces creates detailed 3D models preserving natural features, skin textures, and proportions. Professional platforms like Colossyan work with real models to create avatar libraries, ensuring photorealistic quality.

Natural Language Processing:

AI analyzes your script to understand meaning, sentiment, and structure—informing how the avatar should deliver the content, where emphasis should fall, and what emotional tone is appropriate.

Advanced Text-to-Speech:

Neural networks generate natural-sounding speech from text—far beyond robotic TTS. Modern systems understand context, adjust intonation appropriately, and create voices virtually indistinguishable from human speakers.

Facial Animation AI:

The most sophisticated component: AI drives the avatar's facial movements based on generated speech:

- Lip synchronization: Precisely matched to phonemes for natural speech appearance

- Micro-expressions: Subtle eyebrow movements, natural blinking, small facial adjustments

- Head movements: Natural gestures that emphasize points or convey engagement

- Emotional expression: Facial features adjust to match content tone (serious for warnings, warm for welcomes)
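A classic way to drive lip sync is to map each phoneme in the generated speech to a viseme, meaning a mouth shape, and keyframe those shapes at the phoneme timings. The Python sketch below illustrates that idea with a deliberately tiny, hypothetical grouping of ARPAbet phonemes. It is not how any particular platform, Colossyan included, implements lip sync. Many modern systems instead use neural networks that predict facial motion directly from audio.

```python
from typing import List, Tuple

# Simplified, illustrative grouping of ARPAbet phonemes into mouth shapes.
# Production systems use larger, engine-specific viseme sets.
VISEME_OF = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "TH": "tongue_teeth", "DH": "tongue_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "round", "UW": "round", "W": "round",
    "IY": "wide", "EH": "wide",
}


def to_viseme_track(phonemes: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Turn (phoneme, start_time) pairs into (viseme, start_time) keyframes.

    ARPAbet stress digits (e.g. "AA1") are stripped first. Unknown phonemes
    fall back to a neutral shape, and consecutive duplicates are merged so
    the same mouth pose is not re-triggered.
    """
    track: List[Tuple[str, float]] = []
    for phoneme, start in phonemes:
        viseme = VISEME_OF.get(phoneme.rstrip("012"), "neutral")
        if not track or track[-1][0] != viseme:
            track.append((viseme, start))
    return track


if __name__ == "__main__":
    # "mom": M AA M, with hypothetical timings in seconds
    print(to_viseme_track([("M", 0.0), ("AA1", 0.08), ("M", 0.2)]))
```

Micro-expressions, blinks, and head movement are layered on top of this mouth track, which is why lip shapes alone do not make an avatar look natural.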

Real-Time Rendering:

All elements—animated face, selected background, brand elements—are composited into final video with proper lighting and professional polish.
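Compositing an avatar over a background typically relies on the standard "over" operator. With straight (non-premultiplied) alpha, foreground color $C_f$, foreground opacity $\alpha_f$, and an opaque background $C_b$, each output pixel is:

```latex
C_{\text{out}} = \alpha_f \, C_f + (1 - \alpha_f) \, C_b
```

For example, a soft hair-edge pixel with $\alpha_f = 0.25$ takes a quarter of its color from the avatar and three quarters from the background. Whether a given platform composites exactly this way is an assumption.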

From Uncanny Valley to Natural Presence

Early AI avatars suffered from the "uncanny valley" problem—they looked almost human but were unsettling because small imperfections screamed "artificial."

Modern AI talking avatars have largely overcome this:

- Natural micro-expressions make faces feel alive

- Appropriate pausing and breathing create realistic delivery

- Varied head movements prevent robotic stiffness

- High-quality rendering ensures visual polish

The result: digital presenters viewers accept as professional and natural, even when recognizing they're AI-generated.

Market Growth Signals Real Value

The AI avatar market was valued at USD 4.8 billion in 2023 and is projected to reach USD 30.5 billion by 2033, a compound annual growth rate (CAGR) of 20.4%. This growth reflects enterprises discovering that AI talking avatars solve real operational problems: eliminating production bottlenecks, ensuring consistency, making updates trivial, and scaling content without a matching increase in production effort.
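As a quick sanity check on the growth figure, CAGR compounds the starting value over the 10 years from 2023 to 2033:

```latex
\text{CAGR} = \left(\frac{V_{2033}}{V_{2023}}\right)^{1/10} - 1
            = \left(\frac{30.5}{4.8}\right)^{1/10} - 1
            \approx 6.354^{0.1} - 1
            \approx 0.203
```

That works out to roughly 20.3% a year, in line with the reported 20.4%. The small gap may come from rounding in the published market values.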

Strategic Applications for AI Talking Avatars

AI talking avatars aren't universally applicable—they excel in specific scenarios while remaining unsuitable for others. Strategic deployment maximizes value.

Enterprise Training and L&D

The killer application. Training content demands consistency, requires frequent updates, and must scale globally, which is exactly where AI talking avatars excel.

How avatars transform training:

- Consistency: Every learner experiences identical, professional delivery

- Update agility: Changed a process? Update the script and regenerate in 30 minutes

- Multilingual scaling: Same avatar presents in 80+ languages with appropriate voices

- Modular structure: Update individual modules without re-recording entire programs

Organizations using AI talking avatars for training report 5-10x more content produced and 4x more frequent updates compared to traditional video training.

Internal Communications

Velocity without executive time investment. Communications need speed and consistency; AI talking avatars deliver both.

Applications:

- Regular company updates (quarterly results, strategic initiatives)

- Policy and process announcements

- Departmental communications

- Crisis or urgent messaging

Create custom avatars representing leadership or communications teams, enabling professional video messaging on demand without scheduling bottlenecks.

Product Demonstrations and Marketing

Content volume at scale. Marketing needs video for every product, feature, use case, and campaign, at volumes traditional production can't sustain.

Applications:

- Product explainer videos

- Feature demonstrations

- Use case showcases

- Social media content series

Test multiple variations (different avatars, messaging approaches, content structures) rapidly—impossible with human presenter coordination.

Customer Education and Support

Self-service enablement. Customers prefer video explanations, but creating comprehensive libraries is resource-intensive.

Applications:

- Getting started tutorials

- Feature walkthroughs

- Troubleshooting guides

- FAQ video responses

AI talking avatars make comprehensive video knowledge bases economically viable, improving customer satisfaction while reducing support costs.

Choosing the Right AI Talking Avatar

The avatar you select instantly signals the tone of your content. Strategic selection matters.

Matching Avatar to Content Context

Formal Corporate Content:

- Professional business attire (suit, dress shirt)

- Mature, authoritative appearance

- Neutral, composed expressions

- Clear, articulate delivery

Best for: Compliance training, executive communications, formal announcements

Training and Educational Content:

- Smart casual attire

- Approachable, friendly demeanor

- Warm, encouraging expressions

- Conversational delivery style

Best for: Skills training, onboarding, how-to content

Marketing and Customer-Facing:

- Style matching brand personality (could be formal or casual)

- Energetic, engaging presence

- Expressions reflecting brand values

- Voice resonating with target demographic

Best for: Product videos, social content, promotional materials

Diversity and Representation

Professional platforms offer avatars that reflect a range of:

- Ages: Young professionals to experienced experts

- Ethnicities: Representative of global audiences

- Gender presentations: Various gender identities and expressions

- Professional contexts: Different industries and settings

Colossyan provides 70+ professional avatars with broad diversity, far more choice than basic platforms built around generic, one-size-fits-all presenters.

Consistency Within Content Series

For multi-video projects, use the same avatar throughout:

- Builds familiarity with learners or viewers

- Creates professional, cohesive experience

- Strengthens brand association

Custom Avatar Options

For unique brand presence, consider custom avatar creation:

Digital twins of team members:

- Capture likeness of actual executives or subject matter experts

- Scale their presence without requiring their ongoing time

- Maintain personal credibility while adding operational flexibility

Unique branded avatars:

- Custom-designed avatars representing your brand specifically

- Exclusive to your organization

- Can embody specific brand characteristics

Custom avatars typically cost $5,000-15,000, but they deliver a permanent asset that supports unlimited content creation.

Creating Professional AI Talking Avatar Videos

Effective AI talking avatar videos follow a deliberate workflow from script to distribution.

Step 1: Craft Effective Scripts

Quality avatars delivering poor scripts still produce poor content. Script quality is paramount.

Write for spoken delivery:

- Short sentences (15-20 words maximum)

- Conversational tone (contractions, direct address)

- Active voice (creates energy and clarity)

- Clear transitions between ideas

Structure for engagement:

- Strong hook (capture attention in the first 10 seconds)

- Logical information progression

- Clear value proposition throughout

- Specific call-to-action

Optimize for AI delivery:

- Avoid complex words AI might mispronounce

- Use punctuation to guide natural pacing

- Spell out acronyms on first use

- Test pronunciation of technical terms
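Two of the Step 1 rules, short sentences and spelled-out acronyms, are mechanical enough to check before you generate a video. The sketch below is one way to do that, not a Colossyan feature: `lint_script` and `split_sentences` are hypothetical helpers, the sentence splitter is deliberately naive, and it assumes an acronym counts as "spelled out" when its first appearance is in parentheses, as in "learning management system (LMS)".

```python
import re

MAX_WORDS = 20  # upper bound from the "15-20 words maximum" guideline


def split_sentences(script: str) -> list[str]:
    # Naive split on ., ! or ? followed by whitespace; fine for a draft check.
    parts = re.split(r"(?<=[.!?])\s+", script.strip())
    return [p for p in parts if p]


def lint_script(script: str) -> list[str]:
    """Return human-readable warnings for a narration script (hypothetical helper)."""
    warnings = []

    # Rule 1: flag sentences longer than MAX_WORDS words.
    for i, sentence in enumerate(split_sentences(script), start=1):
        word_count = len(sentence.split())
        if word_count > MAX_WORDS:
            warnings.append(f"Sentence {i} has {word_count} words: consider splitting it.")

    # Rule 2: flag acronyms (2+ consecutive capitals) whose first appearance
    # is not wrapped in parentheses after the spelled-out form.
    seen = set()
    for match in re.finditer(r"\(?\b[A-Z]{2,}\b\)?", script):
        token = match.group(0)
        acronym = token.strip("()")
        if acronym in seen:
            continue  # only the first use matters
        seen.add(acronym)
        if not (token.startswith("(") and token.endswith(")")):
            warnings.append(f"Acronym {acronym} is not spelled out on first use.")

    return warnings


script = (
    "Welcome to the course. Our learning management system (LMS) tracks progress. "
    "Export SCORM packages from the LMS."
)
for warning in lint_script(script):
    print(warning)
```

On the sample script, the checker reports SCORM, which is used without being spelled out first; LMS passes because its first use follows the full name. A check like this won't catch words the voice engine mispronounces, so listening to a test render is still necessary.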

Step 2: Select Avatar and Voice

Platform selection:

For professional business content, use premium platforms such as Colossyan that offer:

- High-quality avatar libraries

- Natural voice options

- Integrated workflow features

- Brand customization tools

Avatar selection:

- Match to target audience demographics

- Align with content formality level

- Consider brand personality

- Test multiple options to find best fit

Voice selection:

- Match voice to avatar (appropriate gender, approximate age)

- Choose accent for target audience (US, UK, Australian English, etc.)

- Adjust pacing for content type (slower for technical, normal for general)

- Select tone matching purpose (authoritative, warm, energetic)

Step 3: Enhance with Supporting Visuals

Avatar-only videos can feel monotonous. Strategic visual variety maintains engagement.

Supporting visual types:

- Screen recordings: Show software or processes being explained

- Slides and graphics: Display data, frameworks, key points

- Product images: Showcase items being discussed

- B-roll footage: Add contextual visuals

Aim for a visual change every 10-15 seconds to hold attention, with the avatar serving as the guide that ties the elements together.
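To apply the 10-15 second guideline to a storyboard, you can list each segment's start and end time and flag any that hold one visual too long. This is a minimal sketch under stated assumptions: `Segment`, `long_segments`, and `suggested_change_count` are hypothetical names, and the 12-second default is just a midpoint within the guideline.

```python
import math
from dataclasses import dataclass

MAX_STATIC_SECONDS = 15  # upper end of the 10-15 second guideline


@dataclass
class Segment:
    label: str      # e.g. "avatar intro", "screen recording"
    start_s: float  # segment start, in seconds
    end_s: float    # segment end, in seconds


def long_segments(segments: list[Segment], max_s: float = MAX_STATIC_SECONDS) -> list[str]:
    """Return labels of segments that show one visual for longer than max_s seconds."""
    return [s.label for s in segments if s.end_s - s.start_s > max_s]


def suggested_change_count(duration_s: float, interval_s: float = 12.0) -> int:
    """Rough number of visual changes needed so no stretch exceeds interval_s seconds."""
    if not 10 <= interval_s <= 15:
        raise ValueError("interval_s should stay within the 10-15 second guideline")
    # ceil(duration / interval) segments are needed; changes sit between them.
    return max(0, math.ceil(duration_s / interval_s) - 1)


storyboard = [
    Segment("avatar intro", 0, 12),
    Segment("screen recording", 12, 40),
    Segment("avatar recap", 40, 50),
]
print(long_segments(storyboard))     # segments to split or overlay with a graphic
print(suggested_change_count(120))   # changes for a two-minute video
```

Here the 28-second screen recording gets flagged; adding a callout or cutting back to the avatar partway through would bring it within the guideline.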

Step 4: Add Interactive Elements (Training Content)

Transform passive videos into active learning experiences:

- Embedded quizzes: Knowledge checks at key moments

- Branching scenarios: Choices determine content path

- Clickable hotspots: Additional information on demand

Colossyan supports these interactive elements natively, creating sophisticated learning without separate authoring tools.

Step 5: Review and Refine

Quality assurance before publishing:

- Watch complete video at full speed

- Verify pronunciation of all terms and names

- Confirm visual timing and synchronization

- Test on target devices (mobile if primary viewing context)

- Ensure brand consistency (logos, colors, fonts)

A 15-20 minute review like this catches errors before they reach your audience and keeps output professional.

Platform Comparison for AI Talking Avatars

Compare platforms against your own requirements before committing.

Strategic recommendation: Evaluate based on primary use case, required volume, and feature needs. For most business applications, Colossyan's combination of quality, features, and workflow integration delivers optimal value.

Best Practices for Professional Results

Script Quality Drives Everything

Your AI talking avatar is only as effective as your script:

- Invest time in script development

- Read aloud before generating video

- Get feedback from target audience representatives

- Iterate based on performance data

Don't Over-Rely on the Talking Head

The most engaging avatar videos blend the presenter with supporting visuals:

- Integrate screen recordings, slides, graphics

- Change visual elements regularly

- Use avatar as connecting narrative thread

Maintain Brand Consistency

Ensure avatar videos feel authentically on-brand:

- Use consistent avatars across content series

- Apply brand kits (colors, fonts, logos) automatically

- Develop distinct visual style

- Maintain consistent voice and tone in scripts

Optimize for Platform

Different distribution channels have different optimal characteristics:

- LinkedIn: 2-5 minutes, professional, business-focused

- Instagram/TikTok: 30-90 seconds, visual, fast-paced

- YouTube: 5-15 minutes, detailed, comprehensive

- LMS: Any length appropriate for learning objectives
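If you publish one source video to several channels, the length guidelines above can be encoded as a simple lookup. A minimal sketch, assuming the ranges are treated as inclusive and converted to seconds; `LENGTH_GUIDELINES` and `fits_platform` are hypothetical names, and these are editorial rules of thumb from this list, not limits enforced by the platforms.

```python
# Length guidelines from the list above, in seconds (inclusive).
# None means no fixed range: LMS length follows the learning objectives.
LENGTH_GUIDELINES = {
    "linkedin": (120, 300),   # 2-5 minutes
    "instagram": (30, 90),    # 30-90 seconds
    "tiktok": (30, 90),       # 30-90 seconds
    "youtube": (300, 900),    # 5-15 minutes
    "lms": None,
}


def fits_platform(platform: str, duration_s: float) -> bool:
    """Check a video length against the rule-of-thumb range for a channel."""
    key = platform.lower()
    if key not in LENGTH_GUIDELINES:
        raise KeyError(f"No guideline for platform: {platform}")
    bounds = LENGTH_GUIDELINES[key]
    if bounds is None:
        return True  # no fixed range for LMS content
    low, high = bounds
    return low <= duration_s <= high


for channel in ("LinkedIn", "TikTok", "YouTube", "LMS"):
    print(channel, fits_platform(channel, 180))
```

A three-minute cut fits LinkedIn and an LMS but is too long for TikTok and too short for the YouTube range, which suggests producing a short teaser and a longer walkthrough from the same script.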

Disclose AI Usage Appropriately

Transparency builds trust:

- Note in description that video uses AI avatars

- For customer-facing content, brief disclosure is good practice

- For internal training, disclosure may be less critical but still recommended

Frequently Asked Questions

Do AI Talking Avatars Look Realistic?

Modern AI talking avatars from professional platforms are remarkably realistic, with natural movements, appropriate expressions, and photorealistic rendering. Most viewers recognize they're digital but find them professional and acceptable.

The goal isn't deception; it's professional content delivery. High-quality platforms like Colossyan produce avatars suitable for any business use.

Can I Create an Avatar That Looks Like Me?

Yes. Custom avatar services create digital twins of real people. The process typically involves:

1. Recording session from multiple angles

2. AI processing to create digital replica

3. Testing and refinement

4. Final avatar available for unlimited use

Typical investment: $5,000-15,000. The return: your presence scales without an ongoing time commitment.

How Much Do AI Talking Avatar Platforms Cost?

Pricing varies:

- Free trials: Test platforms before commitment

- Professional plans: $100-300/month for individuals/small teams

- Enterprise plans: $500-2,000+/month for unlimited production, teams, custom features

Most organizations find that mid-tier plans deliver positive ROI within the first month compared with traditional production costs.

Can Avatars Speak Multiple Languages?

Yes, and this is a key advantage. Platforms like Colossyan support 80+ languages, letting you:

- Create multilingual versions with appropriate voices and accents

- Use same avatar speaking different languages (lip-sync adapts automatically)

- Build global content libraries with consistent presenter

This transforms localization economics for multinational organizations.

Ready to Deploy Professional AI Talking Avatars?

You now understand how AI talking avatars work, where they deliver maximum value, and how to implement them strategically. The right approach depends on your content type, volume requirements, and whether video is a strategic priority.

Colossyan Creator offers the most comprehensive solution for business AI talking avatars, with 70+ professional avatars, 600+ natural voices across 80+ languages, custom avatar creation services, and complete workflow integration. For organizations serious about scaling video content production, it delivers ROI that standalone or basic tools simply can't match.

The best way to understand the transformation is to create actual business content with AI talking avatars and experience the speed, quality, and flexibility firsthand.

Ready to see what AI talking avatars can do for your organization? Start your free trial with Colossyan and create professional avatar videos in minutes, not days.

How to Choose the Best LMS for Employee Training: A Complete Guide

Why the right LMS matters in 2025

Choice overload is real.

The market now lists 1,013+ employee-training LMS options, and many look similar on the surface.

Still, the decision affects core business results, not just course delivery.

Training works when it’s planned and measured. 90% of HR managers say training boosts productivity, 86% say it improves retention, and 85% link it to company growth.

People want it too: 75% of employees are eager to join training that prepares them for future challenges.

Integration also matters. One organization saw a 35% sales increase and a 20% reduction in admin costs by integrating its LMS with its CRM. That’s not about features for their own sake. That’s about connecting learning with daily work.

And content quality is the multiplier. I work at Colossyan, so I see this every day: strong video beats long PDFs. I turn SOPs and policies into short, on-brand videos with Doc2Video, add quick knowledge checks, then export SCORM so the LMS tracks completions and scores.

This combination moves completion rates up without adding admin burden.

What an LMS is (and isn’t) today

An LMS is a system for managing training at scale: enrollments, paths, certifications, reporting, compliance, and integrations. In 2025, that means skills tracking, AI recommendations, stronger analytics, and clean integrations with HRIS, CRM, and identity tools.

Real examples show the shift. Docebo supports 3,800+ companies with AI-driven personalization and access to 75,000+ courses.

It’s worth saying what an LMS isn’t: it’s not a content creator. You still need a way to build engaging materials. That’s where I use Colossyan. I create interactive video modules with quizzes and branching, export SCORM 1.2 or 2004, and push to any LMS. For audits, I export analytics CSVs (plays, watch time, scores) to pair with LMS reports.

Must-have LMS features and 2025 trends

- Role-based access and permissions. Rigid, linear workflows drive disengagement. A community post about Leapsome at a 300–500 employee company described missing role differentiation, rigid flows, and admin access issues, which shows why role-based access and notification controls matter.

- Notification controls. Throttle, suppress, and target alerts. Uncontrolled notifications will train people to ignore the system.

- AI personalization and skills paths. 92% of employees say well-planned training improves engagement. Good recommendations help learners see value fast.

- Robust analytics and compliance. Track completions, scores, attempts, due dates, and recertification cycles. Export to CSV.