Blog

7 Best Video Editors With Built-In Voice-Over Features

Many creators and teams want to add voice-overs to their videos without piecing together three or four apps. Whether you need your own narration, AI-generated voices, or even voice clones for consistent branding, your editor should let you work all in one place. This guide looks at seven video editors that give you those built-in voice-over features - without extra complexity or lots of manual syncing.

What matters in a voice-over video editor

For this list, I looked for tools that make the process simple. The best editors let you record or generate voices, add auto-subtitles for accessibility, handle multiple languages when needed, and give you strong audio controls like volume, speed, pitch, and fades. Some go further with AI-powered dubbing, brand voice cloning, advanced analytics, or direct SCORM export for training teams.

I’ve picked options for all skill levels - beginners on mobile or desktop, agencies, marketing, and especially organizations modernizing their learning videos.

Here’s what the best editors offer:

- Record or create AI/text-to-speech voices right in the app

- Auto-generated, accurate subtitles and easy caption exports

- Tools for translating or localizing content to more than one language

- Audio controls for fine-tuning the result

- Simpler workflows: templates, script-to-VO syncing, stock media

- Collaboration features for teams, analytics where needed

- Cross-platform flexibility (web, desktop, mobile)

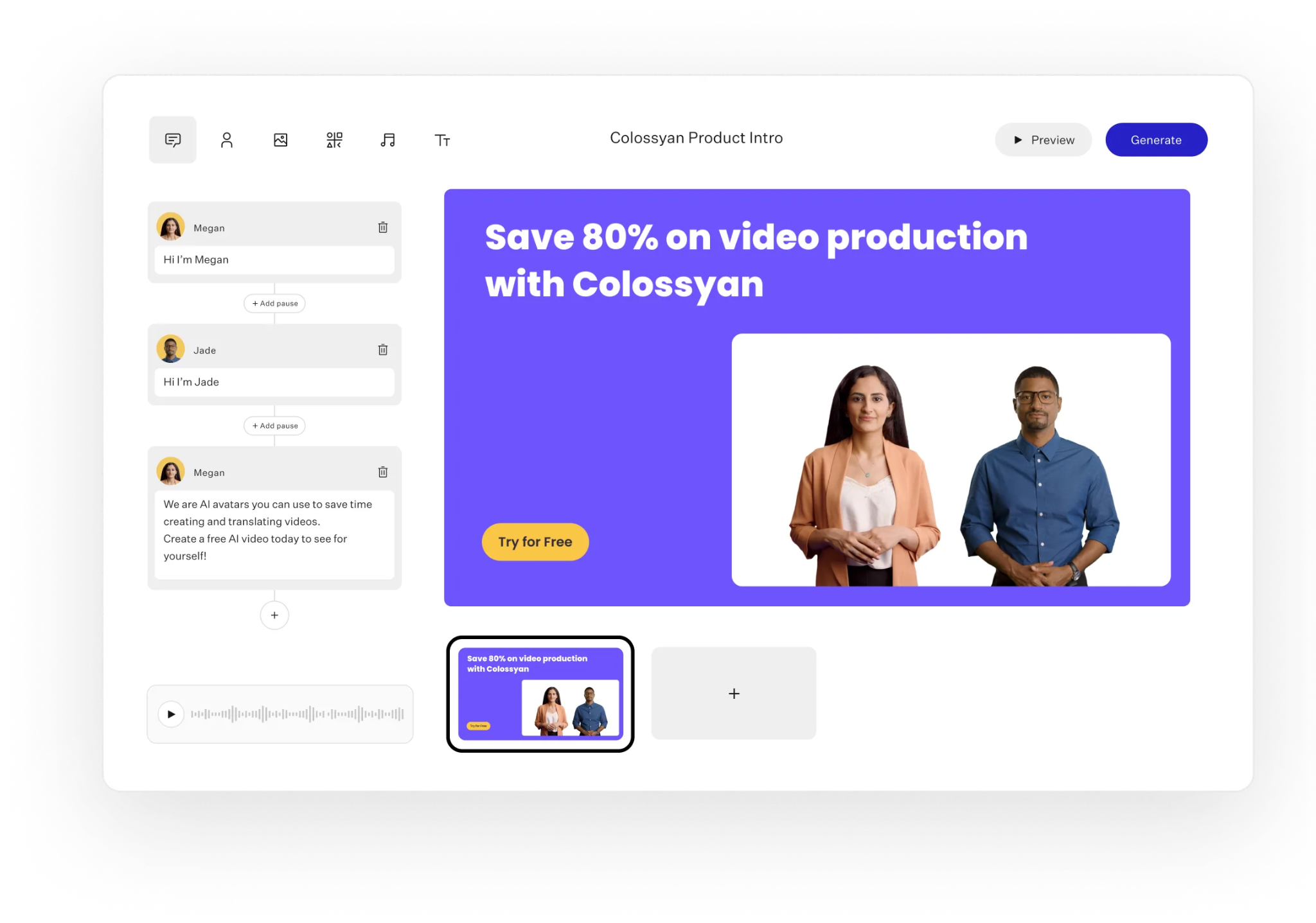

1) Colossyan - best for training teams who need scalable voice-over, localization, and analytics

If you need to build voice-over videos for training or learning at scale, you’ll run into problems most editors can’t handle: consistent brand pronunciation, instant translation, easy voice cloning, direct SCORM export, and analytics that measure real learning. This is where I think Colossyan stands out.

You don’t need to record your own narration. With Colossyan, you select from multilingual AI voices or even clone your own for consistency. Pronunciations for tricky names or acronyms are saved and instantly apply each time you reference them in a script.

If you’re localizing, you can use the Instant Translation feature to switch an entire video - voice, on-screen text, and all interactions - to a new language and export separate drafts for each version - a step beyond simple TTS dubbing. Timing stays in sync; you only adjust visual layout if the new language changes text length.

You can import documents, PowerPoints, or PDFs and have them auto-converted to scenes, with speaker notes turning into voice-over script instantly, which is much quicker than manual workflows found in most editors. Add pauses and script block previews to get natural delivery.

For interactivity, you can insert quizzes and branching dialogue into the video itself, set pass marks, and export as SCORM 1.2/2004 for use in any standard LMS. Real analytics track who is watching, for how long, and which questions they answer correctly.

You can also export audio-only narration or closed captions separately if you need those for compliance or accessibility.

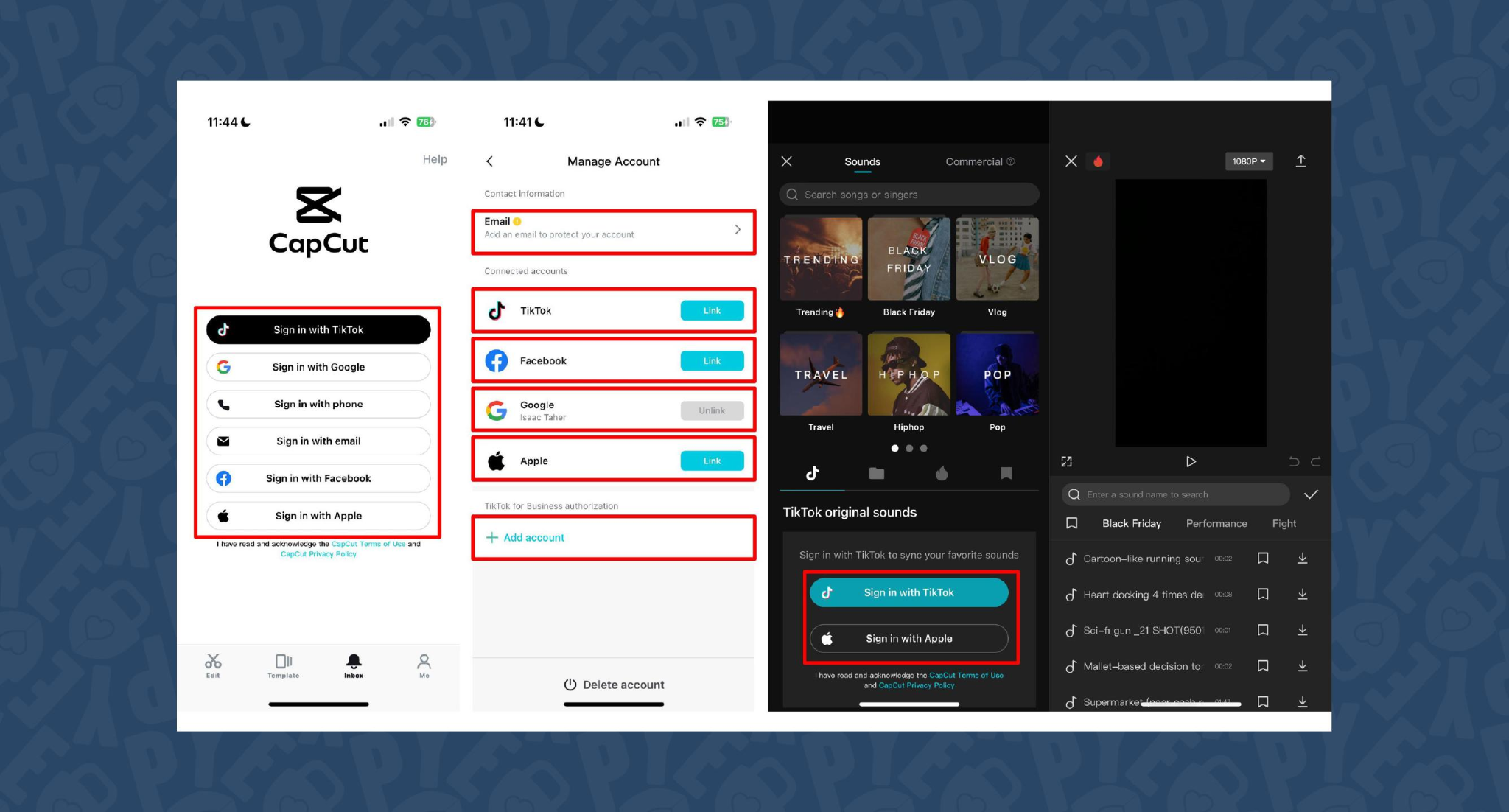

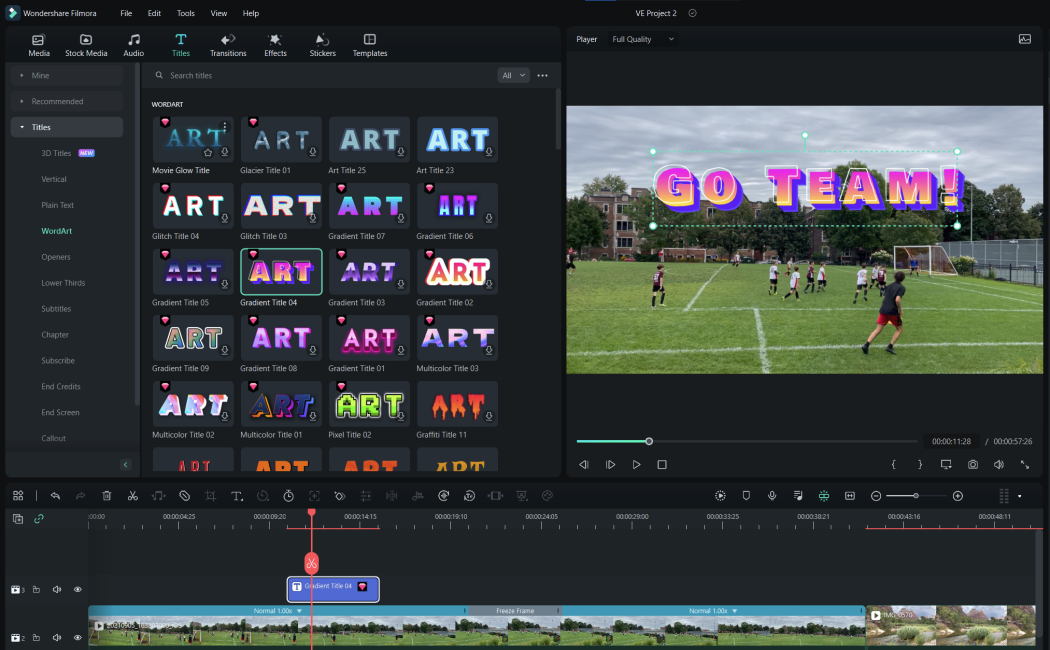

2) CapCut - best free pick with flexible recording, AI voices, and auto-subtitles

CapCut is popular because the basics are unlimited and easy. You can record voice-overs online, with no time limits, or use built-in AI for text-to-speech. It auto-generates subtitles even if the speaker’s not on screen. The editing controls let you adjust pitch, speed, volume, fades, and more, and you can mix several audio tracks. For global reach, you can use built-in AI dubbing to generate multi-language versions of your VO.

On mobile, the recording flow is in-app for iPhone (Sound > Microphone); on desktop or web, you script, record, add subtitles, edit, and export - all in one. This feels more like a professional tool than most free options.

You can use CapCut to clarify complex videos with on-screen captions, localize tutorials for other markets, or keep a consistent voice tone for social media videos.

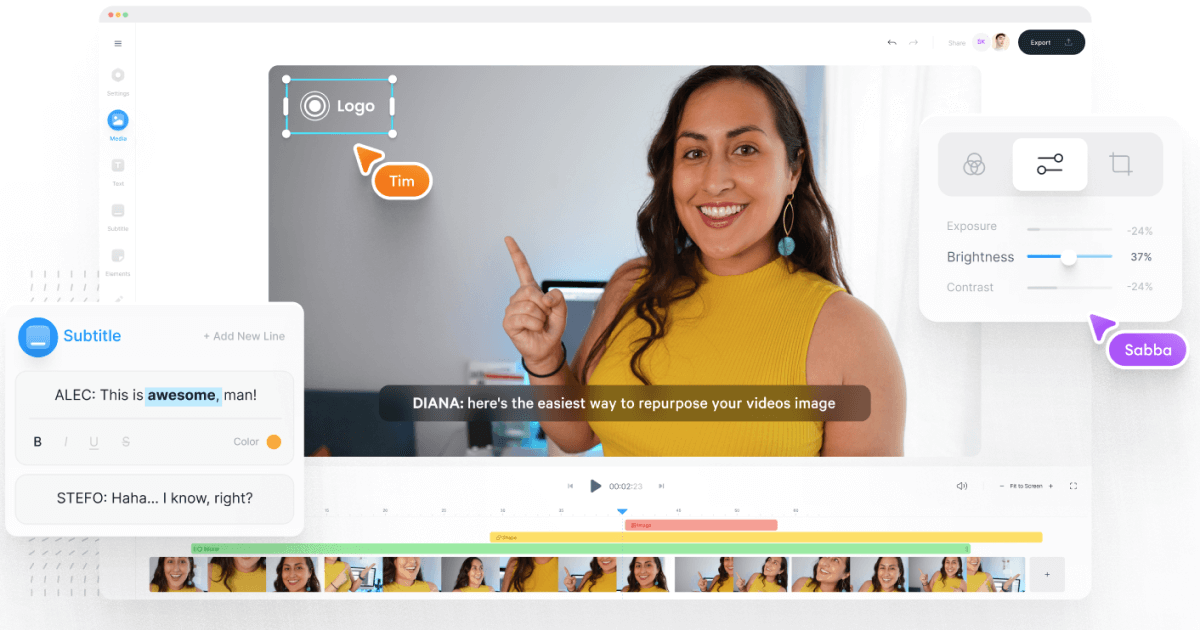

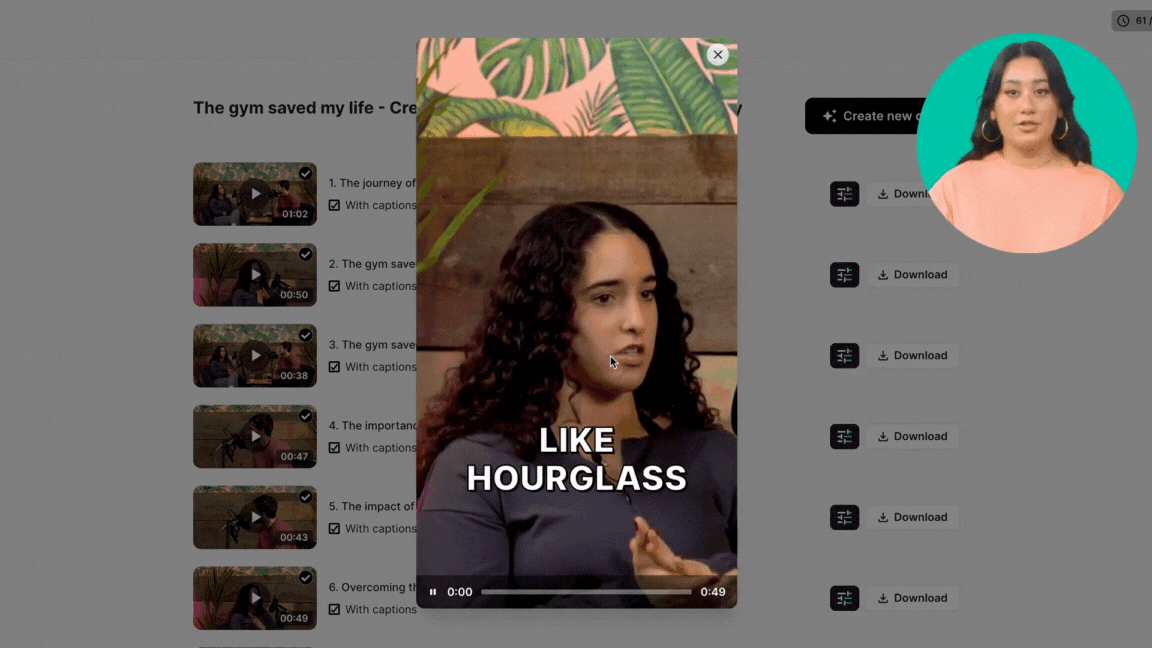

3) VEED - best for replacing multiple tools (recording, captions, storage, sharing)

VEED has built a reputation as an all-in-one workflow. Instead of bouncing between Loom, Rev, Google Drive, and YouTube, you get everything in one place: recording, AI text-to-speech, one-click subtitle generation, and automatic audio cleanup.

User reviews are strong (4.6/5, with about a 60% reduction in editing timeaccording to one testimonial). It’s aimed at teams who need consolidated workflows and secure sharing. You edit, subtitle, and publish in one tool - no more file shuffling or switching between apps.

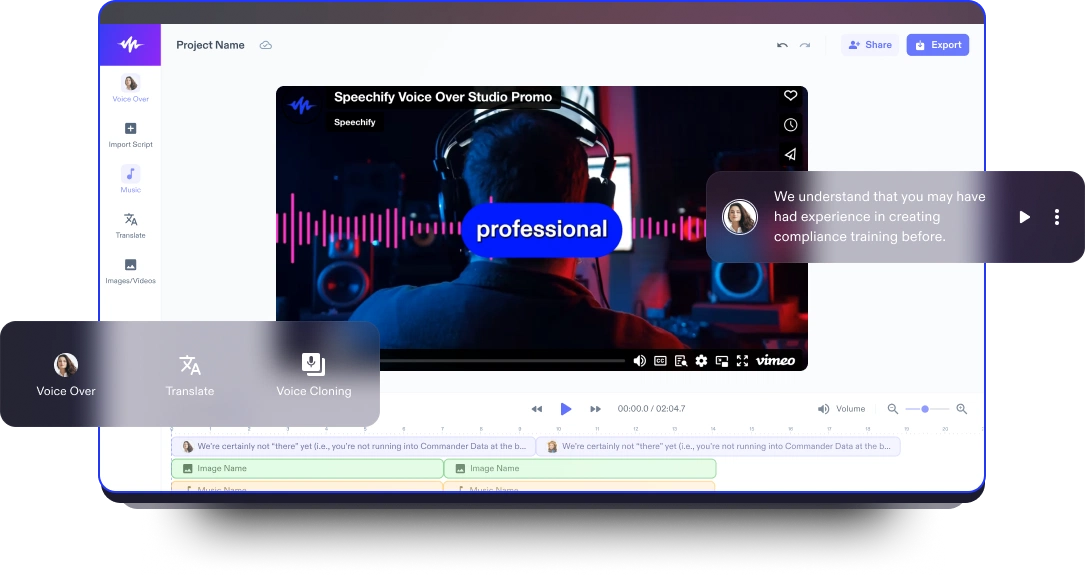

4) Speechify Studio - best for fast AI dubbing with a large voice library

Speechify Studio focuses on AI voice versatility. You get over 200 lifelike voices in multiple accents and languages, perfect for instant dubbing or easy localization. One click dubs into new languages and generates synchronized subtitles. The editor is drag-and-drop, with templates and a vast library of royalty-free music and video assets.

Everything happens in the browser, working across platforms. You can upload your own VO or just use the AI, mix in background tracks, and export in multiple sizes (for YouTube, Instagram, etc.). For YouTube, social teasers, or education, this is one of the fastest ways to get multi-language narration without hiring VO talent.

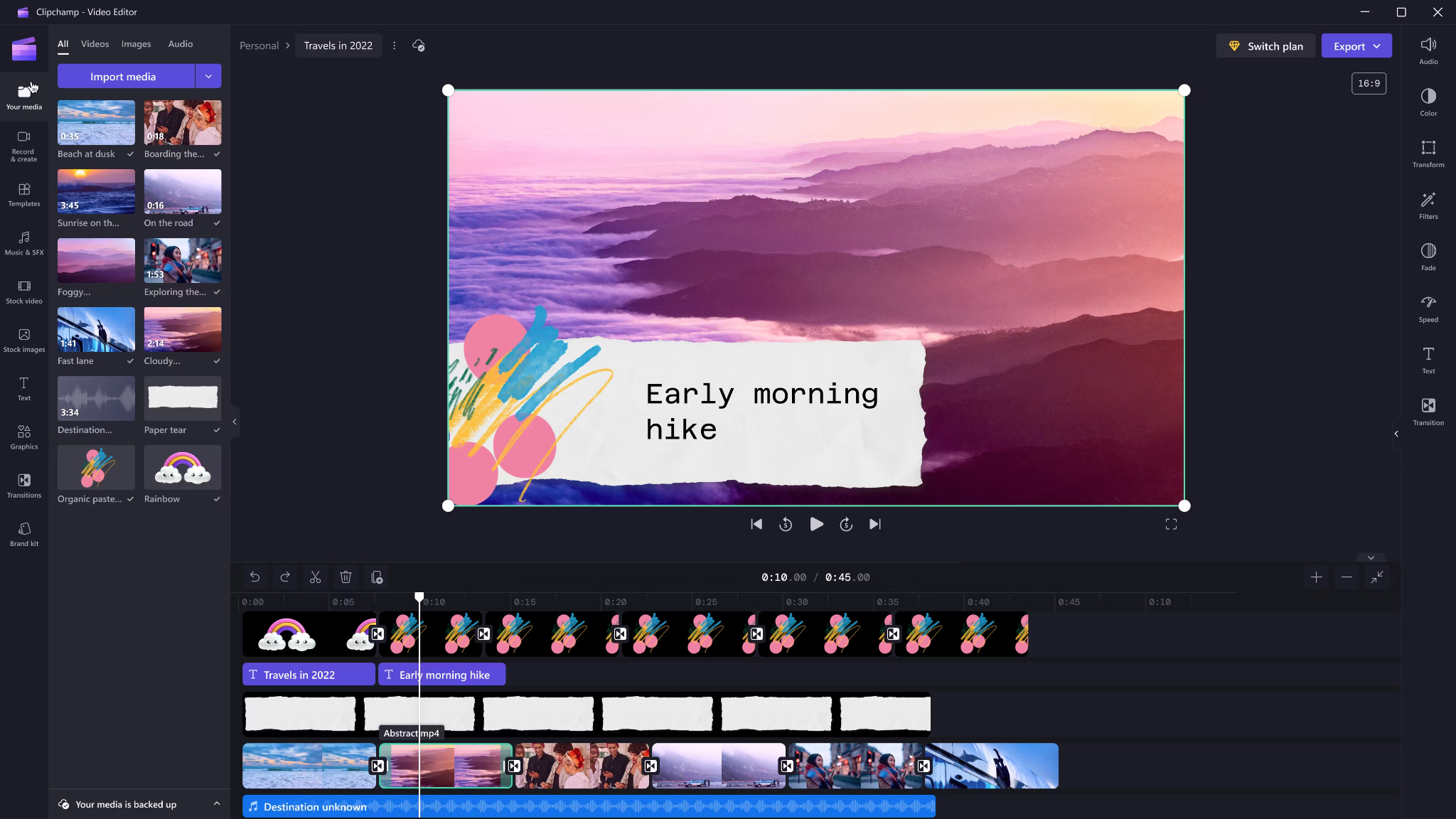

5) ClipChamp - best free TTS variety and easy script control

Clipchamp shines with variety and ease for AI voice-overs. It includes 400 AI voices (male, female, neutral) in 80 languages. You can tweak pitch, emotion, speed (0.5x to 2x) and control pauses/emphasis directly in your script by adding ellipses (“...”) or exclamation marks. If voices mispronounce a word, type it out phonetically.

Output options include transcript exports, subtitles, or just the audio as MP3. Every export is free and unlimited, and user reviews are high (4.8/5 from 9.5k reviews). This is a quick route for social videos, simple explainers, or business presentations.

6) Powtoon - best for animated explainers with built-in VO recording

Powtoon’s big advantage is simplicity for animated videos. It has built-in voice-over recording, lots of customizable templates, and a royalty-free music library. Major brands use it for onboarding and explainers, and it claims tens of millions of users.

You can record your narration directly in the editor, layer music, set up a branded look, and publish to social or business platforms straight from Powtoon. This works well if you want animations with matching narration, but don’t want to learn complex motion tools.

7) Wave.video - best for quick client-ready edits and layered audio tracks

Wave.video is designed for speed and easy audio layering. You can record or upload up to three audio tracks (voice, music, sound effects), then trim and sync each on a clear timeline. Automated captions let your video communicate even if played on mute. Users report getting client-ready videos in as little as 20–30 minutes, thanks to the streamlined process.

This tool fits agencies and freelancers who need regular, clear voice-over videos with quick turnarounds.

Honorable mentions and caveats

There’s also the Voice Over Video app for iOS/iPadOS. It handles multiple VO tracks and edits, and offers a cheap lifetime unlock. However, some users find problems with longer videos: slow playback, export glitches, or audio muting bugs. It’s okay for short clips - test it before using for multi-segment training pieces.

How Colossyan maps to the needs above

I’ll be clear - most editors focus on easy voice-over for marketing or social video. Colossyan stretches further for learning and enterprise.

If you need multilingual versions, Instant Translation creates new language drafts for the whole video (script, on-screen text, interactions), maintaining layout. You can use cloned voices for brand consistency, and our Pronunciations library does what some other editors only do for one-off cases: you save pronunciation settings for product names or technical terms, and every video stays correct.

Pauses, animation markers, and script editing give you fine control over delivery and pacing - a real edge if you want natural, accurate speech. And while Clipchamp lets you control delivery with punctuation, Colossyan lets you sync these to avatar gestures and on-screen animations for even more realism.

Large teams can import docs or PowerPoints and transform them into scenes with the narration built in - saves hours compared to manual scripting. Collaboration, brand kits, and workspace organization mean even non-designers or new team members can keep everything consistent.

We support interactive learning: quizzes and branching, tracked by analytics. SCORM export means your videos fit into any LMS or training system, and our analytics track real results (scores, time watched, drop-off points). For organizations, this is a serious step up from just generating a narrated video.

Example: For compliance learning, import a policy PDF, assign a voice clone, adjust pronunciations for terms like “HIPAA,” add quizzes, export SCORM, and get analytics on where learners struggle - a complete feedback loop.

For a multilingual product rollout, create an English master, translate to Spanish and Japanese, assign native accents or avatars, export closed captions and audio-only versions for other channels, and keep all assets in sync with your brand.

For scenario-based training, use Conversation Mode to create role-play videos with branching. Learners pick responses, and you measure the impact with analytics - something most consumer editors can’t do.

The right editor for your scenario

If you’re a beginner content creator or need simple, free TTS and subtitles, CapCut or Clipchamp is enough.

Marketing teams might prefer VEED to replace multiple tools and simplify sharing, or Powtoon if you need stylish explainers fast.

If you want fast, multi-language dubbing, Speechify Studio or Clipchamp do it, but for real global training with instant translation, on-screen adaptation, and analytics, Colossyan is the stronger choice.

For building e-learning, SCORM packages, compliance training, or videos for enterprise where accuracy and engagement matter, I’d pick Colossyan every time.

Want more guidance on scripting voice-overs, localizing training, or making sure your AI voice matches your brand? Book a demo with our team and we will run through the best ways you can.

How To Make Software Training Videos: A Step-By-Step Guide

To make effective software training videos: 1) Define learner outcomes and KPIs, 2) Break workflows into bite-sized tasks, 3) Script in a conversational, step-by-step format, 4) Choose a format like screencast, avatar, or hybrid, 5) Produce with clear branding, captions, and on-screen prompts, 6) Add interactivity like quizzes and branching, track results with SCORM, 7) Localize for key languages, 8) Publish to your LMS or knowledge base, 9) Analyze watch time and pass rates, 10) Update based on analytics.

Why software training videos matter now

A lot of employees say they need better training materials. Over half - 55% - report they need more training to do their jobs well source. And people actually look for video training: 91% have watched an explainer video to learn something in 2024 source. There’s good reason for this - e-learning videos can boost retention rates by up to 82% compared to traditional methods.

This isn’t just about feels or fads. U.S. companies spend about $1,286 per learner per year on training. That’s a big investment - so it should work. Some real examples back this up: Microsoft cut its learning and development costs by about 95% (from $320 to $17 per employee) when it launched an internal video portal. Zoom cut its video creation time by 90% after moving to AI-powered video production. Berlitz made 1,700 microlearning videos in six weeks, producing faster and cutting costs by two-thirds.

The lesson: shorter, purpose-built videos not only lower costs but actually help people learn more and stay with the company.

Pick the right training video format for software workflows

Not every video needs to look the same. Choosing the best format helps learners get what they need, faster.

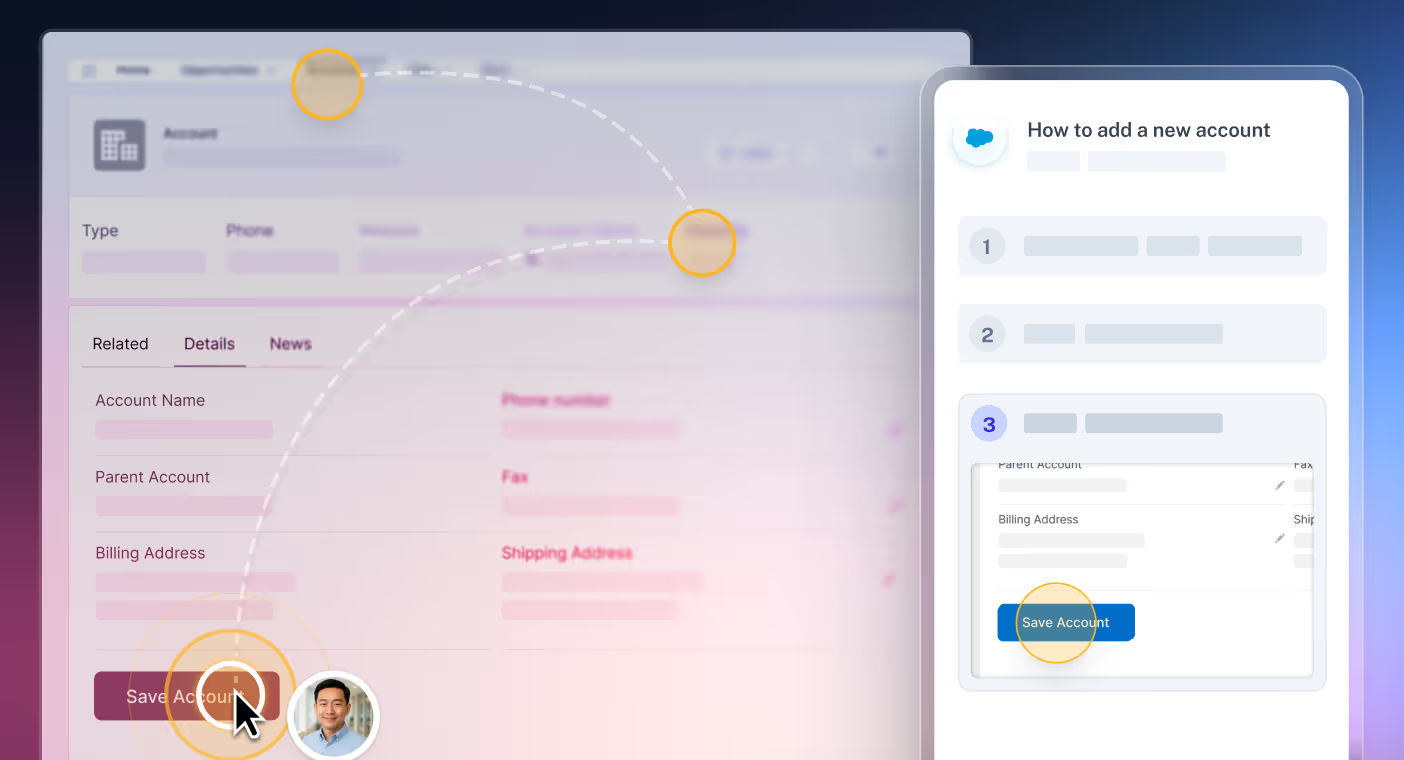

Screencasts are great for point-and-click steps, UI changes, or any kind of hands-on walk-through. If you’re explaining a new feature or daily workflow, a screencast with clear voice-over covers it.

AI avatar or talking-head formats add a personal touch. Use these when you need to explain why a change matters, show empathy, discuss policy, or onboard new users.

Hybrid approaches are gaining ground: start with an avatar giving context, then cut to a screencast for hands-on steps - so learners get clarity plus a human connection.

Don’t forget interactive training videos. Adding quick quizzes or branching scenarios creates active learning and gives you feedback on who actually understood the lesson.

Keep most topics to 2–7 minutes. Under 5 minutes tends to work best for engagement. Microlearning for single tasks works well at 60–90 seconds. Change scenes every 10–20 seconds and keep intros short (about 10 seconds). Always use captions.

Step-by-step: how to make software training videos efficiently

Step 1: define outcomes and KPIs

Decide what the learner should be able to do. For example: “Submit a bug ticket,” “Configure SSO,” or “Export a sales report.” KPIs might be quiz pass rate, average time to completion, watch time, or rate of errors after training.

If you use Colossyan, you can set up projects in organized folders for each workflow and use built-in analytics to track quiz scores and viewing time - especially useful if you want SCORM compliance.

Step 2: break the software workflow into micro-tasks

Split every workflow into the smallest possible tasks. This speeds up production and makes learning less overwhelming. For example, “Create a support ticket” is really several steps: open app, select project, fill summary, choose priority, submit.

With Colossyan, Templates help you scaffold these microlearning modules fast and keep things consistent, even if you don’t have a design background.

Step 3: gather your source content and SME notes

Scripts should always be based on company manuals, SOPs, or input from actual subject matter experts. Cut any fluff or redundant info.

Our Doc to video feature allows you to upload SOPs, PDFs, or even PowerPoint files; the platform then splits them into scenes, pulling out speaker notes and draft scripts.

Step 4: script a conversational, step-by-step narrative

Focus the language on step-by-step actions - don’t use confusing jargon. Keep each script to 1–3 learning objectives. Plan to include on-screen text for key steps and definitions, and change scenes quickly.

I usually rely on our AI Assistant to tighten up scripts, add Pauses for pacing, and set up Pronunciations so brand acronyms are said correctly.

Step 5: set brand and structure before recording

People trust materials that look consistent. Using Brand Kits in Colossyan, I apply the right fonts, colors, and logos across all video modules. I resize drafts to match the destination - 16:9 for LMS, 9:16 for mobile.

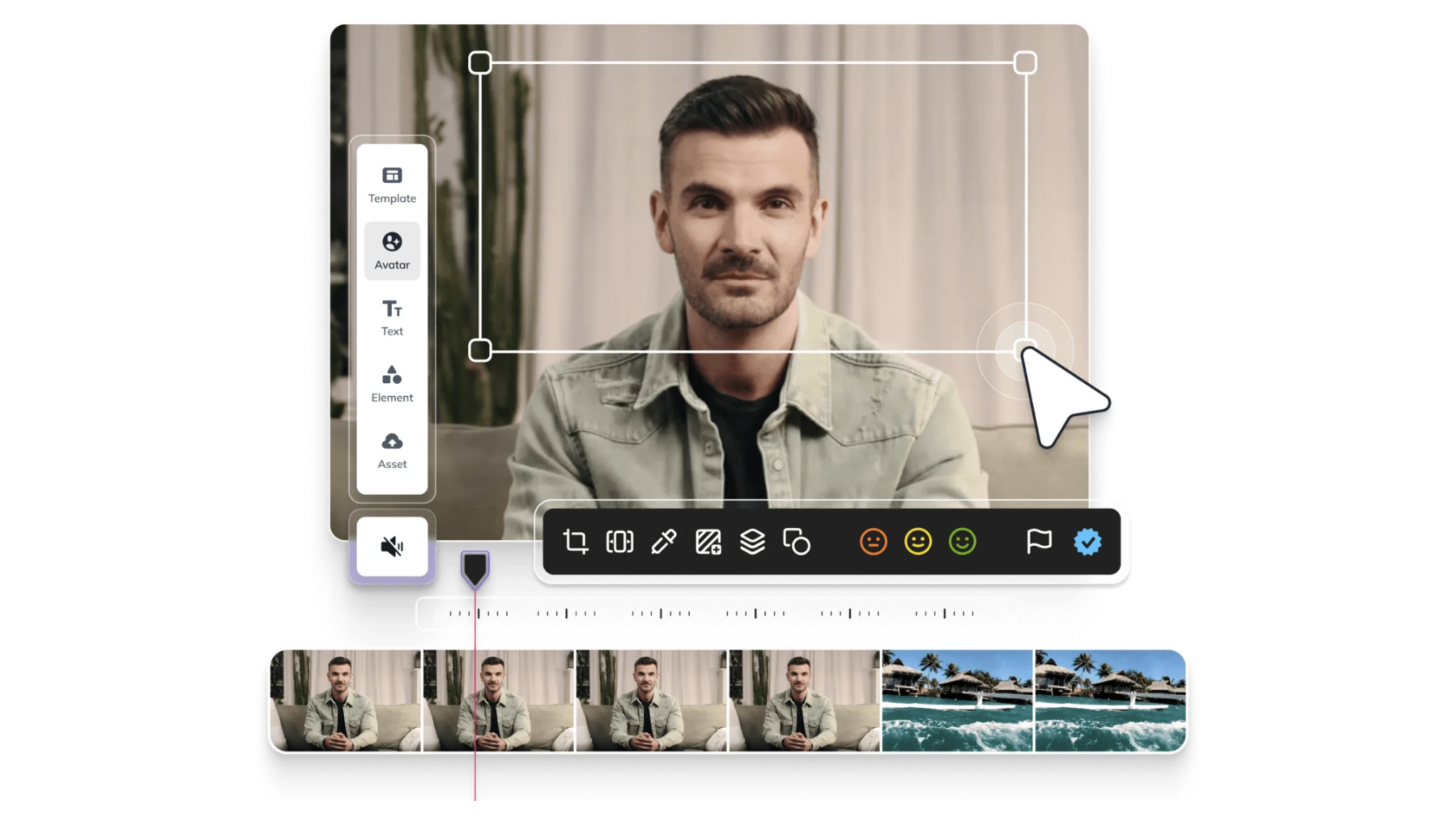

Step 6: produce visuals (screencast + presenter)

For actual workflows, I capture a screen recording to show the clicks and UI. Whenever possible, I add an avatar as presenter to introduce context or call out tricky steps.

In Colossyan, our Media tab supports quick screen recordings, and avatars (with custom or stock voices) let you give a consistent face/voice to the training. Conversation Mode is handy for simulating help desk chats or scenarios. Animation Markers and Shapes allow precise callouts and UI highlights.

Step 7: voice, clarity, and audio polish

Audio should be clean and clear, with no awkward pauses or filler. Colossyan has a Voices library or lets you Clone Your Voice so all videos sound consistently on-brand. You can tweak intonation and stability or download audio snippets for SME approval. A quiet music bed helps with focus, but keep it low.

Step 8: make it interactive to drive retention

Adding a quiz or decision branch makes the training stick. Interactive checks turn passive watching into active learning.

In Colossyan, you can insert Multiple Choice Questions, set branching paths (“what would you do next?”), and set pass marks that connect to SCORM tracking.

Step 9: accessibility and localization

Always include captions or transcripts - not everyone can listen, and localization helps scale training globally. Colossyan exports closed captions (SRT/VTT) and has Instant Translation to spin up language variants, matching voices and animation timing.

Step 10: review and approvals

Expect several rounds of feedback, especially in compliance-heavy orgs. You want time-stamped comments, version control, and clear roles.

Colossyan supports video commenting and workspace management - assign editor/reviewer roles to keep it structured.

Step 11: publish to LMS, portal, or knowledge base

When a module is ready, I export it as MP4 for wider compatibility or SCORM 1.2/2004 for the LMS, set up pass marks, and embed where needed. Our Analytics panel shows watch time and quiz results; you can export all this as CSV for reporting if needed.

Step 12: iterate with data

Check where people drop off or fail quizzes. Tweak scripts, visuals, or interaction. In Colossyan, you can compare video performance side by side and roll improvements out by updating Templates or Brand Kits for large programs.

Real-world patterns and examples you can use

For onboarding, I build microlearning tasks (about 60–90 seconds each): “Sign in,” “Create record,” or “Export report.” Typical structure: 8-second objective, 40-second demo, 10-second recap plus a quiz. I use Doc to video for scene drafts, add avatar intros, screen-record steps, set an 80% pass mark on the MCQ, export as SCORM, and track who completed what.

For a product rollout, the avatar explains why a new feature matters, then a screencast shows how to enable it, with branching for “Which plan are you on?” Colossyan’s Conversation Mode and Instant Translation help cover more teams with less work.

In a compliance-critical workflow (like masking PII), I use on-screen checklists, captions, and a final quiz. Shapes highlight sensitive areas. SCORM export keeps audits easy since pass/fail is tracked, and results can be exported as CSV.

How long should software training videos be?

Stick to 2–7 minutes per topic. Most people lose focus in anything longer than 20 minutes. Microlearning modules (about 60 seconds each) help people find and review single tasks fast.

Tool and budget considerations (what teams actually weigh)

Teams without heavy design skills want fast, simple tools. Expensive or complicated solutions are a nonstarter source. Platforms like Vyond are powerful but can cost more. Simple tools like Powtoon or Canva keep learning curves short.

With Colossyan, you don’t need editing or design background. Doc/PPT-to-video conversion and AI avatars keep things moving quickly - just fix the script and go. You get quizzes, SCORM export, analytics, captions, and instant translation all in one spot. Brand Kits and Templates mean everything stays consistent as the program grows.

Production checklist (ready-to-use)

Pre-production:

- Define audience, outcome, and KPIs.

- Choose format and length.

- Gather source SOPs and SME notes.

- Storyboard objectives and scenes.

- In Colossyan: Set up folder, apply Brand Kit, import doc/PPT.

Production:

- Record screens, add avatar.

- Polish scripts; add Pronunciations, Pauses, Markers.

- Add on-screen text, set up captions.

- In Colossyan: Add MCQ/Branching, music, and role-play if needed.

Post-production:

- Preview, edit pacing.

- Export captions, generate language versions.

- Collect stakeholder sign-off using comments.

- Export MP4/SCORM, upload to LMS, set pass mark.

- Review analytics, iterate.

Sample micro-script you can adapt (“create a ticket”)

Scene 1 (10 sec): Avatar intro: “In under a minute, you’ll learn to create a high-priority support ticket.”

Scene 2 (35 sec): Screencast steps, on-screen labels: “Click Create, add a clear summary, choose Priority: High. In Description, include steps to reproduce and screenshots.”

Scene 3 (10 sec): Recap + MCQ: “Which field determines escalation SLA?” Choices: Priority (correct), Reporter, Label.

Colossyan makes it easy to add Pauses, highlight fields, set quiz pass marks, captions, and export to SCORM for tracking.

Measuring success and iterating

Track watch time and where people drop off. Look at quiz pass rates - are people passing the first time or not? If possible, watch operational KPIs like error rates after training.

With Colossyan, you can review analytics by video and by learner. Export to CSV for reports, then update underperforming modules quickly using Templates.

If you’re looking to turn manuals and processes into clear, trackable, and brand-consistent training videos, it’s possible to do all of it in one platform - and you don’t need to be an expert. That’s how I build, localize, and measure software training programs at Colossyan.

How To Create Animated Videos From Text Using AI Tools

Introduction: From Text to Animation in Minutes

Turning text into animated video used to take days and a lot of design work. With text-to-animation AI, you can now enter a script, pick a style, and get a complete video - usually in minutes. This isn’t just about speed. You get consistent branding, easier localization, and it’s simple to scale training or marketing across regions and departments.

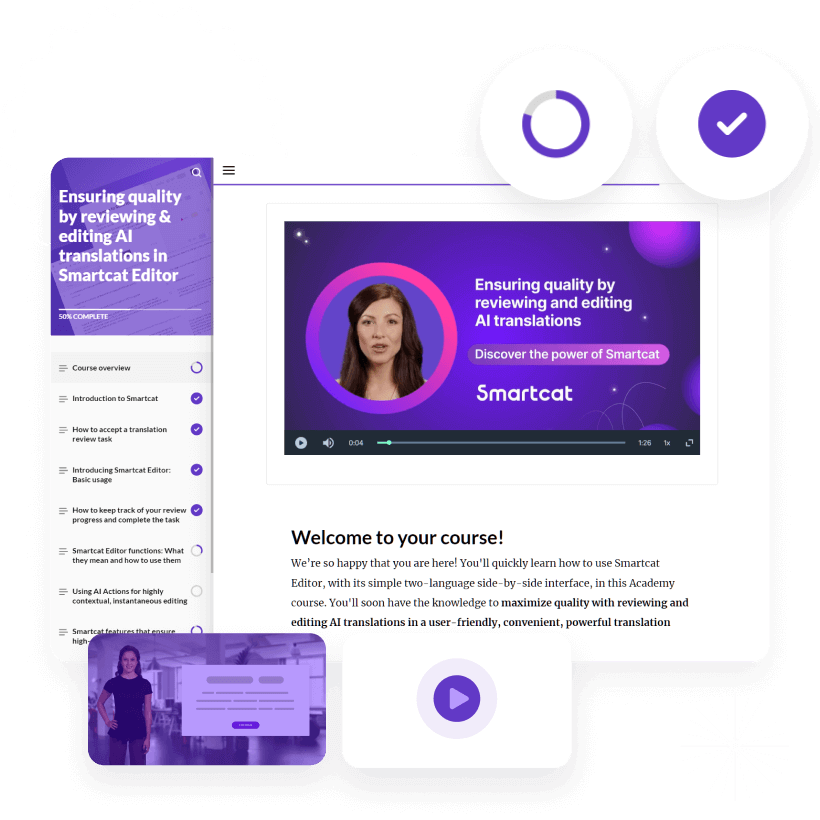

At Colossyan, we focus on L&D - helping teams quickly convert handbooks, docs, and presentations into interactive, branded, SCORM-compliant video training. Everything gets faster. You customize avatars and voices, keep everything on-brand, add quizzes, see analytics, and manage versions at scale. Here’s what I’ve found after comparing the field.

What Is Text-to-Animation AI?

Text-to-animation AI is software that builds animated scenes, visuals, motion, and AI voice narration straight from your script or document. Most tools ask for either a prompt (e.g., "Show animated coins falling into a piggy bank") or let you upload a file. They then auto-match visuals, voices, music, and subtitles. You can tweak the results without being a designer.

Common uses: training videos, explainers, tutorials, onboarding content, social videos, and ads. These aren’t just talking heads. Tools like Colossyan support different animation styles, avatars, voice cloning, captions, and language dubbing. Steve.ai reads your script, picks visuals by context, and builds social clips or onboarding in minutes. It’s almost mindless - just prep your message, and the platform does the rest.

Quick Tool Landscape and When to Use Each

Every tool has a twist. Here’s what stands out:

- Colossyan: Auto-animated L&D videos from prompts - add words like "animated" or "cartoon-style" to control look. You can generate large scripts in one pass, customize avatars, voices, quizzes, translations, and SCORM export. Trusted by enterprise users for speed and compliance.

- Animaker: Good for choice - 100M+ assets, billions of avatars, and wide templates. A marketer reported double sales conversions and 80% less production time. Free plan is useful; paid plans unlock more features.

- Steve.ai: Anyone can go from script to animated or live-action video with zero prior experience. Three steps: enter script, pick template, customize.

- Renderforest: Turns short scripts into custom scenes you can adjust - speaker, tone, or colors in real time.

- Adobe Express: Simple cartoon animations. Auto-syncs lip and arm movements, but uploads limited to two minutes per recording.

- Powtoon: Covers doc-to-video, animated avatars, translation, scriptwriting, and more. Trusted globally for enterprise scale.

For L&D, Colossyan focuses on document-to-video, branded templates, interactive quizzes, branching, analytics, SCORM support, avatars, cloned voices, pronunciation, translation, and workspace management - all to modernize and measure training content at high volume.

Step-by-Step: Create an Animated Video from Text (Generic Process)

- Write your script (300-900 words is ideal). Each scene should handle one idea (2–4 sentences).

- Choose a style. Be specific in prompts (“animated,” “cartoon-style,” “watercolor,” etc.).

- Upload or paste your script; let the AI build scenes.

- Replace or adjust visuals. Swap automated graphics with better stock or your own uploads, tweak colors, backgrounds, or add music.

- Assign a voice. Pick natural voices, and teach the tool custom pronunciations if needed.

- Add captions and translations for accessibility and localization.

- Export the video in the format you need.

Step-by-Step: Building an L&D-Ready Animated Video in Colossyan

- Upload your policy PDF - Colossyan splits it into scenes and drafts narration.

- Apply your brand fonts, colors, logos using Brand Kits.

- Drag in avatars; use two on screen for role-play scenarios.

- Assign multilingual voices; add custom pronunciations or clone a trainer’s voice.

- Rephrase or cut narration with AI Assistant, insert pauses for natural speech.

- Use animation markers for key visual timing; add shapes or icons.

- Insert stock images or screen recordings for clarity.

- Add interactive multiple-choice questions or branching scenarios.

- Translate instantly to another language while keeping timing and animations.

- Review analytics: who watched, duration, and quiz results.

- Export to SCORM for LMS tracking.

- Organize drafts, invite reviewers, manage permissions at scale.

Prompt Library You Can Adapt

- Finance: “Animated coins dropping into a piggy bank to demonstrate money-saving tips.”

- HR onboarding: “Cartoon-style animation welcoming new hires, outlining 5 core values with icons.”

- Tutorial: “Animated step-by-step demo on resetting our device, with line-art graphics and callouts.”

- Compliance: “Branching scenario showing consequences for different employee actions.”

- Safety: “Cartoon-style forklift safety checklist with do/don’t sequences.”

- Cybersecurity: “Animated phishing vs legitimate email comparison.”

- Customer service: “Two animated characters role-play de-escalation.”

- Marketing: “Watercolor 30-second spring sale promo with moving text.”

- Executive update: “Animated KPI dashboard with bar/line animations.”

- Localization: “Animate password hygiene video in English and Spanish - visuals and timings unchanged.”

Script, Voice, and Pacing Best Practices

- Keep scenes short and focused (6–12 seconds, one idea per scene).

- Write clear, spoken sentences. Use pauses, highlight key terms, fix mispronunciations.

- In Colossyan, use Script Box for pauses, animation markers, and cloned voices.

Visual Design and Branding Tips

- Apply templates and Brand Kits from the start.

- Keep on-screen text high contrast and minimal.

- Use animated shapes to highlight points. Centralize assets in Colossyan’s Content Library.

Localization and Accessibility

- Colossyan supports avatars, multilingual voices, captions, dubbing, and Instant Translation.

Interactivity, Measurement, and LMS Delivery

- Branching and MCQs improve engagement.

- Analytics show view time, quiz results, and compliance tracking. Export to SCORM.

Scaling Production Across Teams

- Organize folders, drafts, review workflows, and user roles.

- Colossyan keeps high-volume production manageable.

Troubleshooting and Pitfalls

- Watch for free tier watermarks.

- Specify animation style in prompts.

- Break long scripts into multiple scenes.

- Store licensed media in the Content Library.

FAQs

- Can I turn documents into video? Yes. Upload Word, PDF, or PowerPoint in Colossyan.

- Can I have an on-screen presenter? Yes, up to 4 avatars in Colossyan.

- How do I localize a video? Instant Translation creates language variants.

- How do I track results? Analytics and SCORM export track everything.

- Can I mix live-action and animation? Yes, screen recordings and animated avatars can coexist.

Mini-Glossary

- Text-to-speech (TTS): Converts text to voice.

- SCORM: LMS tracking standard.

- Branching: Lets viewers choose paths in a video.

- Animation markers: Cues for timing visuals.

Suggested Visuals and Alt Text

- Prompt-to-video screenshot (alt: “Text-to-animation prompt using an AI tool”)

- Before/after scene board with branding (alt: “Auto-generated animated scenes with brand colors and fonts”)

- Interaction overlay with MCQ and branches (alt: “Interactive quiz and branching paths in training video”)

- Analytics dashboard (alt: “Video analytics showing learner engagement and scores”)

Opinion: Where All This Sits Now

AI animated video creation is fast, consistent, and nearly push-button for explainer or training needs. Colossyan is ideal for L&D or enterprises needing branding, interactivity, analytics, workspace management, and compliance. For one-off social videos, other tools might suffice, but for SCORM, analytics, and enterprise control, Colossyan leads.

AI-Generated Explainer Videos: Best Tools + Examples That Convert

Why AI explainers convert in 2025

Explainer videos hold people’s attention longer than most formats - about 70% of their total length, especially if they’re short and focused. The sweet spot is 60–90 seconds [source]. That staying power is what makes AI-generated explainer videos so effective for both marketing and training.

AI tools have changed the process. Instead of days editing or filming, you can now turn a prompt or a document into a finished video in minutes. These videos support multiple languages, have natural-sounding AI presenters, and even include interactive questions. You can see exactly who watched, how long they stayed, and whether they learned anything.

When people talk about “conversion” with explainers, the meaning shifts by context:

- If it’s marketing, conversion is whether viewers sign up, request a demo, or understand what your product does.

- In L&D and training, it’s about who finishes the video, how they score on questions, and whether learning sticks. Did people pass the compliance test? Did they remember the new process change?

You don’t need to hire a studio. Platforms like Invideo AI now generate full explainer videos with voiceover, background music, product screencasts, and subtitles from a single prompt - no cameras, no actors, just a few clicks.

At Colossyan, I see L&D teams take slide decks or long SOPs and convert them straight into branded, interactive videos. With Doc to video, Templates, and Brand Kits, it’s easy to keep every video on-message. And because we track plays, watch time, quiz scores, and SCORM pass/fail data, you know exactly what’s working - and what needs a rewrite.

What makes a high-converting AI explainer

If you want explainer videos that don’t just get watched, but actually change behavior, here’s what matters.

- Keep it short: 60–90 seconds works best. Hook viewers in the first 3–5 seconds. Focus on one problem and its solution.

- Structure is key: Set up a problem, show your solution, offer proof, and end with a clear next step. Leave the hard sales pitch for another time, especially with internal training.

- Accessibility widens your reach: Add captions and create separate versions for each language. Don’t mix languages in one video; split them for clarity.

- High-quality visuals help: Natural audio, real or realistic avatars (not uncanny valley robots), clear graphics. Use stock footage and animation markers to match the voiceover with visuals.

- Make it interactive: Training videos with a quiz or branching scenario get more engagement. Good analytics let you fix weak spots fast.

A simple checklist:

- 60–90s total

- Problem/outcome in first line (the hook)

- Captions always on

- One language per version

- Clean, matched visuals

- Conclude with one clear outcome

With Colossyan, I can script out pauses and add animation cues so visuals match up with the narration exactly. If there’s a tricky product name, the Pronunciations tool gets it right every time. Voice cloning keeps the delivery consistent. And Instant Translation spins out a new language variant - script, captions, interactions - in minutes.

Interactive MCQs and branching scenarios turn passive viewers into active learners. Our Analytics panel tells you exactly how long people watched, what quiz scores they got, and which scenes you might need to tighten up.

Best AI explainer video tools (and who they’re best for)

There’s a tool for every use case. Here’s a rundown:

Invideo AI: best for quick, stock-heavy explainers with AI actors. Trusted by 25M+ users, supports 50+ languages, loads of studio-quality footage, and even lets you make your own digital twin. It’s ideal for rapid 60–90s marketing videos with real human avatars, b-roll, and subtitles. Free plan is limited but fine for light use.

simpleshow: best for turning dense topics into short, clear explainers. Their Explainer Engine generates scripts, chooses simple visuals, and adds timed narration and music. One-click translation to 20 languages. Made for anyone, no production skills needed.

Steve.AI: best for fast story-driven shorts. With over 1,000 templates and cross-device collaboration, it’s built to keep videos at the high-retention 60–90 second range. Great for social explainers with punchy hooks.

NoteGPT: best for one-click document-to-animation. Converts PDFs or Word files into animated explainers - auto voiceover, subtitles, editable scripts. Complete a training or lesson video in under 10 minutes. Used widely in education.

Synthesia: best for enterprise avatars, languages, and compliance. Has over 230 avatars, 140 languages, and top-tier compliance. Screen recording, AI dubbing, and closed captions included. If you need consistent presenters and solid security, this is it.

Imagine Explainers: best for instant, social-first explainers. You can tag @createexplainer in a tweet, and it’ll auto-generate a video from that thread. Perfect for trend-reactive marketing.

Pictory.ai: best for boiling long content into snappy explainers. Turn webinars or articles into concise, subtitled highlight videos. Huge stock library and compliance focus.

Colossyan: best for interactive, SCORM-compliant training explainers at scale. Designed for L&D to turn docs and slides into interactive, on-brand videos - quizzes, branching, analytics, full SCORM compliance, and quick brand customization. Instant Avatars and voice cloning make it easy to personalize content across large, global teams.

Real examples that convert (scripts you can adapt)

Example 1: 60-second SaaS feature explainer

Hook: “Teams lose hours each week on [problem].”

Problem: Show the frustrating workflow (screen recording helps).

Solution: Demo the streamlined steps.

Proof: Drop a client quote or key metric.

Close: Restate the outcome (“Now your team saves 5 hours a week.”).

In Colossyan, I’d import the feature’s PPT, use the built-in screen recording for the demo, then pick a template and sync animation markers to highlight UI clicks. Captions on by default, and after launch, I’d check Analytics to see where viewers dropped off or replayed.

Example 2: 90-second compliance microlearning

Hook: “Three decisions determine whether this action is compliant.”

Walk viewers through a branching scenario: each choice links to an outcome, a quick explanation, then a final quiz.

Recap the single rule at the end.

At Colossyan, I’d use Doc to video for the policy PDF, add a branching interaction for decision points, set a pass mark on the quiz, and export as SCORM to track completions in the LMS. Analytics would show which choices or wording confuse most learners.

Example 3: 75-second onboarding explainer

Hook: “New hires finish setup in under 5 minutes.”

Steps 1–3 with over-the-shoulder narration from a recognizable avatar.

Captions and translated variants for different regions.

I’d import speaker notes from the HR deck, build an Instant Avatar from the HR lead, fix system names with Pronunciations, clone the HR’s voice, and spin out Spanish/German variants for each region.

Example 4: Social explainer from a thread

Hook: “You’re doing X in 10 steps; do it in 2.”

30–45 seconds, bold text overlays, jumpy transitions.

In Colossyan, I’d start from scratch, heavy on text and shapes for emphasis, then tweak music and pacing for a vertical mobile feed.

The storyboard-to-animation gap (and practical workarounds)

A lot of creators want true “script or storyboard in, Pixar-like animated video out.” Reality: most tools still don’t hit that. Synthesia has the best avatars, but doesn’t do complex animation. Steve.AI gets closer for animation but you lose some visual polish and control.

Right now, the fastest and cleanest results come by sticking to templates, using animation markers to time scene changes, and prioritizing clear visuals over complex motion. Stock, AI-generated images, bold text, and light motion go a long way.

At Colossyan, we can tighten scenes with animation cues, use gestures (where avatars support it), and role-play conversations using Conversation Mode (two avatars, simple back-and-forth) for more dynamic scenarios - without a full animation crew.

Buyer’s checklist: pick the right AI explainer tool for your team

Languages: Need broad coverage? Synthesia, Invideo, and Colossyan all offer 20–140+ languages; Colossyan translates script, on-screen text, and interactive elements in one workflow.

Avatars/voices: Want custom avatars and consistent voices? Invideo, Synthesia, and Colossyan have depth. Instant Avatars and easy voice cloning are strengths in Colossyan.

Compliance/training: If SCORM and LMS tracking matter, Colossyan stands out: direct SCORM 1.2/2004 export with pass marks, play/quiz Analytics, CSV exports.

Speed from docs: For one-click doc-to-video, NoteGPT and Colossyan’s Doc/PPT/PDF Import take in almost any source.

Stock/assets: If you need a massive media library, Invideo and Pictory lead, but Colossyan’s Content Library keeps assets on-brand and organized for the whole team.

Collaboration/scale: Enterprise workspaces, roles, and approval are easier in Invideo’s Enterprise and Colossyan’s Workspace Management.

Free plans: Invideo free tier has limits on minutes and watermarks; Synthesia allows 36 minutes/year; NoteGPT is free to start and edit at the script/voice/subtitle level.

Step-by-step: build a high-converting explainer in Colossyan

Step 1: Start with your source material. Upload a manual, deck, or brief through Doc to video or PPT/PDF Import - Colossyan breaks it into scenes and drafts a first script.

Step 2: Apply Brand Kit - fonts, colors, logos - so even the first draft looks on-brand.

Step 3: Pick an Avatar or record an Instant Avatar from your team. Clone your presenter’s voice, and set custom Pronunciations for product or policy names.

Step 4: Don’t just tell - show. Screen record tricky software steps, then add animation markers to sync highlights with the narration.

Step 5: Insert a quiz (MCQ) or Branching scenario to make viewers think. Preview scene by scene, set pass marks, export as SCORM for the LMS, and check Analytics for engagement.

Step 6: Ready for multiple markets? Instant Translation turns a finished video into over 80 languages - script, captions, interactions - while keeping the look and structure.

Optimization tips from real-world learnings

One outcome per video works best. If you can’t compress the story to 60–90s, you’re trying to cover too much. Start with a punchy problem - don’t ease in. Use text overlays to hammer the point.

Always turn on captions - for both accessibility and higher completion. Most platforms, Colossyan included, generate these automatically.

Translate for your biggest markets. Colossyan translates everything at once and keeps the scenes clean, saving hours of reformatting.

Use analytics for continuous improvement:

- For courses: if scores are low or people drop out, rethink those scenes.

- For product demos: test new hooks or visuals and see what holds attention.

AI-generated explainers make it possible to move fast without cutting corners - whether for marketing, onboarding, or compliance. With the right approach and the right tool, you get measurable engagement and training outcomes, even as needs or languages scale. If you want on-brand, interactive L&D videos that deliver real results, I’ve seen Colossyan do it at speed and at scale.

AI Animation Video Generators From Text: 5 Tools That Actually Work

The AI boom has brought text-to-animation from science fiction into daily workflows. But with dozens of tools promising "make a video instantly from text," how do you know what actually gets results? Here, I’m cutting through the noise. These are the five best AI animation video generators from text. Each one delivers on critical points: real on-brief visuals from plain prompts, editable output, clear export and rights, and most important, actual user proof.

This isn’t a hype list. Every tool here makes text-to-video easy without constant manual fixes. If you need to turn a script into something polished fast, these are the platforms that work. Plus, if you’re building learning, onboarding, or policy explainers and need your output SCORM-ready, I’ll show you exactly where Colossyan fits.

How We Evaluated

I looked beyond the marketing pages. Each tool on this list had to meet high standards:

- Quality and control: Can you guide visual style, animation, lighting, and pacing?

- Speed and scale: How long do clips take? Are there character or scene limits? Can you batch projects?

- Audio: Are there real voice options, not just monotone bots? Is text-to-speech (TTS) language support strong?

- Editing depth: Can you swap scenes, voices, visual style, and update single scenes without starting over?

- Rights and safety: Is commercial use clear-cut, or riddled with fine print or dataset risks?

- Training readiness: Can you turn raw video into interactive, SCORM-compliant modules? (Where Colossyan shines.)

For every tool, you’ll see what it’s genuinely best at, plus practical prompt recipes and real-world results.

The Shortlist: 5 AI Text-to-Animation Tools That Deliver

- Colossyan - best for quick, automated animation videos with multilingual voiceovers

- Adobe Firefly Video - best for short, cinematic, 5-second motion with granular style controls

- Renderforest - best for template-driven explainers and branded promos

- Animaker - best for character-centric animation with a huge asset library

- InVideo - best for scene-specific edits and localized voiceover at scale

Colossyan Text-to-Animation - Fast From Prompt to Full Video

Colossyan’s text-to-animation generator stands out because it truly automates the process. You give it a script or prompt and get a video complete with AI voices, stock animation, background music, and captions. It’s quick - you might cut your editing time by 60%, according to power users.

Here’s how it works: plug in your script and use descriptors like "animated," "cartoon-style," or "graphics" to get animation (not just stock video). You get up to 5,000 TTS characters per run. Colossyan supports multiple languages and accents, so localization is simple.

Where it works best is when you need an explainer, policy video, or onboarding module fast. You can swap default footage for different animated looks—realistic, watercolor, even cyberpunk - plus add influencer-style AI avatars.

Limitations? Free exports are watermarked, and you need explicit prompts to avoid mixed stock assets. Paid unlocks more features and watermark removal.

Example prompt:

"Create a 60-second animated, cartoon-style safety explainer with bold graphics, friendly tone, and clear on-screen captions. Include watercolor-style transitions and upbeat background music. Language: Spanish."

Adapting it for training in Colossyan is simple. Turn your standard operating procedure into a module with Doc to Video. Import your assets from Colossyan drafts, apply your Brand Kit for consistent visuals, add avatars to speak the script (with multilingual output), and embed quizzes or MCQs. When it’s time to launch, export as SCORM and track real results in Colossyan Analytics.

Adobe Firefly Video - Cinematic 5-Second Motion With Precision

Adobe Firefly is about quality over quantity. It outputs five-second, 1080p clips, perfect for cinematic intro shots, product spins, or animated inserts. You get deep control over style, lighting, camera motion, and timing, so if you care about visual fidelity and brand consistency, Firefly excels.

You prompt with either text or a single image, and Firefly can animate objects into lifelike sequences. All clips are commercially usable and trained on Adobe Stock/public domain materials.

Where it excels: When you need perfect motion for product cutaways, micro-explainers, or branded short social content. Key limitation: each clip is capped at five seconds, so it’s not for full walkthroughs or longer training pieces.

Workflow: animate a 2D product render for a glossy hero shot, export, and import into your main video sequence. With Colossyan, use Firefly for motion graphics inserts, import it as a scene background, add AI avatar explanation, sync voice and visuals with Animation Markers, and drop it into an interactive scenario with Branching. Track knowledge checks via SCORM export.

Renderforest - Guided Text-to-Animation for Explainers and Promos

Renderforest is a go-to for non-designers looking for clear guidance and fast results. You go from idea or script to choosing your style and speaker, then let the AI suggest scenes. You can edit voiceover, transitions, and fonts before exporting - already in the right format for social, marketing, or internal explainers.

Their workflow is streamlined, supporting both animations and realistic videos. They’ve got big customer proof - 34 million users, 100,000+ businesses. Free to start (watermarked), then paid for higher export quality.

Use it when you want a plug-and-play template: onboarding, product demo, or startup pitch. The real value is in its guided approach. It means less choice overload, more speed.

Sample prompt:

"Text-to-animation explainer in a flat, modern style. 45 seconds. Topic: New-hire security basics. Calm, authoritative female voiceover. Include scene transitions every 7-8 seconds and bold on-screen tips."

For training, import Renderforest drafts into Colossyan’s Content Library, break up your script into slides/scenes, assign avatars for each section, and drop in MCQs. Interactive, tracked, and ready for export to any LMS.

Animaker - Character-Focused Animation at Scale

Animaker gives you sheer breadth. You can build almost any scenario - character animations for compliance, deep-dive explainers, or company-wide campaigns. The asset library is massive: over 100 million stock items, 70,000 icons, and billions of unique character options. You also get advanced tools like green screen and avatar presentations.

Real-world results stand out: GSK built 2,000+ videos, saving $1.4M. Others doubled sales or produced 70+ training modules with major time savings.

Best fit: HR, L&D, or marketing teams running recurring series with ongoing updates (e.g., new policy explainer every month, departmental updates).

Potential downside: with so many options, some users can feel lost. But for teams with a plan, it’s unmatched for animation variety.

If you’re pairing this with Colossyan, keep your visual storytelling/character arcs in Animaker, but move scripts into Colossyan for tracking, quizzes, Conversations (multi-avatar role-play), and LMS compliance.

InVideo - Scene-Specific Regeneration and Global Reach

InVideo brings scale and iteration. You can regenerate single scenes without touching the rest of the video, which is a real timesaver for last-minute tweaks. The platform covers 16 million licensed clips, AI avatars/actors in 50+ languages, and offers full commercial rights.

User reports highlight both speed and financial impact - production drops from hours to minutes, and some creators are monetizing channels in under two months.

If you want to localize, personalize, and test variants quickly, like marketing teasers or global product intros, InVideo is set up for it.

Prompt example:

"Create a 30-second animated product teaser. Energetic pacing, bold kinetic text, English narration with Spanish subtitles. Prepare variants for 1:1 and 9:16."

Use InVideo for teaser scenes or snackable intros, then build full training modules in Colossyan with your brand’s color and voice, localize at scale with Instant Translation, and add your assessment layers before SCORM export.

How These Tools Compare (Fast Facts You Can Use)

- Output length:

- Adobe Firefly: 5s, high-res

- Colossyan: full videos (TTS up to 5,000 chars/run)

- Renderforest/Animaker/InVideo: support longer storyboards

- Editing:

- Firefly: precise camera/lighting/motion

- InVideo: per-scene regeneration

- Colossyan/Renderforest/Animaker: edit scenes, swap voices, hundreds of style templates

- Voices & Languages:

- Colossyan, InVideo, Animaker: multilingual, neural TTS, subtitle/voiceover built-in

- Rights & Exports:

- Firefly: brand-safe (Adobe Stock)

- InVideo: commercial rights standard

- Others: paid plans remove watermark, unlock full exports

- Speed/Scale:

- Colossyan and Animaker users report 60–80% time savings

- Renderforest and InVideo tuned for quick, batch projects

Tip: In Colossyan, include "animated, cartoon-style" in your prompt to force animation. In Firefly, add details like "1080p, 5 seconds, slow dolly-in" for cinematic cutaways.

When You Need Training Outcomes and LMS Data, Layer in Colossyan

If you’re in Learning & Development, marketing, or HR, video is a means to an end. What really matters is how fast you can turn policy docs, safety SOPs, or onboarding decks into engaging, branded, and measurable learning.

That’s where Colossyan really helps. You can:

- Convert documents or slides into video instantly, split into scenes, generate script, apply animation, and assign an avatar for narration

- Use Brand Kits for instant visual consistency

- Add assessments (MCQs, Branching) for actual knowledge checks

- Export as SCORM (1.2/2004) and set pass marks, so every outcome is tracked in your own LMS

- Get analytics by user, video, scene - track who watched, how far, and how they scored

Example: Upload a 15-page security policy as a PDF, each page becomes a scene, assign script to avatars, and insert three knowledge checks. Create instant translations for global rollout. Export SCORM, upload to your LMS, and track completions in real time.

The Complete Guide To Choosing An e-Learning Maker In 2026

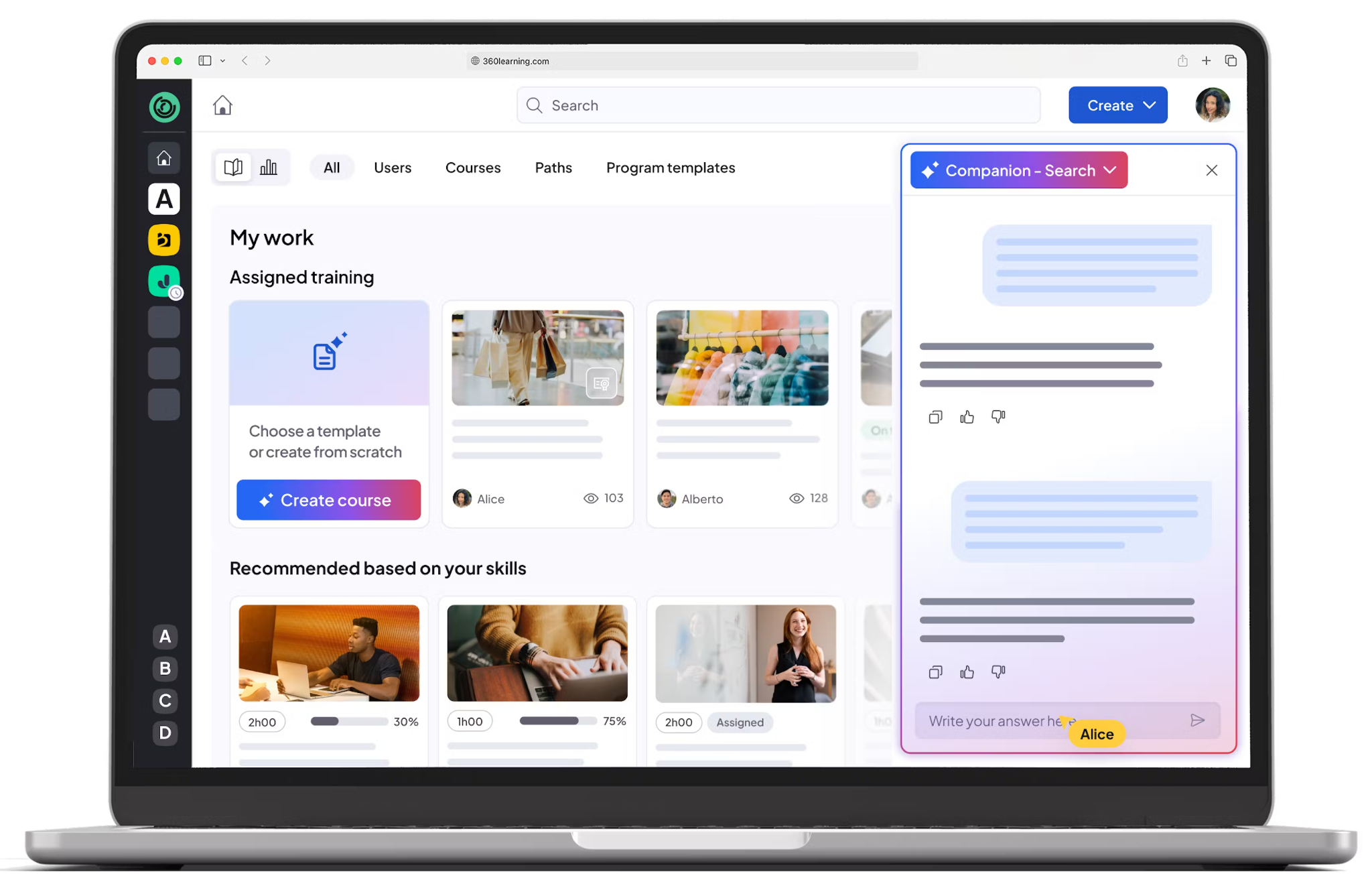

Choosing an e-learning maker in 2026 isn’t easy. There are now more than 200 tools listed on industry directories, and every product page claims a new AI breakthrough or localization milestone. The good news is certain trends have become clear. Cloud-based, AI-native tools with instant collaboration, scalable translation, and reliable SCORM/xAPI tracking are taking over - and the market is moving from slow, high-touch custom builds to simple, reusable workflows and quick updates.

This guide lays out how to navigate the choices, what matters now, how to judge features, and where video-first tools like Colossyan fit.

The 2026 landscape: why making the right choice is hard

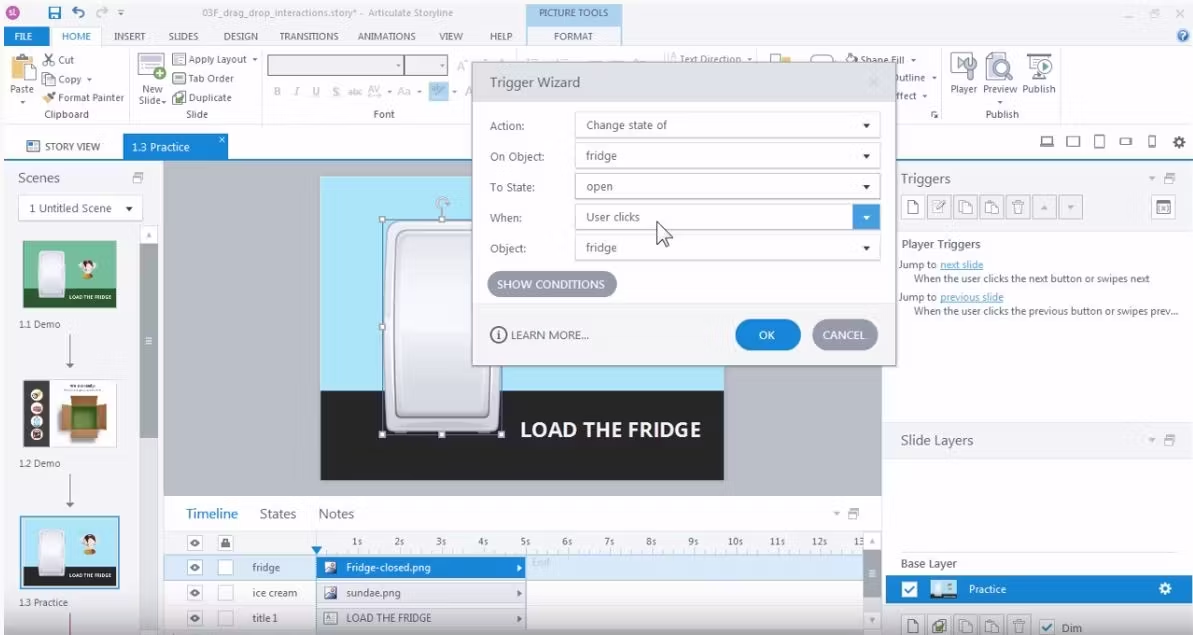

The pace of change is the first challenge. The authoring tool market listed over 200 products by late 2025. AI isn’t a checkbox now; it's embedded everywhere. Tools like Articulate 360 use agentic AI to turn static materials into courses in minutes. Others let you upload a policy document or slide deck and see an interactive course ready almost instantly.

Cloud-native platforms are now the baseline. Their speed comes from shared asset libraries, in-tool review, and “no re-upload” updates - features that desktop tools just can’t match (Elucidat’s breakdown).

Localization quickly shifted from nice-to-have to critical. Some tools handle 30 languages, others hit 75 or 250+, and all claim “one-click” translation. Yet the quality, workflow, and voice options vary a lot.

And analytics still lag. Most systems push data to your LMS, but not all have native dashboards or support deeper learning records like xAPI.

When people compare on Reddit or in buyer guides, the same priorities keep coming up: a clean interface, fair pricing, SCORM tracking everywhere, help with translation, and the option to pilot before you buy.

What is an “e-learning maker” now?

It’s a broad term, but most fall into three camps:

- All-in-one authoring platforms: Examples are Articulate Rise/Storyline, Adobe Captivate, Elucidat, Gomo, dominKnow | ONE, Lectora, iSpring, Easygenerator, Genially, Mindsmith. These let you build, localize, and (sometimes) distribute learning modules of all types.

- Video-first or interactive video platforms: Colossyan turns Word docs, SOPs, or slides into videos with avatars, voiceovers, and quizzes, ready for LMS use. Camtasia is video-first too, but it focuses more on screen capture.

- LMS suites or hybrids: Some are bundling in authoring and distribution, but most organizations still export SCORM or xAPI to their own LMS/LXP.

Know what you need: authoring, distribution, analytics, or a mix? Map this before you start shortlisting.

A decision framework: twelve checks that matter

1. Speed to create and update.

AI script generation, document import, templates, and instant updates are the gold standard. Elucidat says templates make modules up to 4x faster; Mindsmith claims 12x. With Colossyan, you upload a doc or slide deck and get a polished video draft with avatars and voice in minutes.

2. Scale and collaboration.

Look for: simultaneous authoring, real-time comments, roles and folders, asset libraries. Colossyan lets you assign admin/editor/viewer roles and keep teams organized with shared folders.

3. Localization and translation workflow.

Don’t just count languages; check workflow. Gomo touts 250+ languages; Easygenerator does 75; Genially covers 100+; others less. Colossyan applies Instant Translation across script, screen text, and interactions, and you get control over pronunciation.

4. Distribution and update mechanism.

SCORM support is non-negotiable: 1.2 or 2004. Dynamic SCORM lets you update content in place without re-exporting (Easygenerator/Genially); Mindsmith pushes auto-updating SCORM/xAPI. Colossyan exports standard SCORM with quiz pass/fail and share links for fast access.

5. Analytics and effectiveness.

Genially provides real-time view and quiz analytics; Mindsmith reports completions and scores. Many tools still rely on the LMS. Colossyan tracks video plays, quiz scores, and time watched, and exports to CSV for reporting.

6. Interactivity and gamification.

Genially shows 83% of employees are more motivated by gamified learning; branching, simulated conversations, MCQs are now table stakes. On Colossyan, you build quizzes and branching scenarios, plus multi-avatar conversations for real-world skills practice.

7. Mobile responsiveness and UX.

True responsiveness avoids reauthoring for every screen size. Rise 360 and Captivate do this well. Colossyan lets you set canvas ratios (16:9, 9:16, 1:1) to fit device and channel.

8. Video and multimedia.

Expect slide-to-video conversion, automatic voiceover, avatars, brand kits. With Colossyan, you drag in slides, choose avatars (including your own), auto-generate script, and add music, stock video, or AI-generated images.

9. Security and privacy.

ISO 27001, GDPR, SSO, domain controls - must-haves for any regulated environment. Colossyan lets you manage user roles and permissions; check your infosec rules for more details.

10. Accessibility.

Support for closed captions, WCAG/508, high contrast, keyboard nav. Mindsmith is WCAG 2.2; Genially and Gomo publish accessibility statements. Colossyan exports SRT/VTT captions and can fine-tune pronunciations for clear audio.

11. Pricing and TCO.

Subscription, perpetual, or free/open source - factor in content volume, translation, asset limits, and hidden support costs. Open eLearning is free but manual. BHP cut risk-training spend by 80%+ using Easygenerator; Captivate is $33.99/month; iSpring is $720/year.

12. Integration with your stack.

Check for SCORM, xAPI, LTI, analytics export, SSO, and content embedding. Colossyan’s SCORM export, share links, and analytics CSV make integration straightforward.

Quick vendor snapshots: strengths and trade-offs

Articulate 360 is great for a big organization that wants AI-powered authoring and built-in distribution, but Rise 360 is limited for deeper customization. Adobe Captivate offers advanced simulations and strong responsive layouts but takes longer to learn. Elucidat is all about enterprise-scale and speed, while Mindsmith leads for AI-native authoring and multi-language packages. Genially stands out for gamified interactivity and analytics, and Gomo wins on localization breadth (250+ languages) and accessibility.

Colossyan’s core value is rapid, on-brand video creation from documents and slides - useful if you want to turn existing SOPs or decks into avatar videos for scalable training, with quizzes and analytics built in. For basic software simulation or deeply gamified paths, you might pair Colossyan with another specialized authoring tool.

Distribution, tracking, and update headaches

SCORM 1.2/2004 is still the standard - you want it for LMS tracking. Dynamic SCORM (Easygenerator, Genially) or auto-updating SCORM (Mindsmith) kill the pain of re-exports. If your LMS analytics are basic, pick a tool with at least simple dashboards and CSV export. Colossyan handles standard SCORM, as well as direct link/embed and built-in analytics.

Localization at scale

Language support ranges from 30+ to 250+ now. But don’t just count flags: test the voice quality, terminology, and whether layouts survive language expansion. Colossyan lets you generate variants with Instant Translation, pick the right AI voice, and edit separate drafts for each country. Brand terms won’t be mispronounced if you manage Pronunciations per language.

Interactivity and realism

Gamification is provable: the University of Madrid found a 13% jump in student grades and 83% of employee learners say gamified modules are more motivating. For compliance, use branching scenarios. Skills training works better with scenario practice or “conversation mode” - something you can build with multi-avatar videos in Colossyan.

Security, governance, and accessibility

Always confirm certifications and standards - ISO 27001, SOC 2, GDPR. Use role-based permissions and asset libraries to keep governance tight. Colossyan’s workspace management and access controls were built for this, but final oversight depends on your own team.

TCO and budgeting

Subscription may seem cheaper, but annual content, translation, and update workloads matter more. Easygenerator cut BHP’s risk training spend from AU$500k to under AU$100k. The real gain comes from reusable templates and dynamic update paths. Colossyan reduces ongoing spend by slashing video creation time and letting anyone with docs or slides drive production.

Picking your use cases and matching tools

Compliance needs detailed tracking and branching, so think Gomo or Captivate. For onboarding or sales, speed and multi-language are key; Colossyan lets you push out consistent playbooks across markets. Software training means screen demos - Captivate is strong here; Colossyan’s screen recording plus avatars is a good fit for guided walk-throughs.

Implementation: a 90-day plan

Start small: pilot 3–5 doc-to-video builds, test export to LMS, check analytics and language variants. Next, standardize templates, set up brand kits and permissions, integrate with your analytics. Expand to 10–20 full modules, add branching, and run A/B tests on engagement.

FAQs and final reality checks

SCORM is still necessary. Authoring tools aren’t the same as an LMS. Agentic AI means auto-structuring your content, like turning a manual into an interactive video with quizzes. Cloud is standard unless you need offline creation for rare cases. Always test your translations for voice and terminology.

Colossyan’s place in the stack

I work at Colossyan, where our focus is helping L&D and training teams turn existing content - documents, SOPs, slides - into engaging, on-brand, interactive videos quickly. You upload a file, choose an AI avatar (even your own), select a brand kit, add quizzes or branches, translate in a click, and export a SCORM module to plug into your LMS. Analytics reporting, closed captions, and branded voice controls are part of the workflow. For teams who want to move fast, localize easily, track outcomes, and deliver visually consistent training without specialist design skills, Colossyan is a strong complement or even main workhorse - with the caveat that for very deep gamification or advanced simulations, you might connect with a more specialized authoring tool.

The bottom line

Match your tool to your needs: speed, collaboration, scalable translation, interactivity, and analytics matter most. Use pilots and a detailed RFP checklist to separate real value from feature noise. And if quick, scalable, high-quality corporate training video is a core use case, Colossyan is ready to help - especially when you need to go from static resources to interactive, trackable videos without hassle.

Top Avatar Software For Training, Marketing & Personal Branding

Choosing the best avatar software comes down to what you need: live interaction, game or app development, mass video content for training or marketing, or a focus on privacy. There’s a lot out there, and most options cater to a specific use case. Here are the main categories, who they're best for, and strong examples from the market—including how we use Colossyan to streamline and scale enterprise video training.

Real-time streaming and VTubing

If you need your avatar to appear live on a stream or in a webinar, VTubing and avatar streaming tools are your answer. Animaze is one of the most mature. Over 1 million streamers, VTubers, and YouTubers use it. It works with standard webcams or even iPhones, so no special 3D setup is required. You can stream as an animated character on Twitch, YouTube, TikTok, or use it in Zoom meetings.

Animaze offers broad integration—think OBS, Streamlabs, Discord, Google Meet. It accepts lots of avatar formats, like Live2D or Ready Player Me. Advanced tracking (Leap Motion, Tobii Eye Tracker) means your digital persona can even match your hand or eye movements. You also get props, backgrounds, emotes, and a built-in editor to bring in custom 2D/3D models.

If you want free, no-frills real-time facial animation for quick Zoom or Teams sessions, Avatarify does the job. But VTubing tools aren’t made for learning management (LMS), SCORM, or detailed analytics. They’re about being “live” and engaging your audience on the spot.

Developer-grade 3D avatars for apps, games, and the metaverse

Building your own app, metaverse, or game? You need a developer ecosystem that can generate and manage custom avatars across platforms. Ready Player Me is built for exactly this—25,000+ developers use their infrastructure to get avatars to work in Unity, Unreal, and many other engines. Their value is in asset portability: you can import avatars or cosmetics without having to rebuild them for each project. Their AI will auto-fit, rig, and style assets to match.

Want a user to build an avatar from a selfie that can go straight into your game? That’s Avatar SDK. Their MetaPerson Creator runs in the browser; snap a single photo and get a full animatable 3D avatar, customizable down to facial features and clothes. There’s an SDK for Unity and Unreal, or you can run the pipeline on-premises for privacy.

Avaturn is similar. One selfie, 10,000 possible customizations, instant export to Blender, Unity, Unreal, and more. The difference is that Avaturn also focuses on making avatars instantly usable for animation and VTubing, with ARKit and Mixamo compatibility. For apps or virtual worlds needing embedded user-created avatars, either SDK will work.

But unless you’re running an app or game platform, these are usually overkill for standard L&D, marketing, or HR needs.

AI video avatar generators for training, marketing, and branding

This is where things get interesting for teams who need to modernize training, make scalable marketing explainers, or give a consistent face to brand/customer comms—especially at global scale.

Synthesia, HeyGen, D-ID, Colossyan, Elai, and Deepbrain AI are leading the way here. Colossyan stands out for training at enterprise scale. Here’s what I actually do with Colossyan to help organizations transform their process:

Imagine you have to turn a new company policy into interactive training for 12 markets in a tight timeframe. Here’s my workflow:

- I import the policy as a PDF; each slide becomes a scene.

- Doc-to-Video auto-generates narration scripts and scenes.

- I turn on Conversation Mode, so two avatars role-play employee/manager dialog—with real, recognizable faces thanks to Instant Avatars (recorded or uploaded short clips).

- For key compliance moments, I insert quiz questions and branching. Learner answers shape what happens next.

- Need terms read a certain way? I set custom pronunciations and use voice cloning to capture the real subject-matter expert's style.

- I apply the brand kit for logos, colors, and fonts, switch to 9:16 aspect for mobile delivery, and add interaction markers for well-timed visuals.

- Instant Translation lets me spin out Spanish, German, or Japanese variants, each with a native voice and consistent timing.

- I export as SCORM 2004, with pass/fail set for quizzes, upload to the LMS, and analytics show me who watched, finished, or passed—down to the name and score.

This workflow easily drops production time for interactive, localized training from weeks to hours.

Marketing teams also use Colossyan by scripting updates with Prompt-to-Video, building product explainers using the CEO’s Instant Avatar and cloned voice, and batch localizing variants with translation, all while keeping the brand visuals fixed. I can download MP4s for web or extract SRT captions. Engagement analytics let me pause (or change) production if viewers drop off early.

Privacy-first or on-device generation

Sometimes privacy matters most. RemoteFace keeps everything on your machine—images never leave the device, but you can still appear as an avatar in Zoom, Teams, or Meet. This is best for healthcare, government, or any sector with sensitive data.

How to decide: pick by use case

If you want live engagement—webinars, virtual meetups, streaming—stick to tools like Animaze or Avatarify.

If you want avatars in your product or game, Ready Player Me, Avatar SDK, or Avaturn will provide SDKs, asset management, and portability that generic “video avatar” services can’t.

If you need training videos, onboarding, multi-lingual explainer content, or standardized messaging—focus on AI video avatar generators. I’ve seen the fastest results and simplest LMS integration come from Colossyan. Features like SCORM export, quizzes, branching, analytics, and one-click translation are must-haves for compliance and L&D.

If you’re a creator or marketer focused on “digital twin” effects—i.e., your own look and cloned voice—Colossyan, HeyGen, and Synthesia all support it, but the workflow and speed are different. Colossyan’s Instant Avatars + voice lets you create a real brand spokesperson in minutes; Synthesia requires a more formal shoot, but matches on security/compliance.

On budget or just want a cool new profile image? Try creative tools like Fotor or Magic AI. Read community threads if you want to see how others stack up tools—a recent Reddit thread showed people still search for affordable AI avatar generators, VRChat options, and quick animated character tools.

Real examples

"Animaze supports advanced tracking with Leap Motion and Tobii Eye Tracker, and it integrates with OBS, Streamlabs, and Zoom—ideal for live webinars or VTubing."

"Avatar SDK’s MetaPerson Creator turns a single selfie into an in-browser, animatable 3D avatar recognizable from your photo, with Unity and Unreal integrations."

"Ready Player Me’s ‘any asset, any avatar’ infrastructure helps studios import external avatars and cosmetics without rebuilding pipelines, extending asset lifetime value."

From a 2025 industry roundup: D-ID enables real-time interactive agents with RAG and >90% response accuracy in under two seconds; Colossyan emphasizes scenario-based training, quizzes, and SCORM export; Deepbrain AI reports up to 80% time and cost reductions.

HeyGen lists 1,000+ stock avatars and a Digital Twin mode to record once and generate new videos on demand; language claims vary by source, so verify current coverage.

Which avatar software is best for corporate training?

Look for SCORM, quizzes/branching, analytics, and brand controls. Colossyan is purpose-built for this, combining document-to-video, scenario creation, instant translation, and LMS-ready exports.

What’s the difference between VTubing tools and ai video avatar generators?

VTubing is live, for streaming and engagement. AI video avatar generators like Colossyan or Synthesia create scripted, on-demand videos for structured training or marketing.

How can I create a digital twin for my brand?

In Colossyan, record a short clip to create an Instant Avatar and clone your voice. In HeyGen, use Digital Twin mode. In Synthesia, order a custom avatar; it takes about 24 hours.

How do I add avatars to my LMS course?

Produce interactive video in Colossyan, insert quizzes and branching. Export as SCORM 1.2/2004, set pass criteria, upload to your LMS, and monitor completions with Analytics.

Final thoughts

Most teams fall into one of three needs: live avatar presence for dynamic meetings and streams, developer infrastructure for in-app avatars, or scaled video creation for L&D and marketing. Colossyan is where I’ve seen L&D and comms teams get the most value. Document-to-video, customizable avatars, quizzes, instant translation, and SCORM/analytics make it possible to build, localize, and track on-brand interactive content at scale, without a production studio.

Check current pricing and language features before you commit - these change fast. And always match the tool to your real use case, not just the trend.

AI Ad Video Generators Ranked: 5 Tools That Boost Conversions

AI ad video generators have changed how businesses, agencies, and creators make ads. Five minutes of footage can mean thousands in revenue - if you get the details right. But with so many platforms, it’s easy to get lost in stats, features, and vendor promises. Here’s my honest look at the best AI video ad makers for conversion lift, what actually makes them work, and why operationalizing with your team matters as much as the software itself.

The top 5 ai ad video generators

Arcads.ai - best for direct-response teams chasing revenue

Arcads.ai stands out for teams who want scale and numbers to back it up. It offers a library of 1,000+ AI actors and localizes ads in 30+ languages, allowing you to launch, iterate, and test quickly in multiple markets. Their sponsored results are bold: campaigns like Coursiv saw 18.5K views and $90K revenue (+195%), and MellowFlow notched 25.2K views and a reported +270% revenue lift. These are vendor-reported, but the direction is clear - Arcads is built for people who want to track every click and dollar.

The fit is strongest for fast-growth D2C brands, app studios, or agencies hungry for ROI and creative scale. Arcads cards show influencer metrics too, with some accounts reporting up to +195% growth in followers.

One gap: What you see is based on what's shared by the vendor. Always validate with your own testing and attribution.

How does Colossyan help here? If your team, or your creator network, needs to follow a repeatable playbook to get similar results, we make it easy. I can turn your latest ad brief or testing framework into an interactive, trackable training video. Want your creators certified before running global campaigns? Add MCQs and analytics to ensure every market knows which hooks and formats to run. And, with Instant Translation, your training adapts as easily as Arcads’ output does - no more copy-paste errors or lost-in-translation creative.

Invideo AI - best for global scale and brand safety

Invideo AI covers the globe, with support for more than 50 languages and over 16 million licensed clips built in. You get an actor marketplace spanning the Americas, Europe, India, and more, and their privacy guardrails are layered: think consent-first avatars, actor-controlled usage blocks, face-matching to prevent unauthorized likeness, and live moderation.

If you need to avoid copyright headaches or want to ship ad variants safely to dozens of markets, you’ll find most needs covered. Invideo’s anecdotal case study claims a customer cut ad production time from six hours to thirty minutes and doubled sales. Is that a lock for every business? No. But it shows the workflow is fast.

In practice, Invideo is best for larger teams or brands who need a single system to manage rights, scale, and creative quickly.

Colossyan fits in by making your training process match this scale. I can import your playbooks from PDF or PPT and turn them into video lessons, branching based on region or campaign logic. Voices and Pronunciations features guarantee your AI actors say every branded term just the way your markets expect. And all these assets remain on-brand, thanks to Brand Kits and centralized management.

Deevid AI - best for rapid, flexible testing

Deevid AI markets itself as the no-crew, no-delay solution. You put in text, images, or even just a product URL, and get out a video with visuals, voiceover, and animation. Its most original offer is AI Outfit Changer - instantly swap clothes on your AI presenter - which means you can refresh ads for different seasons, regions, or A/B tests without a re-shoot. It’s especially useful for ecommerce, explainers, and UGC-style ads optimized for TikTok, Reels, or Shorts.

Speed is Deevid’s promise - ad variants go from idea to output in minutes. This is for marketers or agencies needing new creative every week, not once a quarter.

Brand fit may require some extra work compared to pricier, bespoke editing; and if you’re in a category where realism matters for virtual try-ons, you’ll want to validate that feature first.

When your goal is to enable your own team, or creators, to test and report on dozens of variants rapidly, Colossyan helps by translating your creative testing matrix into a micro-course. I use our interactive video and Conversation Mode to role-play feedback cycles, embed certified naming conventions, and standardize review checkpoints - all while keeping assets easy to find in our Content Library.

Amazon Ads AI video generator - best for sponsored brands speed

Amazon Ads’ AI Video Generator is purpose-built for one job: churning out Sponsored Brands ad videos at scale. It’s free, English-only (for now), and claims to generate six SB videos in up to five minutes per request. No editing skills required, and it leverages your product detail page to produce platform-ready variants.

This tool is best for U.S.-only Amazon sellers and vendors working to fill the Sponsored Brands shelf with heaps of quick, on-brand video creative. Its creative control is lighter than paid tools, but nothing matches the time-to-first-ad for this format.

Colossyan lets you bottle this repeatability for your teams - make a single spec training on what a good SB ad looks like, translate it instantly for later expansion, and track which teams complete their onboarding. LMS (SCORM) export is built in so you meet compliance or knowledge check standards.

VEED - best for ugc and model versatility

VEED stands apart for its support of multiple video AI models: talking-heads, deepfakes with lip-sync, and visually rich short clips. You can blend scenes, add narration, captions, and brand, plus pick avatars and dub into multiple languages. For user-generated content (UGC), testimonials, or rapid variant generation, the workflow is fluid and flexible. One user testimonial reports up to 60% time saved on project editing.

Its free tier comes with a watermark, and the most advanced models and exports require credits, so budget accordingly. Also, max lengths for some models are short (as little as 10 or 12 seconds), so this isn’t your full-length video suite.